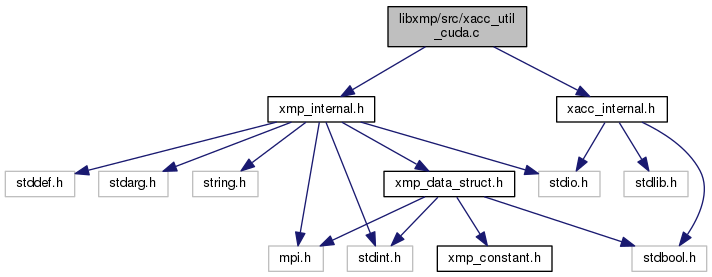

xacc_util_cuda.c File Reference

Include dependency graph for xacc_util_cuda.c:

Functions | |

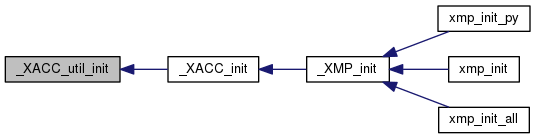

| Return type | Function |
| --- | --- |
| void | _XACC_util_init (void) |
| void | _XACC_queue_create (_XACC_queue_t *queue) |
| void | _XACC_queue_destroy (_XACC_queue_t *queue) |
| void | _XACC_queue_wait (_XACC_queue_t queue) |
| void | _XACC_memory_alloc (_XACC_memory_t *memory, size_t size) |
| void | _XACC_memory_free (_XACC_memory_t *memory) |
| void | _XACC_memory_read (void *addr, _XACC_memory_t memory, size_t memory_offset, size_t size, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_memory_write (_XACC_memory_t memory, size_t memory_offset, void *addr, size_t size, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_memory_copy (_XACC_memory_t dst_memory, size_t dst_memory_offset, _XACC_memory_t src_memory, size_t src_memory_offset, size_t size, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_host_malloc (void **ptr, size_t size) |
| void | _XACC_host_free (void **ptr) |
| void * | _XACC_memory_get_address (_XACC_memory_t memory) |
| void | _XACC_memory_pack_vector (_XACC_memory_t dst_mem, size_t dst_offset, _XACC_memory_t src_mem, size_t src_offset, size_t blocklength, size_t stride, size_t count, size_t typesize, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_memory_unpack_vector (_XACC_memory_t dst_mem, size_t dst_offset, _XACC_memory_t src_mem, size_t src_offset, size_t blocklength, size_t stride, size_t count, size_t typesize, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_memory_pack_vector2 (_XACC_memory_t dst0_mem, size_t dst0_offset, _XACC_memory_t src0_mem, size_t src0_offset, size_t blocklength0, size_t stride0, size_t count0, _XACC_memory_t dst1_mem, size_t dst1_offset, _XACC_memory_t src1_mem, size_t src1_offset, size_t blocklength1, size_t stride1, size_t count1, size_t typesize, _XACC_queue_t queue, bool is_blocking) |
| void | _XACC_memory_unpack_vector2 (_XACC_memory_t dst0_mem, size_t dst0_offset, _XACC_memory_t src0_mem, size_t src0_offset, size_t blocklength0, size_t stride0, size_t count0, _XACC_memory_t dst1_mem, size_t dst1_offset, _XACC_memory_t src1_mem, size_t src1_offset, size_t blocklength1, size_t stride1, size_t count1, size_t typesize, _XACC_queue_t queue, bool is_blocking) |

Function Documentation

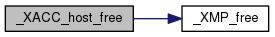

◆ _XACC_host_free()

| void _XACC_host_free | ( | void ** | ptr | ) |

Here is the call graph for this function:

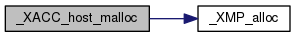

◆ _XACC_host_malloc()

| void _XACC_host_malloc | ( | void **ptr, size_t size | ) |

Here is the call graph for this function:

◆ _XACC_memory_alloc()

| void _XACC_memory_alloc | ( | _XACC_memory_t *memory, size_t size | ) |

◆ _XACC_memory_copy()

| void _XACC_memory_copy | ( | _XACC_memory_t dst_memory, size_t dst_memory_offset, _XACC_memory_t src_memory, size_t src_memory_offset, size_t size, _XACC_queue_t queue, bool is_blocking | ) |

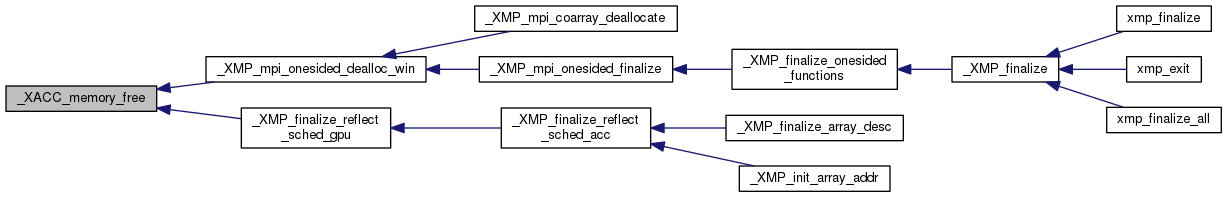

◆ _XACC_memory_free()

| void _XACC_memory_free | ( | _XACC_memory_t * | memory | ) |

◆ _XACC_memory_get_address()

| void * _XACC_memory_get_address | ( | _XACC_memory_t | memory | ) |

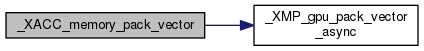

◆ _XACC_memory_pack_vector()

| void _XACC_memory_pack_vector | ( | _XACC_memory_t dst_mem, size_t dst_offset, _XACC_memory_t src_mem, size_t src_offset, size_t blocklength, size_t stride, size_t count, size_t typesize, _XACC_queue_t queue, bool is_blocking | ) |

void _XMP_gpu_pack_vector_async(char * restrict dst, char * restrict src, int count, int blocklength, long stride, size_t typesize, void* async_id);
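The parameter names `blocklength`, `stride`, and `count` suggest a strided layout similar to MPI's vector datatype. A minimal host-side sketch of such a gather is shown below. It assumes all three are counted in elements of `typesize` bytes, since this reference does not state the units. The helper name `pack_vector_host` is hypothetical and is not part of the library.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical host-side reference, not the library implementation.
 * Assumption: blocklength and stride are counted in elements of
 * `typesize` bytes. Gathers `count` blocks from a strided source
 * into a contiguous destination. */
static void pack_vector_host(char *dst, const char *src,
                             size_t blocklength, size_t stride,
                             size_t count, size_t typesize)
{
    size_t block_bytes  = blocklength * typesize; /* bytes copied per block */
    size_t stride_bytes = stride * typesize;      /* distance between block starts */

    for (size_t i = 0; i < count; i++) {
        /* Block i is read at its strided position and written back to back. */
        memcpy(dst + i * block_bytes, src + i * stride_bytes, block_bytes);
    }
}
```

Under these assumptions, packing a 12-element `int` array with `blocklength = 2`, `stride = 4`, and `count = 3` would copy elements 0, 1, 4, 5, 8, and 9 into a contiguous 6-element buffer.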

Here is the call graph for this function:

◆ _XACC_memory_pack_vector2()

| void _XACC_memory_pack_vector2 | ( | _XACC_memory_t dst0_mem, size_t dst0_offset, _XACC_memory_t src0_mem, size_t src0_offset, size_t blocklength0, size_t stride0, size_t count0, _XACC_memory_t dst1_mem, size_t dst1_offset, _XACC_memory_t src1_mem, size_t src1_offset, size_t blocklength1, size_t stride1, size_t count1, size_t typesize, _XACC_queue_t queue, bool is_blocking | ) |

◆ _XACC_memory_read()

| void _XACC_memory_read | ( | void *addr, _XACC_memory_t memory, size_t memory_offset, size_t size, _XACC_queue_t queue, bool is_blocking | ) |

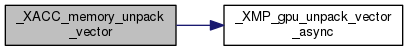

◆ _XACC_memory_unpack_vector()

| void _XACC_memory_unpack_vector | ( | _XACC_memory_t dst_mem, size_t dst_offset, _XACC_memory_t src_mem, size_t src_offset, size_t blocklength, size_t stride, size_t count, size_t typesize, _XACC_queue_t queue, bool is_blocking | ) |

void _XMP_gpu_unpack_vector_async(char * restrict dst, char * restrict src, int count, int blocklength, long stride, size_t typesize, void* async_id);
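Unpacking is presumably the inverse of packing: a contiguous buffer is scattered back into a strided destination. The host-side sketch below illustrates that idea. It assumes `blocklength` and `stride` are counted in elements of `typesize` bytes, which this reference does not confirm. The helper name `unpack_vector_host` is hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical host-side reference, not the library implementation.
 * Assumption: blocklength and stride are counted in elements of
 * `typesize` bytes. Scatters `count` contiguous blocks from `src`
 * into strided positions in `dst`. */
static void unpack_vector_host(char *dst, const char *src,
                               size_t blocklength, size_t stride,
                               size_t count, size_t typesize)
{
    size_t block_bytes  = blocklength * typesize; /* bytes copied per block */
    size_t stride_bytes = stride * typesize;      /* distance between block starts */

    for (size_t i = 0; i < count; i++) {
        /* Block i is read back to back and written at its strided position. */
        memcpy(dst + i * stride_bytes, src + i * block_bytes, block_bytes);
    }
}
```

Bytes in the gaps between destination blocks are left untouched, so the destination can already hold valid data outside the strided region.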

Here is the call graph for this function:

◆ _XACC_memory_unpack_vector2()

| void _XACC_memory_unpack_vector2 | ( | _XACC_memory_t dst0_mem, size_t dst0_offset, _XACC_memory_t src0_mem, size_t src0_offset, size_t blocklength0, size_t stride0, size_t count0, _XACC_memory_t dst1_mem, size_t dst1_offset, _XACC_memory_t src1_mem, size_t src1_offset, size_t blocklength1, size_t stride1, size_t count1, size_t typesize, _XACC_queue_t queue, bool is_blocking | ) |

◆ _XACC_memory_write()

| void _XACC_memory_write | ( | _XACC_memory_t memory, size_t memory_offset, void *addr, size_t size, _XACC_queue_t queue, bool is_blocking | ) |
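Note that `_XACC_memory_read` takes the host address first, while `_XACC_memory_write` takes the memory handle first. The host-only mock below mirrors that argument order to show how a byte offset into a memory object might be applied. Everything in it is an assumption for illustration: `mock_memory_t`, `mock_memory_write`, and `mock_memory_read` are hypothetical names, the offset is assumed to be in bytes, and the `queue` and `is_blocking` parameters are omitted because a plain host copy has nothing to synchronize.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for a device memory handle; the real
 * _XACC_memory_t layout is not given in this reference. */
typedef struct {
    char  *base; /* start of the backing storage */
    size_t size; /* capacity in bytes */
} mock_memory_t;

/* Returns 1 if [offset, offset + size) lies inside the memory object. */
static int mock_in_bounds(mock_memory_t memory, size_t offset, size_t size)
{
    return offset <= memory.size && size <= memory.size - offset;
}

/* Host to memory object: handle first, host address third. */
static int mock_memory_write(mock_memory_t memory, size_t memory_offset,
                             const void *addr, size_t size)
{
    if (!mock_in_bounds(memory, memory_offset, size))
        return -1;
    memcpy(memory.base + memory_offset, addr, size);
    return 0;
}

/* Memory object to host: host address first, handle second. */
static int mock_memory_read(void *addr, mock_memory_t memory,
                            size_t memory_offset, size_t size)
{
    if (!mock_in_bounds(memory, memory_offset, size))
        return -1;
    memcpy(addr, memory.base + memory_offset, size);
    return 0;
}
```

The mock returns an error code on an out-of-range request purely to keep the sketch safe; the documented functions return `void`, and how they report errors is not stated here.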

◆ _XACC_queue_create()

| void _XACC_queue_create | ( | _XACC_queue_t * | queue | ) |

◆ _XACC_queue_destroy()

| void _XACC_queue_destroy | ( | _XACC_queue_t * | queue | ) |

◆ _XACC_queue_wait()

| void _XACC_queue_wait | ( | _XACC_queue_t | queue | ) |