|

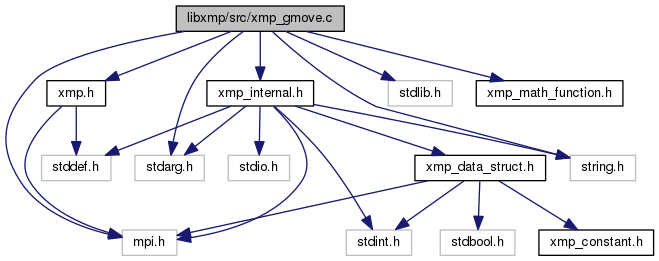

libxmp/libxmpf in Omni Compiler

1.3.4

|

#include <stdarg.h>

#include <stdlib.h>

#include <string.h>

#include "xmp.h"

#include "mpi.h"

#include "xmp_internal.h"

#include "xmp_math_function.h"


| void * | _XMP_get_array_addr (_XMP_array_t *a, int *gidx) |

| |

| void | _XMP_gtol_array_ref_triplet (_XMP_array_t *array, int dim_index, int *lower, int *upper, int *stride) |

| |

| void | _XMP_calc_gmove_rank_array_SCALAR (_XMP_array_t *array, int *ref_index, int *rank_array) |

| |

| int | _XMP_calc_gmove_array_owner_linear_rank_SCALAR (_XMP_array_t *array, int *ref_index) |

| |

| int | xmp_calc_gmove_array_owner_linear_rank_scalar_ (_XMP_array_t **a, int *ref_index) |

| |

| unsigned long long | _XMP_gmove_bcast_ARRAY (void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int type, size_t type_size, int root_rank) |

| |

| int | _XMP_check_gmove_array_ref_inclusion_SCALAR (_XMP_array_t *array, int array_index, int ref_index) |

| |

| void | _XMP_gmove_localcopy_ARRAY (int type, int type_size, void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d) |

| |

| int | _XMP_calc_global_index_HOMECOPY (_XMP_array_t *dst_array, int dst_dim_index, int *dst_l, int *dst_u, int *dst_s, int *src_l, int *src_u, int *src_s) |

| |

| int | _XMP_calc_global_index_BCAST (int dst_dim, int *dst_l, int *dst_u, int *dst_s, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_l, int *src_u, int *src_s) |

| |

| void | _XMP_sendrecv_ARRAY (int type, int type_size, MPI_Datatype *mpi_datatype, _XMP_array_t *dst_array, int *dst_array_nodes_ref, int *dst_lower, int *dst_upper, int *dst_stride, unsigned long long *dst_dim_acc, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_lower, int *src_upper, int *src_stride, unsigned long long *src_dim_acc) |

| |

| void | _XMP_gmove_BCAST_GSCALAR (void *dst_addr, _XMP_array_t *array, int ref_index[]) |

| |

| void | _XMP_gmove_SENDRECV_GSCALAR (void *dst_addr, void *src_addr, _XMP_array_t *dst_array, _XMP_array_t *src_array, int dst_ref_index[], int src_ref_index[]) |

| |

| void | _XMP_gmove_calc_unit_size (_XMP_array_t *dst_array, _XMP_array_t *src_array, unsigned long long *alltoall_unit_size, unsigned long long *dst_pack_unit_size, unsigned long long *src_pack_unit_size, unsigned long long *dst_ser_size, unsigned long long *src_ser_size, int dst_block_dim, int src_block_dim) |

| |

| void | _XMP_align_local_idx (long long int global_idx, int *local_idx, _XMP_array_t *array, int array_axis, int *rank) |

| |

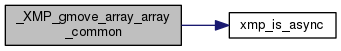

| void | _XMP_gmove_array_array_common (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int mode) |

| |

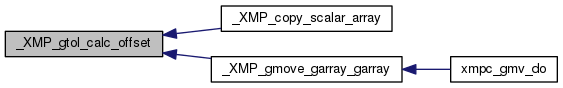

| unsigned long long | _XMP_gtol_calc_offset (_XMP_array_t *a, int g_idx[]) |

| |

| void | _XMP_copy_scalar_array (char *scalar, _XMP_array_t *a, _XMP_comm_set_t *comm_set[]) |

| |

| void | _XMP_gmove_INOUT_SCALAR (_XMP_array_t *dst_array, void *scalar,...) |

| |

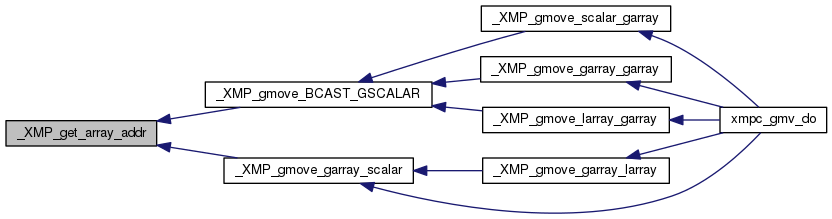

| void | _XMP_gmove_scalar_garray (void *scalar, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

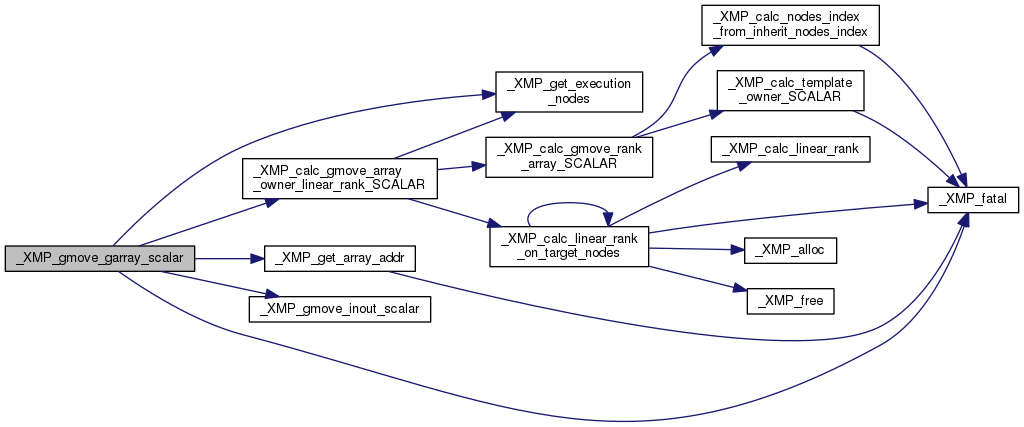

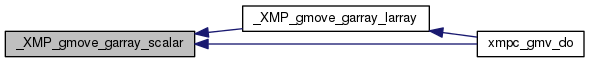

| void | _XMP_gmove_garray_scalar (_XMP_gmv_desc_t *gmv_desc_leftp, void *scalar, int mode) |

| |

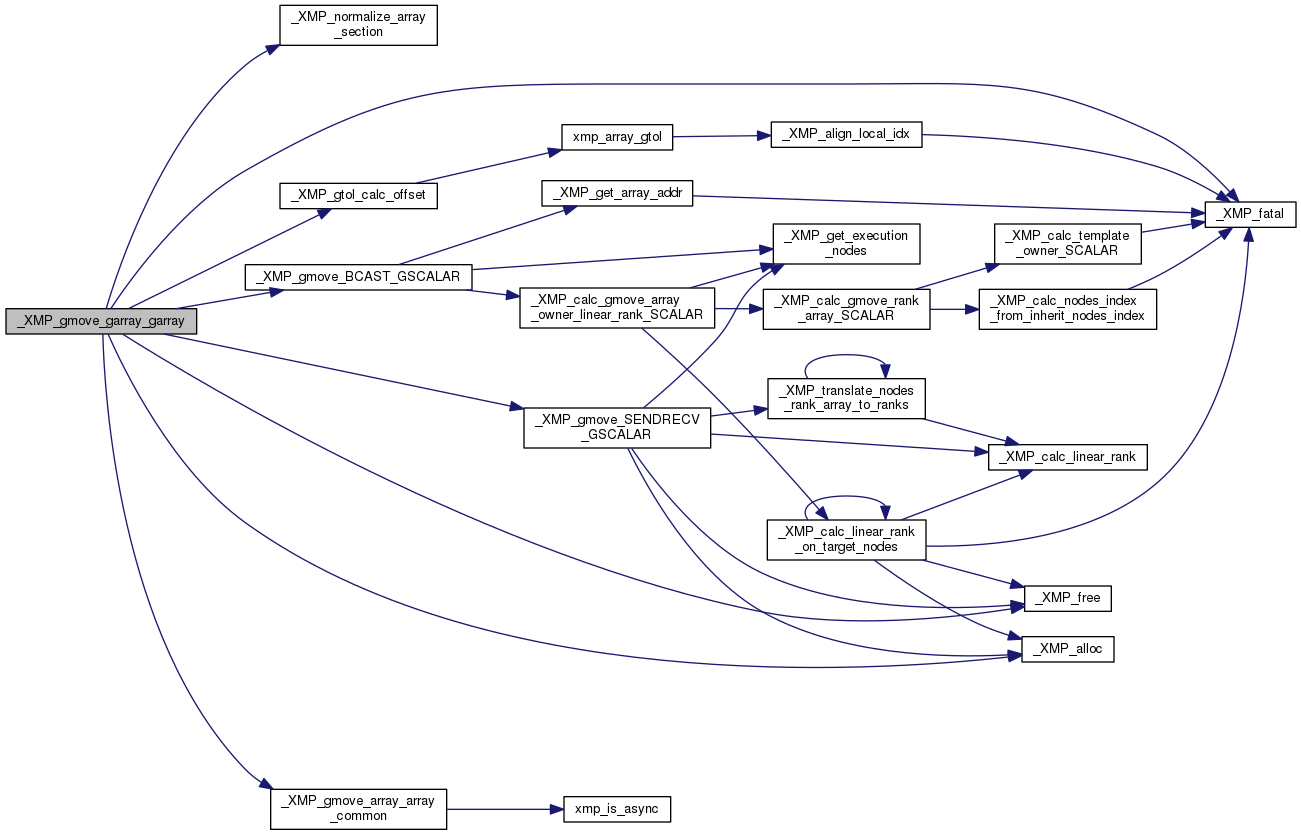

| void | _XMP_gmove_garray_garray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

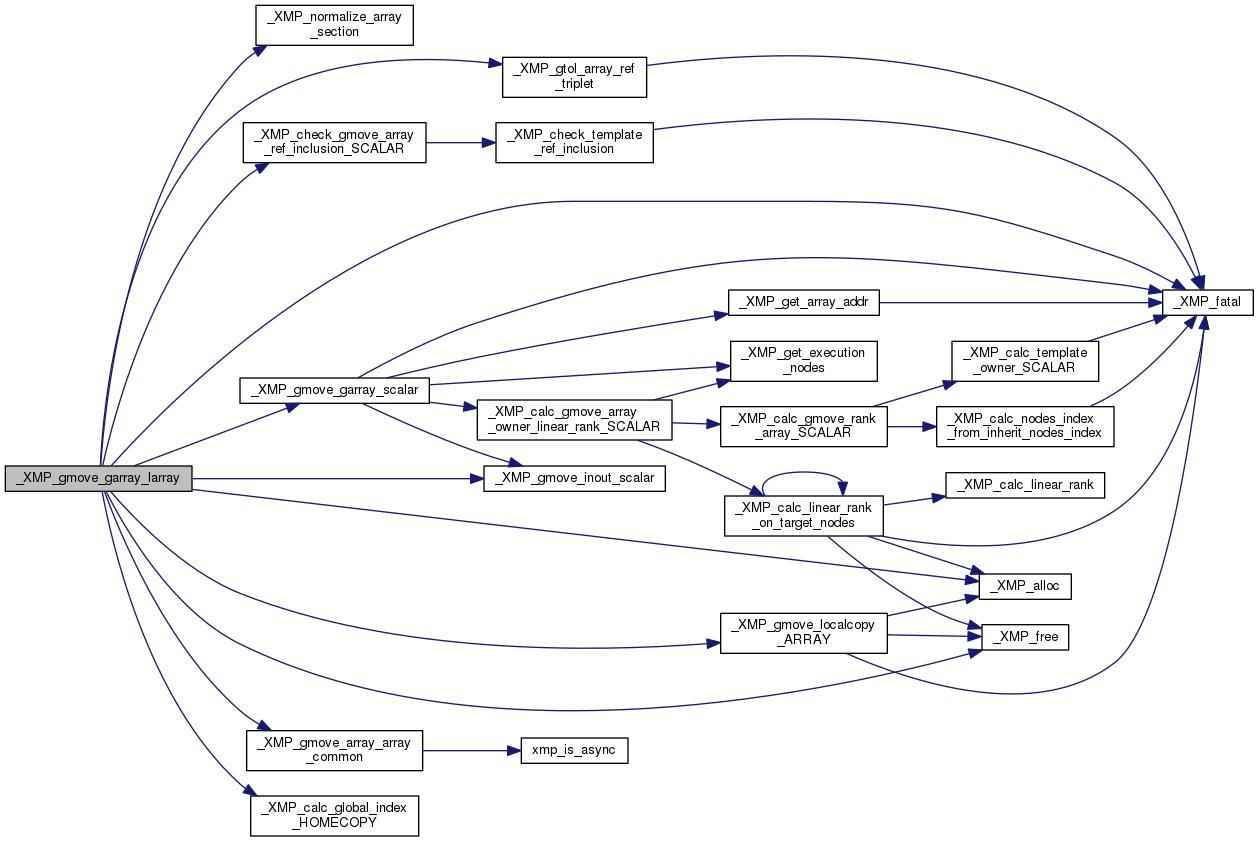

| void | _XMP_gmove_garray_larray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

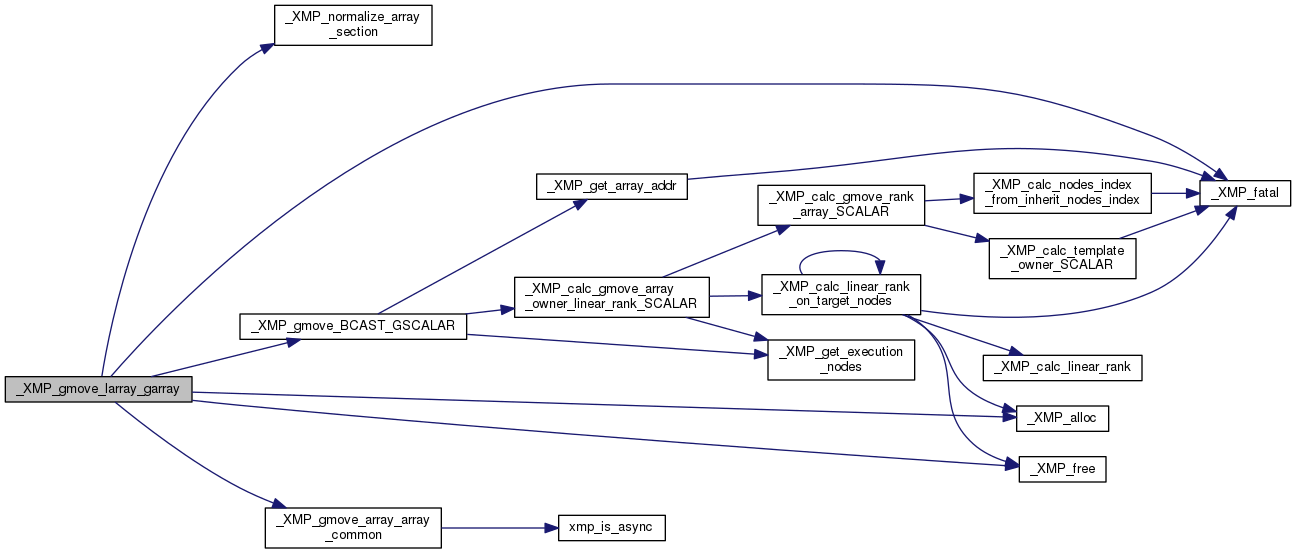

| void | _XMP_gmove_larray_garray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

◆ _XMP_SM_GTOL_BLOCK

`#define _XMP_SM_GTOL_BLOCK(_i, _m, _w)   (((_i) - (_m)) % (_w))`

◆ _XMP_SM_GTOL_BLOCK_CYCLIC

`#define _XMP_SM_GTOL_BLOCK_CYCLIC(_b, _i, _m, _P)   (((((_i) - (_m)) / (((_P) * (_b)))) * (_b)) + (((_i) - (_m)) % (_b)))`

◆ _XMP_SM_GTOL_CYCLIC

`#define _XMP_SM_GTOL_CYCLIC(_i, _m, _P)   (((_i) - (_m)) / (_P))`
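As a worked check of the three global-to-local macros, the sketch below reuses their bodies verbatim and evaluates them on small inputs. The helper `owner_block_cyclic` is hypothetical and does not appear in the listing. It only makes explicit which node a block-cyclic index lands on, assuming nodes are numbered 0..P-1 and blocks are dealt to them round-robin.

```c
#include <assert.h>

/* Bodies copied from the _XMP_SM_GTOL_* macros listed on this page.
   _i: global index, _m: lower bound of the template dimension,
   _w / _b: block width, _P: number of nodes in the distributed dimension. */
#define _XMP_SM_GTOL_BLOCK(_i, _m, _w) (((_i) - (_m)) % (_w))
#define _XMP_SM_GTOL_CYCLIC(_i, _m, _P) (((_i) - (_m)) / (_P))
#define _XMP_SM_GTOL_BLOCK_CYCLIC(_b, _i, _m, _P) \
  (((((_i) - (_m)) / (((_P) * (_b)))) * (_b)) + (((_i) - (_m)) % (_b)))

/* Hypothetical companion helper (not part of the runtime listing):
   the node that owns global index i under block-cyclic(b) over P nodes. */
static int owner_block_cyclic(int b, int i, int m, int P)
{
  return ((i - m) / b) % P;
}
```

For example, with block size 2 over 3 nodes, global index 7 is owned by node 0 and is its fourth local element (local index 3).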

◆ DBG_RANK

◆ MPI_PORTABLE_PLATFORM_H

`#define MPI_PORTABLE_PLATFORM_H`

◆ XMP_DBG

◆ XMP_DBG_OWNER_REGION

| #define XMP_DBG_OWNER_REGION 0 |

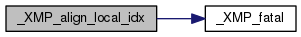

◆ _XMP_align_local_idx()

`void _XMP_align_local_idx(long long int global_idx, int *local_idx, _XMP_array_t *array, int array_axis, int *rank)`

1366 long long tbase = template->info[template_index].ser_lower;

1368 int irank, idiv, imod;

1374 *local_idx = global_idx + offset - base;

1389 idiv = offset/n_info->size;

1390 imod = offset%n_info->size;

1391 *rank = (global_idx + offset - base) % n_info->size;

1392 *local_idx = (global_idx + offset - base) / n_info->size;

1394 *local_idx = *local_idx - (idiv + 1);

1396 *local_idx = *local_idx - idiv;

1403 idiv = (offset/w)/n_info->size;

1404 int imod1 = (offset/w)%n_info->size;

1405 int imod2 = offset%w;

1406 int off = global_idx + offset - base;

1407 *local_idx = (off / (n_info->size*w)) * w + off%w;

1409 *rank=(off/w)% (n_info->size);

1411 if (imod1 == *rank ){

1412 *local_idx = *local_idx - idiv*w-imod2;

1413 } else if (imod1 > *rank){

1414 *local_idx = *local_idx - (idiv+1)*w;

1416 } else if (imod1 == 0){

1417 if (imod1 == *rank ){

1418 *local_idx = *local_idx - idiv*w -imod2;

1420 *local_idx = *local_idx - idiv*w;

1429 for(int i=1;i<(n_info->size+1);i++){

1430 if(global_idx + offset < chunk->mapping_array[i]+ (base - tbase)){

1436 for(int i=1;i<n_info->size+1;i++){

1437 if(offset < chunk->mapping_array[i]+(base-tbase)){

1439 idiv = offset - (chunk->mapping_array[i-1] + (base - tbase) - base);

1443 if (*rank == irank){

1444 *local_idx = *local_idx - idiv;

1451 *local_idx=global_idx - base;

1455 _XMP_fatal("_XMP_: unknown chunk dist_manner");

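The block-cyclic branch of `_XMP_align_local_idx` (excerpt lines 1406-1409) derives both the owner rank and the local index from `off = global_idx + offset - base`. The sketch restates that arithmetic for the special case `offset == 0`, which is an assumption; the offset corrections in lines 1411-1420 are not reproduced. `bc_global_to_local` and `bc_mapping_is_gap_free` are hypothetical names. The second helper checks that every global index gets a unique (rank, local) slot and that each rank's local indices are dense.

```c
#include <assert.h>

/* Hypothetical helper mirroring the block-cyclic branch of
   _XMP_align_local_idx with offset == 0.
   w is the block width, nprocs the number of nodes. */
static void bc_global_to_local(long long global_idx, long long base,
                               int nprocs, int w, int *local_idx, int *rank)
{
  long long off = global_idx - base;  /* position inside the template */
  assert(off >= 0 && nprocs > 0 && w > 0);
  *local_idx = (int)((off / ((long long)nprocs * w)) * w + off % w);
  *rank = (int)((off / w) % nprocs);
}

/* Returns 1 if global indices base..base+n-1 map to distinct (rank, local)
   pairs and each rank's local indices form the dense range 0..count-1. */
static int bc_mapping_is_gap_free(long long base, int n, int nprocs, int w)
{
  int seen[16][64] = {{0}};
  int count[16] = {0};
  assert(nprocs <= 16);
  for (int g = 0; g < n; g++) {
    int l, r;
    bc_global_to_local(base + g, base, nprocs, w, &l, &r);
    if (l < 0 || l >= 64 || seen[r][l])
      return 0;
    seen[r][l] = 1;
    count[r]++;
  }
  for (int r = 0; r < nprocs; r++)
    for (int l = 0; l < count[r]; l++)
      if (!seen[r][l])
        return 0;
  return 1;
}
```

With `w == 1` the same formula reduces to a plain cyclic distribution, which is why the cyclic branch (lines 1391-1392) uses only `n_info->size`.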
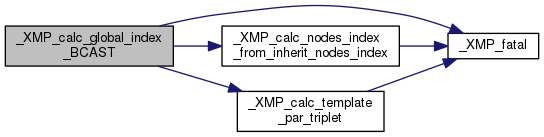

◆ _XMP_calc_global_index_BCAST()

`int _XMP_calc_global_index_BCAST(int dst_dim, int *dst_l, int *dst_u, int *dst_s, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_l, int *src_u, int *src_s)`

423 int dst_dim_index = 0;

424 int array_dim = src_array->dim;

425 for (int i = 0; i < array_dim; i++) {

436 } else if (dst_dim_index < dst_dim) {

439 _XMP_fatal("wrong assign statement for gmove");

443 int onto_nodes_index = template->chunk[template_index].onto_nodes_index;

447 int rank = src_array_nodes_ref[array_nodes_index];

450 int template_lower, template_upper, template_stride;

457 if (_XMP_sched_gmove_triplet(template_lower, template_upper, template_stride,

459 &(src_l[i]), &(src_u[i]), &(src_s[i]),

460 &(dst_l[dst_dim_index]), &(dst_u[dst_dim_index]), &(dst_s[dst_dim_index]))) {

466 } else if (dst_dim_index < dst_dim) {

469 _XMP_fatal("wrong assign statement for gmove");

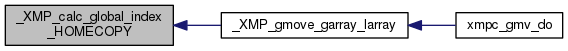

◆ _XMP_calc_global_index_HOMECOPY()

`int _XMP_calc_global_index_HOMECOPY(_XMP_array_t *dst_array, int dst_dim_index, int *dst_l, int *dst_u, int *dst_s, int *src_l, int *src_u, int *src_s)`

411 dst_array, dst_dim_index,

413 src_l, src_u, src_s);

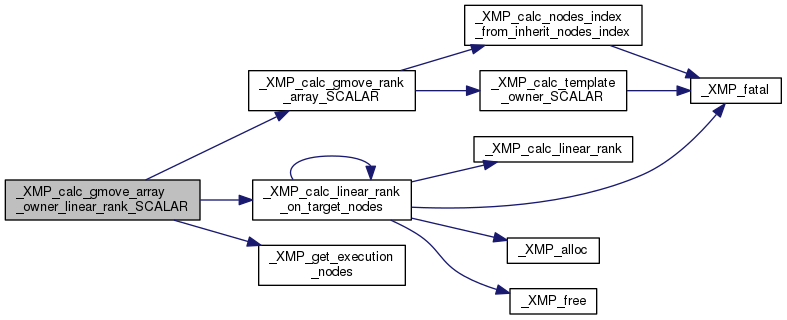

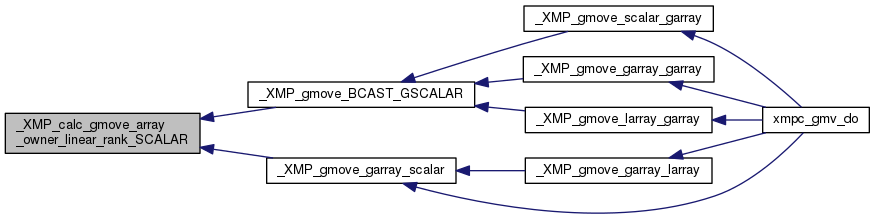

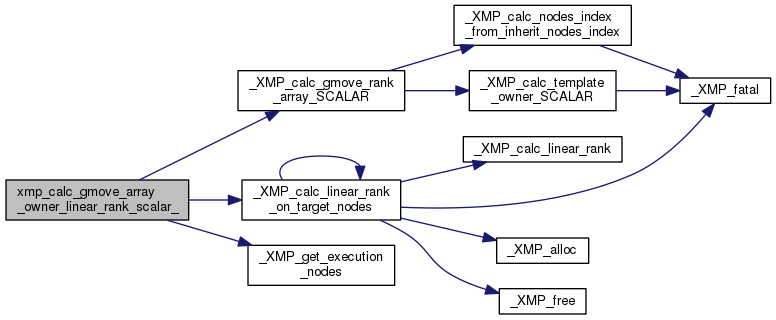

◆ _XMP_calc_gmove_array_owner_linear_rank_SCALAR()

`int _XMP_calc_gmove_array_owner_linear_rank_SCALAR(_XMP_array_t *array, int *ref_index)`

218 int array_nodes_dim = array_nodes->dim;

219 int rank_array[array_nodes_dim];
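`_XMP_calc_gmove_array_owner_linear_rank_SCALAR` builds a per-dimension `rank_array` (one entry per node dimension). Judging from the cross-reference to `_XMP_calc_linear_rank`, it then folds that array into a single rank. The sketch shows one plausible folding, assuming the first node dimension varies fastest (column-major order). Check `_XMP_calc_linear_rank` in xmp_nodes.c before relying on that order. The function name `linear_rank` is hypothetical.

```c
#include <assert.h>

/* Hypothetical: fold per-dimension node coordinates into one linear rank,
   assuming node dimension 0 varies fastest (column-major order).
   node_sizes[i] is the extent of node dimension i. */
static int linear_rank(int ndims, const int *node_sizes, const int *rank_array)
{
  int acc_rank = 0, acc_size = 1;
  for (int i = 0; i < ndims; i++) {
    assert(rank_array[i] >= 0 && rank_array[i] < node_sizes[i]);
    acc_rank += rank_array[i] * acc_size;  /* weight = product of lower extents */
    acc_size *= node_sizes[i];
  }
  return acc_rank;
}
```

On a 2x3 node grid, coordinate (1, 2) maps to 1 + 2*2 = 5 under this ordering.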

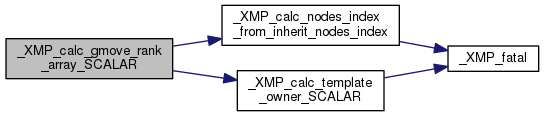

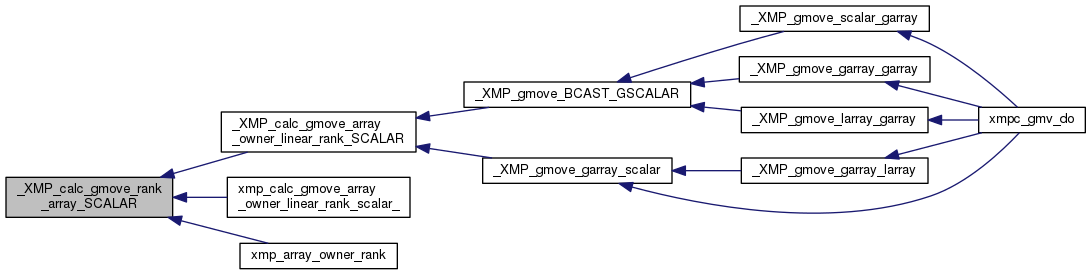

◆ _XMP_calc_gmove_rank_array_SCALAR()

`void _XMP_calc_gmove_rank_array_SCALAR(_XMP_array_t *array, int *ref_index, int *rank_array)`

200 int array_dim = array->dim;

201 for (int i = 0; i < array_dim; i++) {

208 if (onto_nodes_index == -1) continue;

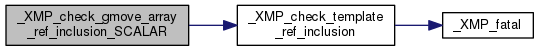

◆ _XMP_check_gmove_array_ref_inclusion_SCALAR()

`int _XMP_check_gmove_array_ref_inclusion_SCALAR(_XMP_array_t *array, int array_index, int ref_index)`

◆ _XMP_copy_scalar_array()

4219 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4220 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4226 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4227 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4228 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4229 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4236 for (c[2] = comm_set[2]; c[2]; c[2] = c[2]->next){

4237 for (i[2] = c[2]->l; i[2] <= c[2]->u; i[2]++){

4238 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4239 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4240 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4241 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4249 for (c[3] = comm_set[3]; c[3]; c[3] = c[3]->next){

4250 for (i[3] = c[3]->l; i[3] <= c[3]->u; i[3]++){

4251 for (c[2] = comm_set[2]; c[2]; c[2] = c[2]->next){

4252 for (i[2] = c[2]->l; i[2] <= c[2]->u; i[2]++){

4253 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4254 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4255 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4256 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4265 for (c[4] = comm_set[4]; c[4]; c[4] = c[4]->next){

4266 for (i[4] = c[4]->l; i[4] <= c[4]->u; i[4]++){

4267 for (c[3] = comm_set[3]; c[3]; c[3] = c[3]->next){

4268 for (i[3] = c[3]->l; i[3] <= c[3]->u; i[3]++){

4269 for (c[2] = comm_set[2]; c[2]; c[2] = c[2]->next){

4270 for (i[2] = c[2]->l; i[2] <= c[2]->u; i[2]++){

4271 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4272 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4273 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4274 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4284 for (c[5] = comm_set[5]; c[5]; c[5] = c[5]->next){

4285 for (i[5] = c[5]->l; i[5] <= c[5]->u; i[5]++){

4286 for (c[4] = comm_set[4]; c[4]; c[4] = c[4]->next){

4287 for (i[4] = c[4]->l; i[4] <= c[4]->u; i[4]++){

4288 for (c[3] = comm_set[3]; c[3]; c[3] = c[3]->next){

4289 for (i[3] = c[3]->l; i[3] <= c[3]->u; i[3]++){

4290 for (c[2] = comm_set[2]; c[2]; c[2] = c[2]->next){

4291 for (i[2] = c[2]->l; i[2] <= c[2]->u; i[2]++){

4292 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4293 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4294 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4295 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){

4306 for (c[6] = comm_set[6]; c[6]; c[6] = c[6]->next){

4307 for (i[6] = c[6]->l; i[6] <= c[6]->u; i[6]++){

4308 for (c[5] = comm_set[5]; c[5]; c[5] = c[5]->next){

4309 for (i[5] = c[5]->l; i[5] <= c[5]->u; i[5]++){

4310 for (c[4] = comm_set[4]; c[4]; c[4] = c[4]->next){

4311 for (i[4] = c[4]->l; i[4] <= c[4]->u; i[4]++){

4312 for (c[3] = comm_set[3]; c[3]; c[3] = c[3]->next){

4313 for (i[3] = c[3]->l; i[3] <= c[3]->u; i[3]++){

4314 for (c[2] = comm_set[2]; c[2]; c[2] = c[2]->next){

4315 for (i[2] = c[2]->l; i[2] <= c[2]->u; i[2]++){

4316 for (c[1] = comm_set[1]; c[1]; c[1] = c[1]->next){

4317 for (i[1] = c[1]->l; i[1] <= c[1]->u; i[1]++){

4318 for (c[0] = comm_set[0]; c[0]; c[0] = c[0]->next){

4319 for (i[0] = c[0]->l; i[0] <= c[0]->u; i[0]++){
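`_XMP_copy_scalar_array` unrolls one loop nest per array rank (1 to 7). Each nest walks a linked list of inclusive `[l, u]` intervals per dimension, with the highest dimension outermost. The 2-D case is sketched below with a stand-in `comm_set_t`. That type is hypothetical, and the real `_XMP_comm_set_t` fields beyond `l`, `u`, and `next` are not shown on this page. The sketch also uses a `double` element type and a column-major local address, which are assumptions in place of the runtime's byte-wise copy through `char *scalar`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for _XMP_comm_set_t: a linked list of inclusive
   [l, u] index intervals for one dimension. */
typedef struct comm_set {
  int l, u;
  struct comm_set *next;
} comm_set_t;

/* Write one scalar into every element of a 2-D local array selected by the
   interval lists of dim 0 and dim 1 (dim 1 outermost, as in the excerpt).
   ld is the leading dimension; dim 0 is contiguous. Returns elements written. */
static int fill_2d(double *a, int ld, double scalar,
                   const comm_set_t *cs0, const comm_set_t *cs1)
{
  int written = 0;
  for (const comm_set_t *c1 = cs1; c1; c1 = c1->next)
    for (int i1 = c1->l; i1 <= c1->u; i1++)
      for (const comm_set_t *c0 = cs0; c0; c0 = c0->next)
        for (int i0 = c0->l; i0 <= c0->u; i0++) {
          a[i0 + (size_t)i1 * ld] = scalar;
          written++;
        }
  return written;
}
```

Representing each dimension as a list of intervals lets a non-contiguous owned region (for example rows 0-1 and 3) be filled without scanning the whole local array.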

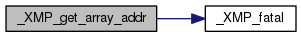

◆ _XMP_get_array_addr()

54 for (int i = 0; i < ndims; i++){

79 lidx = gidx[i] - glb + l_shadow;

87 lidx = (gidx[i] + offset - t_lb) / np;

96 lidx = w * ((gidx[i] + offset - t_lb) / (np * w))

97 + ((gidx[i] + offset - t_lb) % w);

101 _XMP_fatal("_XMP_get_array_addr: unknown align_manner");

106 ret = (char *)ret + lidx * ai->dim_acc * type_size;
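`_XMP_get_array_addr` converts each global subscript to a local index according to the alignment manner. The block case uses `gidx - glb + l_shadow`, and the cyclic and block-cyclic cases use the formulas at lines 87 and 96-97. It then advances the base pointer by `lidx * dim_acc * type_size`. The sketch covers only the block case. `dim_info_t` and `block_array_addr` are hypothetical names, and reading `glb` as the first global index held locally is an inference from the formula.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-dimension record; field names echo the excerpt's locals
   (glb, l_shadow, dim_acc) but the struct itself is illustrative. */
typedef struct {
  int glb;                     /* first global index held locally */
  int l_shadow;                /* lower shadow width in this dimension */
  unsigned long long dim_acc;  /* element stride of this dimension */
} dim_info_t;

/* Address of global element gidx[] in the local block of a block-distributed
   array: per-dimension local index, then base + sum(lidx * dim_acc) * type_size. */
static void *block_array_addr(void *base, size_t type_size, int ndims,
                              const dim_info_t *info, const int *gidx)
{
  char *ret = base;
  for (int i = 0; i < ndims; i++) {
    int lidx = gidx[i] - info[i].glb + info[i].l_shadow;
    assert(lidx >= 0);
    ret += (size_t)lidx * info[i].dim_acc * type_size;
  }
  return ret;
}
```

For a local `double loc[3][6]` whose second dimension holds global indices starting at 10 behind a one-element lower shadow, global (2, 12) resolves to `&loc[2][3]`.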

◆ _XMP_gmove_array_array_common()

`void _XMP_gmove_array_array_common(_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int mode)`

2042 if (_XMP_gmove_garray_garray_opt(gmv_desc_leftp, gmv_desc_rightp,

2043 dst_l, dst_u, dst_s, dst_d,

2044 src_l, src_u, src_s, src_d)) return;

2048 _XMP_gmove_1to1(gmv_desc_leftp, gmv_desc_rightp, mode);

◆ _XMP_gmove_bcast_ARRAY()

`unsigned long long _XMP_gmove_bcast_ARRAY(void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int type, size_t type_size, int root_rank)`

278 unsigned long long dst_buffer_elmts = 1;

279 for (int i = 0; i < dst_dim; i++) {

283 void *buffer = _XMP_alloc(dst_buffer_elmts * type_size);

288 if (root_rank == (exec_nodes->comm_rank)) {

289 unsigned long long src_buffer_elmts = 1;

290 for (int i = 0; i < src_dim; i++) {

294 if (dst_buffer_elmts != src_buffer_elmts) {

295 _XMP_fatal("wrong assign statement for gmove");

297 (*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

301 _XMP_gmove_bcast(buffer, type_size, dst_buffer_elmts, root_rank);

303 (*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);

306 return dst_buffer_elmts;

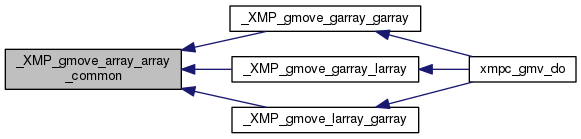

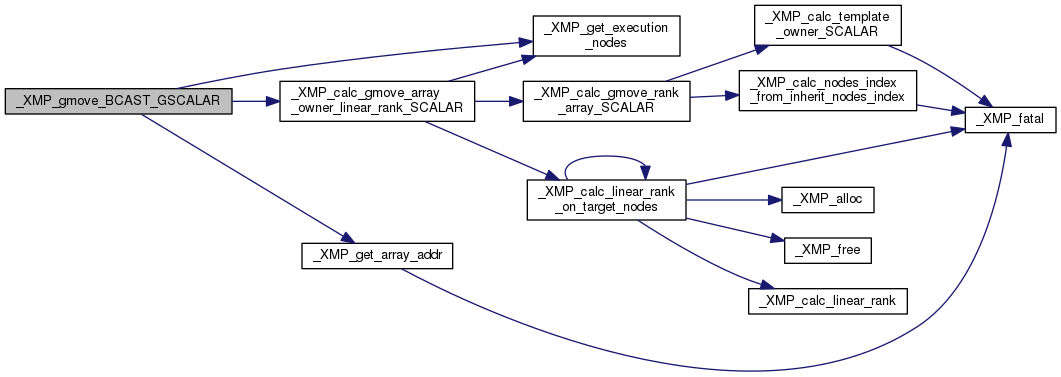

◆ _XMP_gmove_BCAST_GSCALAR()

`void _XMP_gmove_BCAST_GSCALAR(void *dst_addr, _XMP_array_t *array, int ref_index[])`

692 void *src_addr = NULL;

697 memcpy(dst_addr, src_addr, type_size);

706 memcpy(dst_addr, src_addr, type_size);

709 _XMP_gmove_bcast(dst_addr, type_size, 1, root_rank);

◆ _XMP_gmove_calc_unit_size()

`void _XMP_gmove_calc_unit_size(_XMP_array_t *dst_array, _XMP_array_t *src_array, unsigned long long *alltoall_unit_size, unsigned long long *dst_pack_unit_size, unsigned long long *src_pack_unit_size, unsigned long long *dst_ser_size, unsigned long long *src_ser_size, int dst_block_dim, int src_block_dim)`

1178 int dst_dim=dst_array->dim, src_dim=src_array->dim;

1181 *alltoall_unit_size=1;

1182 *dst_pack_unit_size=1;

1183 *src_pack_unit_size=1;

1188 for(int i=0; i<dst_dim; i++){

1189 if(i==dst_block_dim){

1190 dst_chunk_size[dst_block_dim]=dst_array->info[dst_block_dim].par_size;

1191 } else if(i==src_block_dim){

1192 dst_chunk_size[src_block_dim]=src_array->info[src_block_dim].par_size;

1197 for(int i=0; i<src_dim; i++){

1198 if(i==dst_block_dim){

1200 } else if(i==src_block_dim){

1205 *alltoall_unit_size *= src_chunk_size[i];

1207 for(int i=0; i<dst_block_dim+1; i++){

1208 *src_pack_unit_size *= src_chunk_size[i];

1211 for(int i=0; i<src_block_dim+1; i++){

1212 *dst_pack_unit_size *= dst_chunk_size[i];

1218 for(int i=dst_dim-1; i> -1; i--){

1219 if(i==dst_block_dim){

1221 } else if(i==src_block_dim){

1227 for(int i=src_dim-1; i>-1; i--){

1228 if(i==dst_block_dim){

1230 } else if(i==src_block_dim){

1235 *alltoall_unit_size *= src_chunk_size[i];

1237 for(int i=src_array->dim-1; i>dst_block_dim-1; i--){

1238 *src_pack_unit_size *= src_chunk_size[i];

1241 for(int i=src_array->dim-1; i>src_block_dim-1; i--){

1242 *dst_pack_unit_size *= dst_chunk_size[i];

◆ _XMP_gmove_garray_garray()

4782 unsigned long long dst_total_elmts = 1;

4783 int dst_dim = dst_array->dim;

4784 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

4785 unsigned long long dst_d[dst_dim];

4786 int dst_scalar_flag = 1;

4787 for (int i = 0; i < dst_dim; i++) {

4788 dst_l[i] = gmv_desc_leftp->lb[i];

4789 dst_u[i] = gmv_desc_leftp->ub[i];

4790 dst_s[i] = gmv_desc_leftp->st[i];

4794 dst_scalar_flag &= (dst_s[i] == 0);

4798 unsigned long long src_total_elmts = 1;

4799 int src_dim = src_array->dim;

4800 int src_l[src_dim], src_u[src_dim], src_s[src_dim];

4801 unsigned long long src_d[src_dim];

4802 int src_scalar_flag = 1;

4803 for (int i = 0; i < src_dim; i++) {

4804 src_l[i] = gmv_desc_rightp->lb[i];

4805 src_u[i] = gmv_desc_rightp->ub[i];

4806 src_s[i] = gmv_desc_rightp->st[i];

4810 src_scalar_flag &= (src_s[i] == 0);

4813 if (dst_total_elmts != src_total_elmts && !src_scalar_flag){

4814 _XMP_fatal("wrong assign statement for gmove");

4822 if (dst_scalar_flag && src_scalar_flag){

4826 dst_array, src_array,

4830 else if (!dst_scalar_flag && src_scalar_flag){

4835 _XMP_gmove_gsection_scalar(dst_array, dst_l, dst_u, dst_s, tmp);

4842 dst_l, dst_u, dst_s, dst_d,

4843 src_l, src_u, src_s, src_d,
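`_XMP_gmove_garray_garray` (like the local-array variants) first decides whether each side is a scalar reference (every stride 0) or a section. It rejects mismatched element counts unless the right-hand side is a scalar, and then dispatches to the scalar, section-from-scalar, or general path. The sketch reproduces that decision table. Treating a zero stride as one element in `triplet_count` is an assumption, because the lines that accumulate `dst_total_elmts` are not in the excerpt. All names in the sketch are hypothetical.

```c
#include <assert.h>

/* Elements in the inclusive triplet l:u:s; a stride of 0 is treated as a
   single scalar reference (assumption, see lead-in). */
static unsigned long long triplet_count(int l, int u, int s)
{
  if (s == 0)
    return 1;
  assert(s > 0 && u >= l);
  return (unsigned long long)((u - l) / s + 1);
}

enum gmove_kind { SCALAR_TO_SCALAR, SCALAR_TO_SECTION, SECTION_TO_SECTION, SHAPE_MISMATCH };

static enum gmove_kind classify(int ndst, const int *dl, const int *du, const int *ds,
                                int nsrc, const int *sl, const int *su, const int *ss)
{
  unsigned long long dn = 1, sn = 1;
  int dscalar = 1, sscalar = 1;
  for (int i = 0; i < ndst; i++) {
    dn *= triplet_count(dl[i], du[i], ds[i]);
    dscalar &= (ds[i] == 0);
  }
  for (int i = 0; i < nsrc; i++) {
    sn *= triplet_count(sl[i], su[i], ss[i]);
    sscalar &= (ss[i] == 0);
  }
  if (dn != sn && !sscalar)
    return SHAPE_MISMATCH;      /* the runtime calls _XMP_fatal here */
  if (dscalar && sscalar)
    return SCALAR_TO_SCALAR;
  if (sscalar)
    return SCALAR_TO_SECTION;   /* cf. _XMP_gmove_gsection_scalar */
  return SECTION_TO_SECTION;
}
```

Because shapes are compared only by total element count, a 10x3 section can be assigned from a 30-element 1-D section, which matches the single `dst_total_elmts != src_total_elmts` test in the excerpt.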

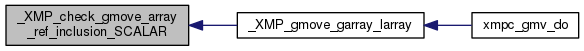

◆ _XMP_gmove_garray_larray()

4857 int type = dst_array->type;

4858 size_t type_size = dst_array->type_size;

4863 unsigned long long dst_total_elmts = 1;

4865 int dst_dim = dst_array->dim;

4866 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

4867 unsigned long long dst_d[dst_dim];

4868 int dst_scalar_flag = 1;

4869 for (int i = 0; i < dst_dim; i++) {

4870 dst_l[i] = gmv_desc_leftp->lb[i];

4871 dst_u[i] = gmv_desc_leftp->ub[i];

4872 dst_s[i] = gmv_desc_leftp->st[i];

4876 dst_scalar_flag &= (dst_s[i] == 0);

4880 unsigned long long src_total_elmts = 1;

4881 void *src_addr = gmv_desc_rightp->local_data;

4882 int src_dim = gmv_desc_rightp->ndims;

4883 int src_l[src_dim], src_u[src_dim], src_s[src_dim];

4884 unsigned long long src_d[src_dim];

4885 int src_scalar_flag = 1;

4886 for (int i = 0; i < src_dim; i++) {

4887 src_l[i] = gmv_desc_rightp->lb[i];

4888 src_u[i] = gmv_desc_rightp->ub[i];

4889 src_s[i] = gmv_desc_rightp->st[i];

4890 if (i == 0) src_d[i] = 1;

4891 else src_d[i] = src_d[i-1] * (gmv_desc_rightp->a_ub[i] - gmv_desc_rightp->a_lb[i] + 1);

4894 src_scalar_flag &= (src_s[i] == 0);

4897 if (dst_total_elmts != src_total_elmts && !src_scalar_flag){

4898 _XMP_fatal("wrong assign statement for gmove");

4901 char *scalar = (char *)src_addr;

4902 if (src_scalar_flag){

4903 for (int i = 0; i < src_dim; i++){

4904 scalar += ((src_l[i] - gmv_desc_rightp->a_lb[i]) * src_d[i] * type_size);

4909 if (src_scalar_flag){

4910 #ifdef _XMP_MPI3_ONESIDED

4913 _XMP_fatal("Not supported gmove in/out on non-MPI3 environments");

4918 dst_l, dst_u, dst_s, dst_d,

4919 src_l, src_u, src_s, src_d,

4925 if (dst_scalar_flag && src_scalar_flag){

4931 for (int i = 0; i < dst_dim; i++) {

4936 dst_addr, dst_dim, dst_l, dst_u, dst_s, dst_d,

4937 src_addr, src_dim, src_l, src_u, src_s, src_d);

4941 for (int i = 0; i < src_dim; i++){

4942 src_l[i] -= gmv_desc_rightp->a_lb[i];

4943 src_u[i] -= gmv_desc_rightp->a_lb[i];

4947 int src_dim_index = 0;

4948 unsigned long long dst_buffer_elmts = 1;

4949 unsigned long long src_buffer_elmts = 1;

4950 for (int i = 0; i < dst_dim; i++) {

4952 if (dst_elmts == 1) {

4957 dst_buffer_elmts *= dst_elmts;

4961 src_elmts = _XMP_M_COUNT_TRIPLETi(src_l[src_dim_index], src_u[src_dim_index], src_s[src_dim_index]);

4962 if (src_elmts != 1) {

4964 } else if (src_dim_index < src_dim) {

4967 _XMP_fatal("wrong assign statement for gmove");

4972 &(dst_l[i]), &(dst_u[i]), &(dst_s[i]),

4973 &(src_l[src_dim_index]), &(src_u[src_dim_index]), &(src_s[src_dim_index]))) {

4974 src_buffer_elmts *= src_elmts;

4984 for (int i = src_dim_index; i < src_dim; i++) {

4989 if (dst_buffer_elmts != src_buffer_elmts) {

4990 _XMP_fatal("wrong assign statement for gmove");

4993 void *buffer = _XMP_alloc(dst_buffer_elmts * type_size);

4994 (*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

4995 (*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);
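When one side of the gmove is a local (non-distributed) array, the runtime builds a per-dimension stride array (`src_d` or `dst_d`). For a scalar reference, it then adds `(l[i] - a_lb[i]) * d[i] * type_size` to the base address. The sketch shows that offset calculation under the conventional column-major rule, where the stride of dimension i is the product of the extents of dimensions 0..i-1. This rule is an assumption and is not copied from the excerpt. The names `colmajor_strides` and `scalar_offset` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Conventional column-major strides (assumption): d[0] = 1,
   d[i] = d[i-1] * extent of dimension i-1. */
static void colmajor_strides(int ndims, const int *a_lb, const int *a_ub,
                             unsigned long long *d)
{
  for (int i = 0; i < ndims; i++)
    d[i] = (i == 0) ? 1
                    : d[i - 1] * (unsigned long long)(a_ub[i - 1] - a_lb[i - 1] + 1);
}

/* Byte offset of element idx[] from the start of the local array,
   in the same form as the scalar address computation in the excerpt. */
static size_t scalar_offset(int ndims, const int *idx, const int *a_lb,
                            const unsigned long long *d, size_t type_size)
{
  size_t off = 0;
  for (int i = 0; i < ndims; i++)
    off += (size_t)(idx[i] - a_lb[i]) * d[i] * type_size;
  return off;
}
```

For a Fortran-style array `a(1:4, 0:2)` of 8-byte elements, element `a(3, 2)` lies (3-1)*1 + (2-0)*4 = 10 elements, or 80 bytes, past the base address.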

◆ _XMP_gmove_garray_scalar()

`void _XMP_gmove_garray_scalar(_XMP_gmv_desc_t *gmv_desc_leftp, void *scalar, int mode)`

4737 void *dst_addr = NULL;

4740 int ndims = gmv_desc_leftp->ndims;

4743 for (int i=0;i<ndims;i++)

4744 lidx[i] = gmv_desc_leftp->lb[i];

4748 if (owner_rank == exec_nodes->comm_rank){

4750 memcpy(dst_addr, scalar, type_size);

4754 #ifdef _XMP_MPI3_ONESIDED

4757 _XMP_fatal("Not supported gmove in/out on non-MPI3 environments");

4761 _XMP_fatal("_XMPF_gmove_garray_scalar: wrong gmove mode");

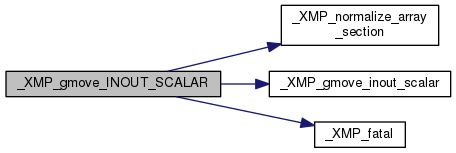

◆ _XMP_gmove_INOUT_SCALAR()

`void _XMP_gmove_INOUT_SCALAR(_XMP_array_t *dst_array, void *scalar, ...)`

4647 va_start(args, scalar);

4651 int dst_dim = dst_array->dim;

4652 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

4654 for (int i = 0; i < dst_dim; i++) {

4655 dst_l[i] = va_arg(args, int);

4656 int size = va_arg(args, int);

4657 dst_s[i] = va_arg(args, int);

4658 dst_u[i] = dst_l[i] + (size - 1) * dst_s[i];

4660 va_arg(args, unsigned long long);

4671 #ifdef _XMP_MPI3_ONESIDED

4674 for (int i = 0; i < dst_dim; i++){

4679 gmv_desc.ndims = dst_dim;

4681 gmv_desc.a_desc = dst_array;

4684 gmv_desc.a_lb = NULL;

4685 gmv_desc.a_ub = NULL;

4687 gmv_desc.kind = kind;

4688 gmv_desc.lb = dst_l;

4689 gmv_desc.ub = dst_u;

4690 gmv_desc.st = dst_s;

4694 _XMP_fatal("Not supported gmove in/out on non-MPI3 environments");

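`_XMP_gmove_INOUT_SCALAR` reads its section description from the variadic argument list. For each dimension it reads an `int` lower bound, an `int` element count, and an `int` stride, then reads one more `unsigned long long` and discards it. The upper bound is derived as `l + (size - 1) * s`. The sketch reproduces that parsing order. `read_triplets` is a hypothetical name, and passing the rank and output arrays as fixed arguments is a choice made for the example.

```c
#include <assert.h>
#include <stdarg.h>

/* Reads ndims groups of (int lower, int size, int stride, unsigned long long)
   from the variadic list, following the order in the excerpt, and derives
   the inclusive upper bound of each triplet. */
static void read_triplets(int ndims, int *l, int *u, int *s, ...)
{
  va_list args;
  va_start(args, s);
  for (int i = 0; i < ndims; i++) {
    l[i] = va_arg(args, int);
    int size = va_arg(args, int);
    s[i] = va_arg(args, int);
    u[i] = l[i] + (size - 1) * s[i];
    (void)va_arg(args, unsigned long long);  /* fourth value is skipped */
  }
  va_end(args);
}
```

Callers must pass the fourth value with the exact type `unsigned long long` (for example `0ULL`). A plain `0` would be promoted only to `int`, and `va_arg` would then read it with the wrong type.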
◆ _XMP_gmove_larray_garray()

5008 size_t type_size = src_array->type_size;

5011 unsigned long long dst_total_elmts = 1;

5012 int dst_dim = gmv_desc_leftp->ndims;

5013 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

5014 unsigned long long dst_d[dst_dim];

5015 int dst_scalar_flag = 1;

5016 for(int i=0;i<dst_dim;i++){

5017 dst_l[i] = gmv_desc_leftp->lb[i];

5018 dst_u[i] = gmv_desc_leftp->ub[i];

5019 dst_s[i] = gmv_desc_leftp->st[i];

5020 dst_d[i] = (i == 0)? 1 : dst_d[i-1]*(gmv_desc_leftp->a_ub[i] - gmv_desc_leftp->a_lb[i]+1);

5024 dst_scalar_flag &= (dst_s[i] == 0);

5028 unsigned long long src_total_elmts = 1;

5029 int src_dim = src_array->dim;

5030 int src_l[src_dim], src_u[src_dim], src_s[src_dim];

5031 unsigned long long src_d[src_dim];

5032 int src_scalar_flag = 1;

5033 for(int i=0;i<src_dim;i++) {

5034 src_l[i] = gmv_desc_rightp->lb[i];

5035 src_u[i] = gmv_desc_rightp->ub[i];

5036 src_s[i] = gmv_desc_rightp->st[i];

5040 src_scalar_flag &= (src_s[i] == 0);

5043 if(dst_total_elmts != src_total_elmts && !src_scalar_flag){

5044 _XMP_fatal("wrong assign statement for gmove");

5048 if (dst_scalar_flag && src_scalar_flag){

5049 char *dst_addr = (char *)gmv_desc_leftp->local_data;

5050 for (int i = 0; i < dst_dim; i++)

5051 dst_addr += ((dst_l[i] - gmv_desc_leftp->a_lb[i]) * dst_d[i]) * type_size;

5055 else if (!dst_scalar_flag && src_scalar_flag){

5058 char *dst_addr = (char *)gmv_desc_leftp->local_data;

5061 for (int i = 0; i < dst_dim; i++) {

5062 dst_l[i] -= gmv_desc_leftp->a_lb[i];

5063 dst_u[i] -= gmv_desc_leftp->a_lb[i];

5066 _XMP_gmove_lsection_scalar(dst_addr, dst_dim, dst_l, dst_u, dst_s, dst_d, tmp, type_size);

5076 dst_l, dst_u, dst_s, dst_d,

5077 src_l, src_u, src_s, src_d,

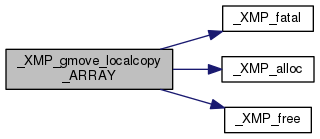

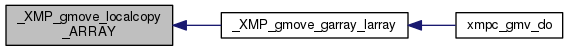

◆ _XMP_gmove_localcopy_ARRAY()

`void _XMP_gmove_localcopy_ARRAY(int type, int type_size, void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d)`

327 unsigned long long dst_buffer_elmts = 1;

328 for (int i = 0; i < dst_dim; i++) {

332 unsigned long long src_buffer_elmts = 1;

333 for (int i = 0; i < src_dim; i++) {

337 if (dst_buffer_elmts != src_buffer_elmts) {

338 _XMP_fatal("wrong assign statement for gmove");

341 void *buffer = _XMP_alloc(dst_buffer_elmts * type_size);

342 (*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

343 (*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);
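`_XMP_gmove_localcopy_ARRAY` (and the root side of `_XMP_gmove_bcast_ARRAY`) first checks that both sections have the same element count. It then packs the source section into a contiguous buffer with `_xmp_pack_array` and unpacks that buffer into the destination with `_xmp_unpack_array`. The 2-D sketch below follows the same pack-then-unpack pattern. The helper names, the byte-wise element copy, and the traversal order (dimension 1 innermost) are assumptions. The copy is correct as long as pack and unpack traverse their sections in the same order.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Elements in the inclusive triplet l:u:s, assuming s > 0 and l <= u. */
static size_t count_triplet(int l, int u, int s)
{
  return (size_t)((u - l) / s + 1);
}

/* Walk the 2-D section l:u:s of arr (strides d[] in elements, dim 1
   innermost) and gather it into buf (pack != 0) or scatter buf into it. */
static void walk_section_2d(char *buf, char *arr, size_t type_size,
                            const int *l, const int *u, const int *s,
                            const unsigned long long *d, int pack)
{
  size_t n = 0;
  for (int i0 = l[0]; i0 <= u[0]; i0 += s[0])
    for (int i1 = l[1]; i1 <= u[1]; i1 += s[1], n++) {
      char *elem = arr + ((size_t)i0 * d[0] + (size_t)i1 * d[1]) * type_size;
      if (pack)
        memcpy(buf + n * type_size, elem, type_size);
      else
        memcpy(elem, buf + n * type_size, type_size);
    }
}

/* Pack the src section into a temporary buffer, then unpack it into dst.
   Returns -1 where the runtime would call _XMP_fatal (counts differ). */
static int local_copy_2d(char *dst, const int *dl, const int *du, const int *ds,
                         const unsigned long long *dd,
                         char *src, const int *sl, const int *su, const int *ss,
                         const unsigned long long *sd, size_t type_size)
{
  size_t n_dst = count_triplet(dl[0], du[0], ds[0]) * count_triplet(dl[1], du[1], ds[1]);
  size_t n_src = count_triplet(sl[0], su[0], ss[0]) * count_triplet(sl[1], su[1], ss[1]);
  if (n_dst != n_src)
    return -1;
  char *buffer = malloc(n_dst * type_size);
  if (buffer == NULL)
    return -2;
  walk_section_2d(buffer, src, type_size, sl, su, ss, sd, 1);  /* pack */
  walk_section_2d(buffer, dst, type_size, dl, du, ds, dd, 0);  /* unpack */
  free(buffer);
  return 0;
}
```

Copying rows 0:3:2 and columns 1:4:3 of a `double a[4][5]` into a full `double b[2][2]` moves `a[0][1]`, `a[0][4]`, `a[2][1]`, and `a[2][4]` into `b` in that order.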

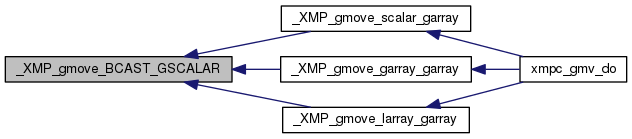

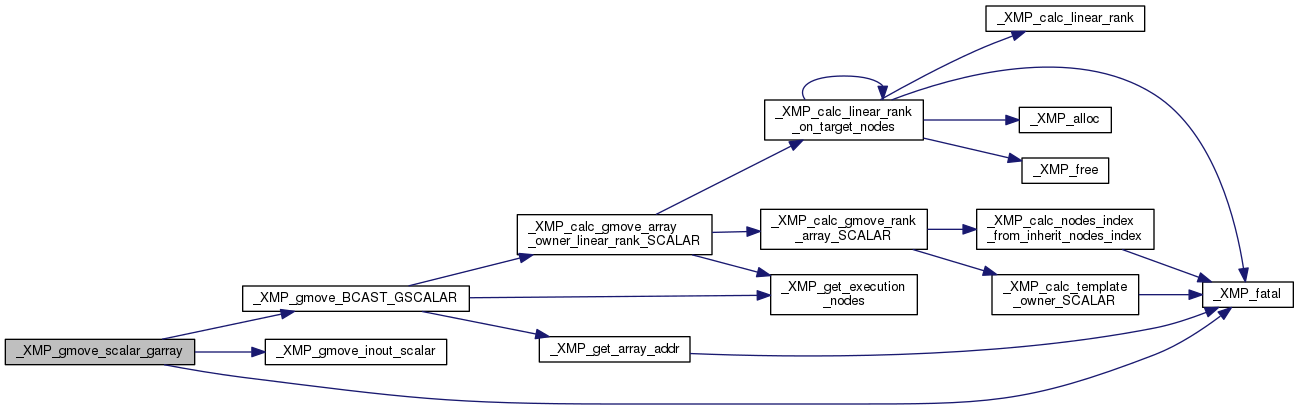

◆ _XMP_gmove_scalar_garray()

`void _XMP_gmove_scalar_garray(void *scalar, _XMP_gmv_desc_t *gmv_desc_rightp, int mode)`

4708 int ndims = gmv_desc_rightp->ndims;

4711 for (int i=0;i<ndims;i++)

4712 ridx[i] = gmv_desc_rightp->lb[i];

4717 #ifdef _XMP_MPI3_ONESIDED

4720 _XMP_fatal("Not supported gmove in/out on non-MPI3 environments");

4724 _XMP_fatal("_XMPF_gmove_scalar_garray: wrong gmove mode");

◆ _XMP_gmove_SENDRECV_GSCALAR()

`void _XMP_gmove_SENDRECV_GSCALAR(void *dst_addr, void *src_addr, _XMP_array_t *dst_array, _XMP_array_t *src_array, int dst_ref_index[], int src_ref_index[])`

896 memcpy(dst_addr, src_addr, type_size);

900 _XMP_nodes_ref_t *dst_ref = _XMP_create_gmove_nodes_ref_SCALAR(dst_array, dst_ref_index);

901 _XMP_nodes_ref_t *src_ref = _XMP_create_gmove_nodes_ref_SCALAR(src_array, src_ref_index);

907 MPI_Comm *exec_comm = exec_nodes->comm;

911 int dst_ranks[dst_shrink_nodes_size];

912 if (dst_shrink_nodes_size == 1) {

920 int src_ranks[src_shrink_nodes_size];

921 if (src_shrink_nodes_size == 1) {

928 MPI_Request gmove_request;

929 for (int i = 0; i < dst_shrink_nodes_size; i++) {

930 if (dst_ranks[i] == exec_rank) {

934 if ((dst_shrink_nodes_size == src_shrink_nodes_size) ||

935 (dst_shrink_nodes_size < src_shrink_nodes_size)) {

936 src_rank = src_ranks[i];

938 src_rank = src_ranks[i % src_shrink_nodes_size];

941 MPI_Irecv(dst_addr, type_size, MPI_BYTE, src_rank, _XMP_N_MPI_TAG_GMOVE, *exec_comm, &gmove_request);

945 for (int i = 0; i < src_shrink_nodes_size; i++) {

946 if (src_ranks[i] == exec_rank) {

947 if ((dst_shrink_nodes_size == src_shrink_nodes_size) ||

948 (dst_shrink_nodes_size < src_shrink_nodes_size)) {

949 if (i < dst_shrink_nodes_size) {

954 MPI_Request *requests = _XMP_alloc(sizeof(MPI_Request) * request_size);

956 int request_count = 0;

957 for (int j = i; j < dst_shrink_nodes_size; j += src_shrink_nodes_size) {

958 MPI_Isend(src_addr, type_size, MPI_BYTE, dst_ranks[j], _XMP_N_MPI_TAG_GMOVE, *exec_comm, requests + request_count);

962 MPI_Waitall(request_size, requests, MPI_STATUSES_IGNORE);

969 MPI_Wait(&gmove_request, MPI_STATUS_IGNORE);
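`_XMP_gmove_SENDRECV_GSCALAR` and `_XMP_sendrecv_ARRAY` pair destination and source ranks the same way. If there are at least as many sources as destinations, destination i receives from source i. Otherwise it receives from source `i % src_shrink_nodes_size`, and each source i sends to destinations i, i + src_n, i + 2*src_n, and so on. The sketch checks, without MPI, that the receive side and the send side of this schedule agree, so every `MPI_Irecv` has exactly one matching send. All function names are hypothetical.

```c
#include <assert.h>

/* Source index that destination index i receives from. */
static int recv_from(int i, int dst_n, int src_n)
{
  return (dst_n <= src_n) ? i : i % src_n;
}

/* Destinations that source index i sends to; returns how many. */
static int sends_from(int i, int dst_n, int src_n, int *targets)
{
  int k = 0;
  if (dst_n <= src_n) {
    if (i < dst_n)
      targets[k++] = i;            /* extra sources stay idle */
  } else {
    for (int j = i; j < dst_n; j += src_n)
      targets[k++] = j;            /* round-robin fan-out */
  }
  return k;
}

/* 1 if every destination is targeted exactly once, by the source it
   expects to receive from. */
static int schedule_matches(int dst_n, int src_n)
{
  int hits[64] = {0};
  int targets[64];
  assert(dst_n <= 64 && src_n > 0);
  for (int i = 0; i < src_n; i++) {
    int k = sends_from(i, dst_n, src_n, targets);
    for (int t = 0; t < k; t++) {
      if (recv_from(targets[t], dst_n, src_n) != i)
        return 0;
      hits[targets[t]]++;
    }
  }
  for (int j = 0; j < dst_n; j++)
    if (hits[j] != 1)
      return 0;
  return 1;
}
```

With 7 destinations and 3 sources, source 0 serves destinations 0, 3, and 6, so only the sources with more than one target need the `MPI_Isend` plus `MPI_Waitall` path.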

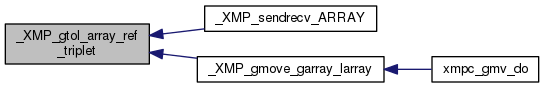

◆ _XMP_gtol_array_ref_triplet()

`void _XMP_gtol_array_ref_triplet(_XMP_array_t *array, int dim_index, int *lower, int *upper, int *stride)`

127 int t_lower = *lower - align_subscript,

128 t_upper = *upper - align_subscript,

153 t_stride = t_stride / template_par_nodes_size;

162 _XMP_fatal("wrong distribute manner for normal shadow");

165 *lower = t_lower + align_subscript;

166 *upper = t_upper + align_subscript;

182 *lower += shadow_size_lo;

183 *upper += shadow_size_lo;

190 _XMP_fatal("gmove does not support shadow region for cyclic or block-cyclic distribution");

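Line 153 of `_XMP_gtol_array_ref_triplet` divides the template stride by the number of nodes. The sketch shows why that works for a cyclic distribution when the stride is a multiple of the node count. In that case every selected element lives on one node, and the `_XMP_SM_GTOL_CYCLIC` arithmetic maps them to local indices spaced `s / P` apart. The clamping of `u`, the restriction `s % P == 0`, the assumption `l >= m`, and the function name are all choices made for this example, not documented runtime behavior.

```c
#include <assert.h>

/* Hypothetical sketch: map a global triplet l:u:s on a cyclically distributed
   template dimension (lower bound m, P nodes) to local indices, assuming
   l >= m, s > 0, l <= u, and s a multiple of P so all elements share a node.
   Returns -1 when those preconditions on s and the bounds do not hold. */
static int cyclic_gtol_triplet(int m, int P, int *l, int *u, int *s)
{
  if (*s <= 0 || *l > *u || *s % P != 0)
    return -1;
  *u = *l + ((*u - *l) / *s) * *s;  /* clamp u onto the last selected element */
  *l = (*l - m) / P;                /* same arithmetic as _XMP_SM_GTOL_CYCLIC */
  *u = (*u - m) / P;
  *s = *s / P;                      /* cf. t_stride / template_par_nodes_size */
  return 0;
}
```

Clamping `u` first matters. Without it, global 3:4:2 over 2 nodes (a single element) would become local 1:2:1, which selects two elements.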
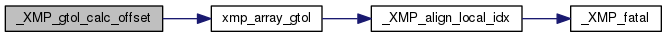

◆ _XMP_gtol_calc_offset()

`unsigned long long _XMP_gtol_calc_offset(_XMP_array_t *a, int g_idx[])`

2995 for(int i=0;i<ndims;i++)

3000 unsigned long long offset = 0;

3002 for (int i = 0; i < a->dim; i++){

◆ _XMP_sendrecv_ARRAY()

`void _XMP_sendrecv_ARRAY(int type, int type_size, MPI_Datatype *mpi_datatype, _XMP_array_t *dst_array, int *dst_array_nodes_ref, int *dst_lower, int *dst_upper, int *dst_stride, unsigned long long *dst_dim_acc, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_lower, int *src_upper, int *src_stride, unsigned long long *src_dim_acc)`

487 int dst_dim = dst_array->dim;

488 int src_dim = src_array->dim;

494 MPI_Comm *exec_comm = exec_nodes->comm;

499 int dst_ranks[dst_shrink_nodes_size];

500 if (dst_shrink_nodes_size == 1) {

506 unsigned long long total_elmts = 1;

507 for (int i = 0; i < dst_dim; i++) {

514 int src_ranks[src_shrink_nodes_size];

515 if (src_shrink_nodes_size == 1) {

521 unsigned long long src_total_elmts = 1;

522 for (int i = 0; i < src_dim; i++) {

526 if (total_elmts != src_total_elmts) {

527 _XMP_fatal("wrong assign statement for gmove");

531 void *recv_buffer = NULL;

532 void *recv_alloc = NULL;

534 MPI_Request gmove_request;

535 for (int i = 0; i < dst_shrink_nodes_size; i++) {

536 if (dst_ranks[i] == exec_rank) {

540 if ((dst_shrink_nodes_size == src_shrink_nodes_size) ||

541 (dst_shrink_nodes_size < src_shrink_nodes_size)) {

542 src_rank = src_ranks[i];

544 src_rank = src_ranks[i % src_shrink_nodes_size];

547 for (int i = 0; i < dst_dim; i++) {

550 if(dst_dim == 1 && dst_stride[0] == 1){

551 recv_buffer = (char*)dst_addr + type_size * dst_lower[0];

553 recv_alloc = _XMP_alloc(total_elmts * type_size);

554 recv_buffer = recv_alloc;

556 MPI_Irecv(recv_buffer, total_elmts, *mpi_datatype, src_rank, _XMP_N_MPI_TAG_GMOVE, *exec_comm, &gmove_request);

562 for (int i = 0; i < src_shrink_nodes_size; i++) {

563 if (src_ranks[i] == exec_rank) {

564 void *send_buffer = NULL;

565 void *send_alloc = NULL;

566 for (int j = 0; j < src_dim; j++) {

569 if(src_dim == 1 && src_stride[0] == 1){

570 send_buffer = (char*)src_addr + type_size * src_lower[0];

572 send_alloc = _XMP_alloc(total_elmts * type_size);

573 send_buffer = send_alloc;

574 (*_xmp_pack_array)(send_buffer, src_addr, type, type_size, src_dim, src_lower, src_upper, src_stride, src_dim_acc);

576 if ((dst_shrink_nodes_size == src_shrink_nodes_size) ||

577 (dst_shrink_nodes_size < src_shrink_nodes_size)) {

578 if (i < dst_shrink_nodes_size) {

579 MPI_Send(send_buffer, total_elmts, *mpi_datatype, dst_ranks[i], _XMP_N_MPI_TAG_GMOVE, *exec_comm);

584 MPI_Request *requests = _XMP_alloc(sizeof(MPI_Request) * request_size);

586 int request_count = 0;

587 for (int j = i; j < dst_shrink_nodes_size; j += src_shrink_nodes_size) {

588 MPI_Isend(send_buffer, total_elmts, *mpi_datatype, dst_ranks[j], _XMP_N_MPI_TAG_GMOVE, *exec_comm,

589 requests + request_count);

594 MPI_Waitall(request_size, requests, MPI_STATUSES_IGNORE);

604 MPI_Wait(&gmove_request, MPI_STATUS_IGNORE);

605 if(! (dst_dim == 1 && dst_stride[0] == 1)){

606 (*_xmp_unpack_array)(dst_addr, recv_buffer, type, type_size, dst_dim, dst_lower, dst_upper, dst_stride, dst_dim_acc);

◆ xmp_calc_gmove_array_owner_linear_rank_scalar_()

`int xmp_calc_gmove_array_owner_linear_rank_scalar_(_XMP_array_t **a, int *ref_index)`

229 int array_nodes_dim = array_nodes->dim;

230 int rank_array[array_nodes_dim];

◆ _alloc_size

◆ _dim_alloc_size

◆ _XMP_pack_comm_set

◆ _XMP_unpack_comm_set

◆ gmv_nodes

◆ n_gmv_nodes

- `int _XMPC_running` (definition: xmp_runtime.c:15)
- `long long align_subscript` (definition: xmp_data_struct.h:246)
- `int _XMP_calc_template_par_triplet(_XMP_template_t *template, int template_index, int nodes_rank, int *template_lower, int *template_upper, int *template_stride)` (definition: xmp_template.c:667)
- `long long par_upper` (definition: xmp_data_struct.h:81)
- `_XMP_nodes_t * array_nodes` (definition: xmp_data_struct.h:306)
- `int size` (definition: xmp_data_struct.h:32)
- `_XMP_template_info_t info[1]` (definition: xmp_data_struct.h:115)
- `int align_template_index` (definition: xmp_data_struct.h:260)
- `#define _XMP_N_DIST_BLOCK` (definition: xmp_constant.h:29)
- `void _XMP_gmove_garray_scalar(_XMP_gmv_desc_t *gmv_desc_leftp, void *scalar, int mode)` (definition: xmp_gmove.c:4732)
- `long long ser_lower` (definition: xmp_data_struct.h:72)
- `_Bool xmp_is_async()` (definition: xmp_async.c:20)
- `int is_member` (definition: xmp_data_struct.h:46)
- `void * _XMP_alloc(size_t size)` (definition: xmp_util.c:21)
- `int * lb` (definition: xmp_data_struct.h:398)
- `#define _XMP_N_NO_ONTO_NODES` (definition: xmp_constant.h:24)
- `int * st` (definition: xmp_data_struct.h:400)
- `_XMP_template_chunk_t * chunk` (definition: xmp_data_struct.h:112)
- `int _XMP_calc_nodes_index_from_inherit_nodes_index(_XMP_nodes_t *nodes, int inherit_nodes_index)` (definition: xmp_nodes.c:1309)
- `#define _XMP_N_GMOVE_NORMAL` (definition: xmp_constant.h:69)
- `int _XMP_calc_linear_rank(_XMP_nodes_t *n, int *rank_array)` (definition: xmp_nodes.c:1035)
- `int * ub` (definition: xmp_data_struct.h:399)
- `int _XMP_calc_gmove_array_owner_linear_rank_SCALAR(_XMP_array_t *array, int *ref_index)` (definition: xmp_gmove.c:216)
- `#define XMP_N_GMOVE_INDEX` (definition: xmp_constant.h:128)
- `long long par_lower` (definition: xmp_data_struct.h:80)
- `void _XMP_translate_nodes_rank_array_to_ranks(_XMP_nodes_t *nodes, int *ranks, int *rank_array, int shrink_nodes_size)` (definition: xmp_nodes.c:1270)
- `void * _XMP_get_array_addr(_XMP_array_t *a, int *gidx)` (definition: xmp_gmove.c:47)

Definition: xmp_data_struct.h:386

int shadow_type

Definition: xmp_data_struct.h:248

int ser_size

Definition: xmp_data_struct.h:201

int * a_ub

Definition: xmp_data_struct.h:395

#define _XMP_N_GMOVE_OUT

Definition: xmp_constant.h:71

_XMP_nodes_info_t * onto_nodes_info

Definition: xmp_data_struct.h:94

int _XMP_check_template_ref_inclusion(int ref_lower, int ref_upper, int ref_stride, _XMP_template_t *t, int index)

Definition: xmp_template.c:243

int shrink_nodes_size

Definition: xmp_data_struct.h:66

int _XMP_calc_global_index_HOMECOPY(_XMP_array_t *dst_array, int dst_dim_index, int *dst_l, int *dst_u, int *dst_s, int *src_l, int *src_u, int *src_s)

Definition: xmp_gmove.c:404

int _XMP_calc_linear_rank_on_target_nodes(_XMP_nodes_t *n, int *rank_array, _XMP_nodes_t *target_nodes)

Definition: xmp_nodes.c:1049

#define _XMP_SM_GTOL_CYCLIC(_i, _m, _P)

Definition: xmp_gmove.c:16

void _XMP_gmove_array_array_common(_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int mode)

Definition: xmp_gmove.c:2036

Definition: xmp_data_struct.h:70

int comm_rank

Definition: xmp_data_struct.h:52

unsigned long long par_chunk_width

Definition: xmp_data_struct.h:86

#define _XMP_SM_GTOL_BLOCK_CYCLIC(_b, _i, _m, _P)

Definition: xmp_gmove.c:19

int xmp_array_gtol(xmp_desc_t d, int dim, int g_idx, int *lidx)

Definition: xmp_lib.c:234

#define _XMP_N_MPI_TAG_GMOVE

Definition: xmp_constant.h:10

void _XMP_calc_gmove_rank_array_SCALAR(_XMP_array_t *array, int *ref_index, int *rank_array)

Definition: xmp_gmove.c:197

#define _XMP_N_GMOVE_IN

Definition: xmp_constant.h:70

int shadow_size_lo

Definition: xmp_data_struct.h:249

void _XMP_gmove_localcopy_ARRAY(int type, int type_size, void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d)

Definition: xmp_gmove.c:322

_XMP_template_t * align_template

Definition: xmp_data_struct.h:312

int * ref

Definition: xmp_data_struct.h:65

int * kind

Definition: xmp_data_struct.h:397

int align_manner

Definition: xmp_data_struct.h:197

#define _XMP_IS_SINGLE

Definition: xmp_internal.h:50

#define _XMP_N_INT_FALSE

Definition: xmp_constant.h:5

#define _XMP_N_DIST_CYCLIC

Definition: xmp_constant.h:30

Definition: xmp_data_struct.h:98

#define _XMP_N_SHADOW_FULL

Definition: xmp_constant.h:66

Definition: xmp_data_struct.h:63

#define _XMP_SM_GTOL_BLOCK(_i, _m, _w)

Definition: xmp_gmove.c:13

int ser_lower

Definition: xmp_data_struct.h:199

_XMP_nodes_ref_t * _XMP_create_nodes_ref_for_target_nodes(_XMP_nodes_t *n, int *rank_array, _XMP_nodes_t *target_nodes)

Definition: xmp_nodes.c:1234

Definition: xmp_data_struct.h:78

int onto_nodes_index

Definition: xmp_data_struct.h:92

#define _XMP_N_DIST_BLOCK_CYCLIC

Definition: xmp_constant.h:31

#define _XMP_N_DIST_DUPLICATION

Definition: xmp_constant.h:28

void * local_data

Definition: xmp_data_struct.h:393

Definition: xmp_data_struct.h:266

#define _XMP_N_NO_ALIGN_TEMPLATE

Definition: xmp_constant.h:23

int par_stride

Definition: xmp_data_struct.h:85

int ndims

Definition: xmp_data_struct.h:389

int dist_manner

Definition: xmp_data_struct.h:87

#define _XMP_N_COARRAY_PUT

Definition: xmp_constant.h:112

size_t type_size

Definition: xmp_data_struct.h:274

#define _XMP_N_ALIGN_CYCLIC

Definition: xmp_constant.h:38

#define _XMP_N_ALIGN_BLOCK

Definition: xmp_constant.h:37

#define _XMP_N_ALIGN_DUPLICATION

Definition: xmp_constant.h:36

_XMP_array_info_t info[1]

Definition: xmp_data_struct.h:313

void _XMP_free(void *p)

Definition: xmp_util.c:37

#define _XMP_ASSERT(_flag)

Definition: xmp_internal.h:34

_Bool is_global

Definition: xmp_data_struct.h:388

void _XMP_finalize_nodes_ref(_XMP_nodes_ref_t *nodes_ref)

Definition: xmp_nodes.c:1228

#define _XMP_N_ALIGN_NOT_ALIGNED

Definition: xmp_constant.h:35

int _XMP_check_gmove_array_ref_inclusion_SCALAR(_XMP_array_t *array, int array_index, int ref_index)

Definition: xmp_gmove.c:309

void * array_addr_p

Definition: xmp_data_struct.h:279

#define _XMP_N_ALIGN_BLOCK_CYCLIC

Definition: xmp_constant.h:39

_XMP_comm_t * comm

Definition: xmp_data_struct.h:53

int par_stride

Definition: xmp_data_struct.h:206

int dim

Definition: xmp_data_struct.h:272

void _XMP_fatal(char *msg)

Definition: xmp_util.c:42

#define XMP_N_GMOVE_RANGE

Definition: xmp_constant.h:129

_XMP_array_t * a_desc

Definition: xmp_data_struct.h:391

unsigned long long dim_acc

Definition: xmp_data_struct.h:242

int par_size

Definition: xmp_data_struct.h:207

unsigned long long par_width

Definition: xmp_data_struct.h:82

#define _XMP_N_COARRAY_GET

Definition: xmp_constant.h:111

_XMP_nodes_t * nodes

Definition: xmp_data_struct.h:64

void _XMP_gmove_SENDRECV_GSCALAR(void *dst_addr, void *src_addr, _XMP_array_t *dst_array, _XMP_array_t *src_array, int dst_ref_index[], int src_ref_index[])

Definition: xmp_gmove.c:889

int dim

Definition: xmp_data_struct.h:47

Definition: xmp_data_struct.h:40

#define _XMP_M_COUNT_TRIPLETi(l_, u_, s_)

Definition: xmp_gpu_func.hpp:25

#define _XMP_N_MAX_DIM

Definition: xmp_constant.h:6

int _XMP_calc_template_owner_SCALAR(_XMP_template_t *ref_template, int dim_index, long long ref_index)

Definition: xmp_template.c:632

unsigned long long _XMP_gtol_calc_offset(_XMP_array_t *a, int g_idx[])

Definition: xmp_gmove.c:2991

void _XMP_gmove_BCAST_GSCALAR(void *dst_addr, _XMP_array_t *array, int ref_index[])

Definition: xmp_gmove.c:687

#define _XMP_N_SHADOW_NORMAL

Definition: xmp_constant.h:65

void _XMP_gmove_inout_scalar(void *scalar, _XMP_gmv_desc_t *gmv_desc, int rdma_type)

#define _XMP_N_SHADOW_NONE

Definition: xmp_constant.h:64

int _XMPF_running

Definition: xmp_runtime.c:16

int type

Definition: xmp_data_struct.h:273

#define _XMP_N_INT_TRUE

Definition: xmp_constant.h:4

#define _XMP_N_ALIGN_GBLOCK

Definition: xmp_constant.h:40

void _XMP_normalize_array_section(int *lower, int *upper, int *stride)

long long * mapping_array

Definition: xmp_data_struct.h:88

_Bool is_allocated

Definition: xmp_data_struct.h:270

void _XMP_gtol_array_ref_triplet(_XMP_array_t *array, int dim_index, int *lower, int *upper, int *stride)

Definition: xmp_gmove.c:114

void * _XMP_get_execution_nodes(void)

Definition: xmp_nodes_stack.c:46

Definition: xmp_data_struct.h:439

int par_lower

Definition: xmp_data_struct.h:204

int * a_lb

Definition: xmp_data_struct.h:394