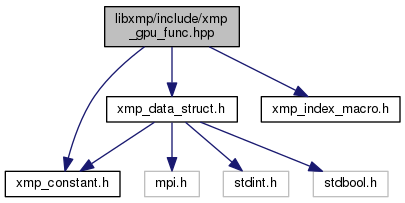

Include dependency graph for xmp_gpu_func.hpp:


Macros

| #define | _XMP_GPU_M_GTOL(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].gtol) |

| #define | _XMP_GPU_M_ACC(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].acc) |

| #define | _XMP_M_CEILi(a_, b_) (((a_) % (b_)) == 0 ? ((a_) / (b_)) : ((a_) / (b_)) + 1) |

| #define | _XMP_M_FLOORi(a_, b_) ((a_) / (b_)) |

| #define | _XMP_M_COUNT_TRIPLETi(l_, u_, s_) (_XMP_M_FLOORi(((u_) - (l_)), s_) + 1) |

| #define | _XMP_GPU_M_BARRIER_THREADS() __syncthreads() |

| #define | _XMP_GPU_M_BARRIER_KERNEL() cudaThreadSynchronize() |

| #define | _XMP_GPU_M_GET_ARRAY_GTOL(_gtol, _desc, _dim) _gtol = _XMP_GPU_M_GTOL(_desc, _dim) |

| #define | _XMP_GPU_M_GET_ARRAY_ACC(_acc, _desc, _dim) _acc = _XMP_GPU_M_ACC(_desc, _dim) |

| #define | _XMP_gpu_calc_iter_MAP_THREADS_1(_l0, _u0, _s0, _i0) |

| #define | _XMP_gpu_calc_iter_MAP_THREADS_2(_l0, _u0, _s0, _l1, _u1, _s1, _i0, _i1) |

| #define | _XMP_gpu_calc_iter_MAP_THREADS_3(_l0, _u0, _s0, _l1, _u1, _s1, _l2, _u2, _s2, _i0, _i1, _i2) |

| #define | _XMP_GPU_M_CALC_CONFIG_PARAMS(_x, _y, _z) |

Functions

| void | _XMP_fatal (char *msg) |

| template<typename T> __device__ void | _XMP_gpu_calc_thread_id (T *index) |

| template<typename T> __device__ void | _XMP_gpu_calc_iter (unsigned long long tid, T lower0, T upper0, T stride0, T *iter0) |

| template<typename T> __device__ void | _XMP_gpu_calc_iter (unsigned long long tid, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T *iter0, T *iter1) |

| template<typename T> __device__ void | _XMP_gpu_calc_iter (unsigned long long tid, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2, T *iter0, T *iter1, T *iter2) |

| template<typename T> void | _XMP_gpu_calc_config_params (unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0) |

| template<typename T> void | _XMP_gpu_calc_config_params (unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1) |

| template<typename T> void | _XMP_gpu_calc_config_params (unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2) |

| template<typename T> void | _XMP_gpu_calc_config_params_MAP_THREADS (int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, T lower0, T upper0, T stride0) |

| template<typename T> void | _XMP_gpu_calc_config_params_MAP_THREADS (int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, int thread_y_v, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1) |

| template<typename T> void | _XMP_gpu_calc_config_params_MAP_THREADS (int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, int thread_y_v, int thread_z_v, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2) |

Variables

| int | _XMP_gpu_max_thread |

| int | _XMP_gpu_max_block_dim_x |

| int | _XMP_gpu_max_block_dim_y |

| int | _XMP_gpu_max_block_dim_z |

Macro Definition Documentation

◆ _XMP_gpu_calc_iter_MAP_THREADS_1

| #define _XMP_gpu_calc_iter_MAP_THREADS_1(_l0, _u0, _s0, _i0) |

Value:

{ \

if ((blockIdx.x * blockDim.x + threadIdx.x) >= _XMP_M_COUNT_TRIPLETi(_l0, (_u0 - 1), _s0)) return; \

\

_i0 = _l0 + ((blockIdx.x * blockDim.x + threadIdx.x) * _s0); \

}
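The mapping performed by this macro can be tried out on the host. The following is a minimal sketch, not the library's code: `map_thread_1d` and `run_grid_1d` are hypothetical names, and the CUDA built-ins `blockIdx.x`, `blockDim.x` and `threadIdx.x` are replaced by ordinary function arguments. It assumes a positive stride, with `_u0` treated as an exclusive upper bound, which is what passing `(_u0 - 1)` to `_XMP_M_COUNT_TRIPLETi` implies.

```cpp
#include <cassert>
#include <vector>

// Hypothetical host-side stand-in for the macro body. Returns false where the
// macro would `return` from the kernel; otherwise stores the loop value in *i0.
template <typename T>
bool map_thread_1d(unsigned block_idx, unsigned block_dim, unsigned thread_idx,
                   T l0, T u0, T s0, T *i0) {
  // Global thread index, as in blockIdx.x * blockDim.x + threadIdx.x.
  const unsigned long long gid =
      (unsigned long long)block_idx * block_dim + thread_idx;
  // Same value as _XMP_M_COUNT_TRIPLETi(l0, (u0 - 1), s0).
  const long long count = (long long)((u0 - 1) - l0) / s0 + 1;
  // The `count <= 0` guard is an addition for this sketch (empty ranges).
  if (count <= 0 || gid >= (unsigned long long)count) return false;
  *i0 = l0 + (T)gid * s0;
  return true;
}

// Collect every loop value produced by a launch of `blocks` x `threads`.
template <typename T>
std::vector<T> run_grid_1d(unsigned blocks, unsigned threads, T l0, T u0, T s0) {
  std::vector<T> out;
  for (unsigned b = 0; b < blocks; ++b) {
    for (unsigned t = 0; t < threads; ++t) {
      T i = T();
      if (map_thread_1d(b, threads, t, l0, u0, s0, &i)) out.push_back(i);
    }
  }
  return out;
}
```

For the loop `for (i = 0; i < 10; i += 3)` launched as 2 blocks of 4 threads, only the first four global indices produce a value (0, 3, 6, 9); the remaining four threads exit early.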

◆ _XMP_gpu_calc_iter_MAP_THREADS_2

| #define _XMP_gpu_calc_iter_MAP_THREADS_2(_l0, _u0, _s0, _l1, _u1, _s1, _i0, _i1) |

Value:

{ \

if ((blockIdx.x * blockDim.x + threadIdx.x) >= _XMP_M_COUNT_TRIPLETi(_l0, (_u0 - 1), _s0)) return; \

if ((blockIdx.y * blockDim.y + threadIdx.y) >= _XMP_M_COUNT_TRIPLETi(_l1, (_u1 - 1), _s1)) return; \

\

_i0 = _l0 + ((blockIdx.x * blockDim.x + threadIdx.x) * _s0); \

_i1 = _l1 + ((blockIdx.y * blockDim.y + threadIdx.y) * _s1); \

}

◆ _XMP_gpu_calc_iter_MAP_THREADS_3

| #define _XMP_gpu_calc_iter_MAP_THREADS_3(_l0, _u0, _s0, _l1, _u1, _s1, _l2, _u2, _s2, _i0, _i1, _i2) |

Value:

{ \

if ((blockIdx.x * blockDim.x + threadIdx.x) >= _XMP_M_COUNT_TRIPLETi(_l0, (_u0 - 1), _s0)) return; \

if ((blockIdx.y * blockDim.y + threadIdx.y) >= _XMP_M_COUNT_TRIPLETi(_l1, (_u1 - 1), _s1)) return; \

if ((blockIdx.z * blockDim.z + threadIdx.z) >= _XMP_M_COUNT_TRIPLETi(_l2, (_u2 - 1), _s2)) return; \

\

_i0 = _l0 + ((blockIdx.x * blockDim.x + threadIdx.x) * _s0); \

_i1 = _l1 + ((blockIdx.y * blockDim.y + threadIdx.y) * _s1); \

_i2 = _l2 + ((blockIdx.z * blockDim.z + threadIdx.z) * _s2); \

}
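In the three-dimensional form each axis is bounds-checked independently, so a thread does work only if its x, y and z indices all fall inside their respective iteration counts. The sketch below emulates this on the host under the same assumptions as the macro body (positive strides, exclusive upper bounds). `Idx3`, `count_iter`, `map_thread_3d` and `active_threads` are hypothetical names introduced for illustration; `Idx3` stands in for CUDA's built-in index types.

```cpp
#include <cassert>

// Hypothetical stand-in for blockIdx / blockDim / threadIdx.
struct Idx3 { unsigned x, y, z; };

// Iteration count of for (i = l; i < u; i += s), i.e. the value of
// _XMP_M_COUNT_TRIPLETi(l, (u - 1), s).
static long long count_iter(long long l, long long u, long long s) {
  return ((u - 1) - l) / s + 1;
}

// Mirrors the macro body: returns false where the kernel would return early,
// otherwise writes the three loop values into i[0..2].
static bool map_thread_3d(Idx3 block, Idx3 dim, Idx3 thread,
                          const long long l[3], const long long u[3],
                          const long long s[3], long long i[3]) {
  const unsigned long long gid[3] = {
      (unsigned long long)block.x * dim.x + thread.x,
      (unsigned long long)block.y * dim.y + thread.y,
      (unsigned long long)block.z * dim.z + thread.z};
  for (int d = 0; d < 3; ++d) {
    const long long n = count_iter(l[d], u[d], s[d]);
    // The `n <= 0` guard is an addition for this sketch (empty ranges).
    if (n <= 0 || gid[d] >= (unsigned long long)n) return false;
  }
  for (int d = 0; d < 3; ++d) i[d] = l[d] + (long long)gid[d] * s[d];
  return true;
}

// Count how many threads of a single-block launch pass all three checks.
static int active_threads(Idx3 dim, const long long l[3], const long long u[3],
                          const long long s[3]) {
  int active = 0;
  long long i[3];
  const Idx3 block = {0, 0, 0};
  for (unsigned z = 0; z < dim.z; ++z)
    for (unsigned y = 0; y < dim.y; ++y)
      for (unsigned x = 0; x < dim.x; ++x) {
        const Idx3 thread = {x, y, z};
        if (map_thread_3d(block, dim, thread, l, u, s, i)) ++active;
      }
  return active;
}
```

With bounds `[0, 5)` step 2, `[0, 3)` step 1 and `[0, 2)` step 1, the per-axis counts are 3, 3 and 2, so a 4 x 4 x 4 block has 18 active threads out of 64.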

◆ _XMP_GPU_M_ACC

| #define _XMP_GPU_M_ACC(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].acc) |

◆ _XMP_GPU_M_BARRIER_KERNEL

| #define _XMP_GPU_M_BARRIER_KERNEL() cudaThreadSynchronize() |

◆ _XMP_GPU_M_BARRIER_THREADS

| #define _XMP_GPU_M_BARRIER_THREADS() __syncthreads() |

◆ _XMP_GPU_M_CALC_CONFIG_PARAMS

| #define _XMP_GPU_M_CALC_CONFIG_PARAMS(_x, _y, _z) |

◆ _XMP_GPU_M_GET_ARRAY_ACC

| #define _XMP_GPU_M_GET_ARRAY_ACC(_acc, _desc, _dim) _acc = _XMP_GPU_M_ACC(_desc, _dim) |

◆ _XMP_GPU_M_GET_ARRAY_GTOL

| #define _XMP_GPU_M_GET_ARRAY_GTOL(_gtol, _desc, _dim) _gtol = _XMP_GPU_M_GTOL(_desc, _dim) |

◆ _XMP_GPU_M_GTOL

| #define _XMP_GPU_M_GTOL(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].gtol) |
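The descriptor macros (`_XMP_GPU_M_GTOL`, `_XMP_GPU_M_ACC` and the two `_GET_ARRAY_` wrappers) treat `_desc` as an array of `_XMP_gpu_array_t` with one element per dimension. The sketch below shows that access pattern on the host. The real `_XMP_gpu_array_t` is not shown on this page, so the struct here is an assumed stand-in: only the member names `gtol` and `acc` come from the macros, and their types are guesses. `read_gtol` and `read_acc` are hypothetical helpers.

```cpp
#include <cassert>

// ASSUMPTION: stand-in for the real _XMP_gpu_array_t. Only the member names
// are taken from the macros; the member types are guessed for illustration.
typedef struct {
  int gtol;
  unsigned long long acc;
} _XMP_gpu_array_t;

// Macro definitions as listed on this page.
#define _XMP_GPU_M_GTOL(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].gtol)
#define _XMP_GPU_M_ACC(_desc, _dim) (((_XMP_gpu_array_t *)_desc)[_dim].acc)
#define _XMP_GPU_M_GET_ARRAY_GTOL(_gtol, _desc, _dim) _gtol = _XMP_GPU_M_GTOL(_desc, _dim)
#define _XMP_GPU_M_GET_ARRAY_ACC(_acc, _desc, _dim) _acc = _XMP_GPU_M_ACC(_desc, _dim)

// Hypothetical helper: read the gtol member for one dimension through an
// untyped descriptor pointer, as the macros do.
inline int read_gtol(void *desc, int dim) {
  int gtol;
  _XMP_GPU_M_GET_ARRAY_GTOL(gtol, desc, dim);
  return gtol;
}

// Hypothetical helper: same pattern for the acc member.
inline unsigned long long read_acc(void *desc, int dim) {
  unsigned long long acc;
  _XMP_GPU_M_GET_ARRAY_ACC(acc, desc, dim);
  return acc;
}
```

Because the `_GET_ARRAY_` macros expand to a bare assignment without a trailing semicolon, the caller supplies the semicolon, as in the helpers above.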

◆ _XMP_M_CEILi

| #define _XMP_M_CEILi(a_, b_) (((a_) % (b_)) == 0 ? ((a_) / (b_)) : ((a_) / (b_)) + 1) |

◆ _XMP_M_COUNT_TRIPLETi

| #define _XMP_M_COUNT_TRIPLETi(l_, u_, s_) (_XMP_M_FLOORi(((u_) - (l_)), s_) + 1) |

◆ _XMP_M_FLOORi

| #define _XMP_M_FLOORi(a_, b_) ((a_) / (b_)) |
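The three integer helpers can be checked with small values. In the sketch below the macro definitions are copied from this page, while `count_half_open` and `blocks_needed` are hypothetical helpers added for illustration. Using `_XMP_M_CEILi` to size a grid is a common CUDA pattern and is shown here as an assumption, not as what `_XMP_gpu_calc_config_params` is confirmed to do.

```cpp
#include <cassert>

// Macro definitions as listed on this page.
#define _XMP_M_CEILi(a_, b_) (((a_) % (b_)) == 0 ? ((a_) / (b_)) : ((a_) / (b_)) + 1)
#define _XMP_M_FLOORi(a_, b_) ((a_) / (b_))
#define _XMP_M_COUNT_TRIPLETi(l_, u_, s_) (_XMP_M_FLOORi(((u_) - (l_)), s_) + 1)

// Hypothetical helper: number of iterations of
//   for (i = lower; i < upper; i += stride)
// obtained by passing (upper - 1) as the inclusive bound, the same way the
// _XMP_gpu_calc_iter_MAP_THREADS_* macros do. Assumes stride > 0.
template <typename T>
long long count_half_open(T lower, T upper, T stride) {
  return _XMP_M_COUNT_TRIPLETi(lower, (upper - 1), stride);
}

// Hypothetical helper: blocks needed to cover `total` iterations with
// `threads_per_block` threads each (round up so no iteration is left out).
inline long long blocks_needed(long long total, long long threads_per_block) {
  return _XMP_M_CEILi(total, threads_per_block);
}
```

Note that `_XMP_M_FLOORi` is plain integer division, which truncates toward zero in C++11 and later, so it matches a mathematical floor only when the quotient is non-negative. `_XMP_M_COUNT_TRIPLETi` counts an inclusive range: `_XMP_M_COUNT_TRIPLETi(0, 9, 3)` covers 0, 3, 6, 9.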

Function Documentation

◆ _XMP_fatal()

| void _XMP_fatal(char *msg) |

◆ _XMP_gpu_calc_config_params() [1/3]

template<typename T >

| void _XMP_gpu_calc_config_params(unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0) |

◆ _XMP_gpu_calc_config_params() [2/3]

template<typename T >

| void _XMP_gpu_calc_config_params(unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1) |

◆ _XMP_gpu_calc_config_params() [3/3]

template<typename T >

| void _XMP_gpu_calc_config_params(unsigned long long *total_iter, int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2) |

◆ _XMP_gpu_calc_config_params_MAP_THREADS() [1/3]

template<typename T >

| void _XMP_gpu_calc_config_params_MAP_THREADS(int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, int thread_y_v, int thread_z_v, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2) |

◆ _XMP_gpu_calc_config_params_MAP_THREADS() [2/3]

template<typename T >

| void _XMP_gpu_calc_config_params_MAP_THREADS(int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, int thread_y_v, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1) |

◆ _XMP_gpu_calc_config_params_MAP_THREADS() [3/3]

template<typename T >

| void _XMP_gpu_calc_config_params_MAP_THREADS(int *block_x, int *block_y, int *block_z, int *thread_x, int *thread_y, int *thread_z, int thread_x_v, T lower0, T upper0, T stride0) |

◆ _XMP_gpu_calc_iter() [1/3]

template<typename T >

| __device__ void _XMP_gpu_calc_iter(unsigned long long tid, T lower0, T upper0, T stride0, T *iter0) |

◆ _XMP_gpu_calc_iter() [2/3]

template<typename T >

| __device__ void _XMP_gpu_calc_iter(unsigned long long tid, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T *iter0, T *iter1) |

◆ _XMP_gpu_calc_iter() [3/3]

template<typename T >

| __device__ void _XMP_gpu_calc_iter(unsigned long long tid, T lower0, T upper0, T stride0, T lower1, T upper1, T stride1, T lower2, T upper2, T stride2, T *iter0, T *iter1, T *iter2) |

◆ _XMP_gpu_calc_thread_id()

template<typename T >

| __device__ void _XMP_gpu_calc_thread_id(T *index) |

Variable Documentation

◆ _XMP_gpu_max_block_dim_x

| int _XMP_gpu_max_block_dim_x |

◆ _XMP_gpu_max_block_dim_y

| int _XMP_gpu_max_block_dim_y |

◆ _XMP_gpu_max_block_dim_z

| int _XMP_gpu_max_block_dim_z |

◆ _XMP_gpu_max_thread

| int _XMP_gpu_max_thread |