libxmp/libxmpf in Omni Compiler 1.3.4

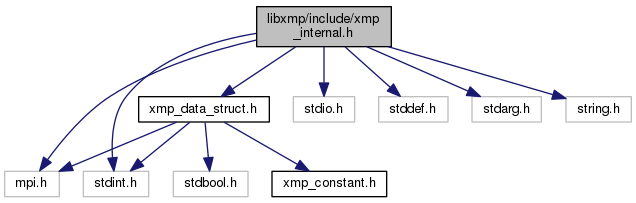

#include <mpi.h>

#include <stdio.h>

#include <stddef.h>

#include <stdarg.h>

#include <stdint.h>

#include <string.h>

#include "xmp_data_struct.h"


| int | xmp_get_ruuning () |

| |

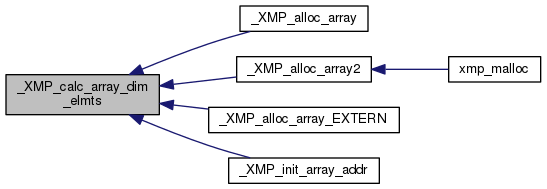

| void | _XMP_calc_array_dim_elmts (_XMP_array_t *array, int array_index) |

| |

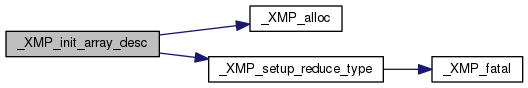

| void | _XMP_init_array_desc (_XMP_array_t **array, _XMP_template_t *template, int dim, int type, size_t type_size,...) |

| |

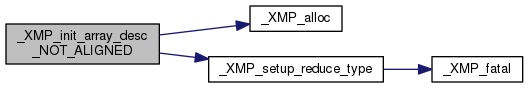

| void | _XMP_init_array_desc_NOT_ALIGNED (_XMP_array_t **adesc, _XMP_template_t *template, int ndims, int type, size_t type_size, unsigned long long *dim_acc, void *ap) |

| |

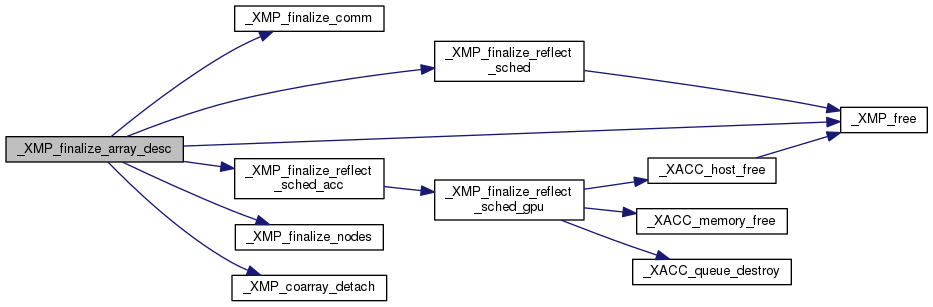

| void | _XMP_finalize_array_desc (_XMP_array_t *array) |

| |

| void | _XMP_align_array_NOT_ALIGNED (_XMP_array_t *array, int array_index) |

| |

| void | _XMP_align_array_DUPLICATION (_XMP_array_t *array, int array_index, int template_index, long long align_subscript) |

| |

| void | _XMP_align_array_BLOCK (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0) |

| |

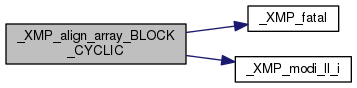

| void | _XMP_align_array_CYCLIC (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0) |

| |

| void | _XMP_align_array_BLOCK_CYCLIC (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0) |

| |

| void | _XMP_align_array_GBLOCK (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0) |

| |

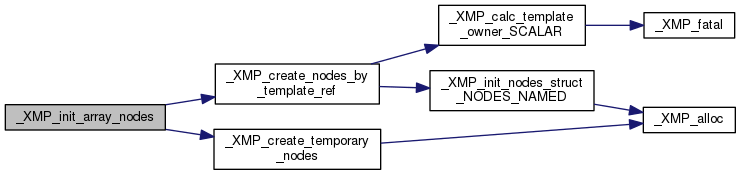

| void | _XMP_init_array_nodes (_XMP_array_t *array) |

| |

| void | _XMP_init_array_comm (_XMP_array_t *array,...) |

| |

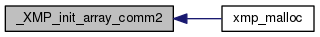

| void | _XMP_init_array_comm2 (_XMP_array_t *array, int args[]) |

| |

| void | _XMP_alloc_array (void **array_addr, _XMP_array_t *array_desc, int is_coarray,...) |

| |

| void | _XMP_alloc_array2 (void **array_addr, _XMP_array_t *array_desc, int is_coarray, unsigned long long *acc[]) |

| |

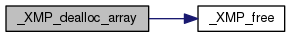

| void | _XMP_dealloc_array (_XMP_array_t *array_desc) |

| |

| void | _XMP_normalize_array_section (_XMP_gmv_desc_t *gmv_desc, int idim, int *lower, int *upper, int *stride) |

| |

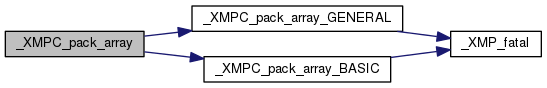

| void | _XMPC_pack_array (void *buffer, void *src, int array_type, size_t array_type_size, int array_dim, int *l, int *u, int *s, unsigned long long *d) |

| |

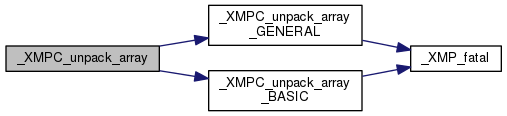

| void | _XMPC_unpack_array (void *dst, void *buffer, int array_type, size_t array_type_size, int array_dim, int *l, int *u, int *s, unsigned long long *d) |

| |

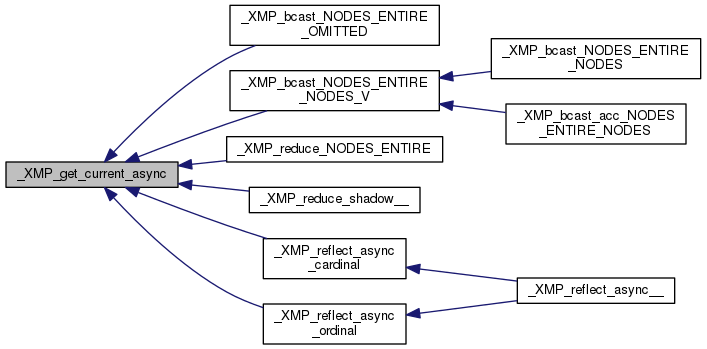

| _XMP_async_comm_t * | _XMP_get_current_async () |

| |

| void | _XMP_initialize_async_comm_tab () |

| |

| void | _XMP_nodes_dealloc_after_wait_async (_XMP_nodes_t *n) |

| |

| void | xmpc_end_async (int) |

| |

| void | _XMP_barrier_NODES_ENTIRE (_XMP_nodes_t *nodes) |

| |

| void | _XMP_barrier_EXEC (void) |

| |

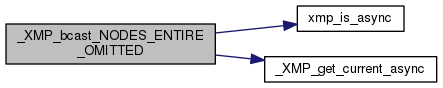

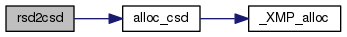

| void | _XMP_bcast_NODES_ENTIRE_OMITTED (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size) |

| |

| void | _XMP_bcast_NODES_ENTIRE_NODES (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size, _XMP_nodes_t *from_nodes,...) |

| |

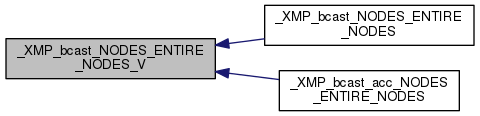

| void | _XMP_bcast_NODES_ENTIRE_NODES_V (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size, _XMP_nodes_t *from_nodes, va_list args) |

| |

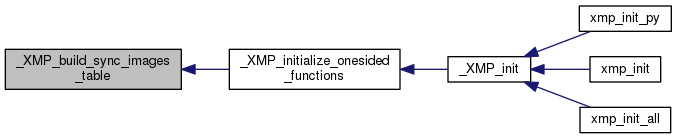

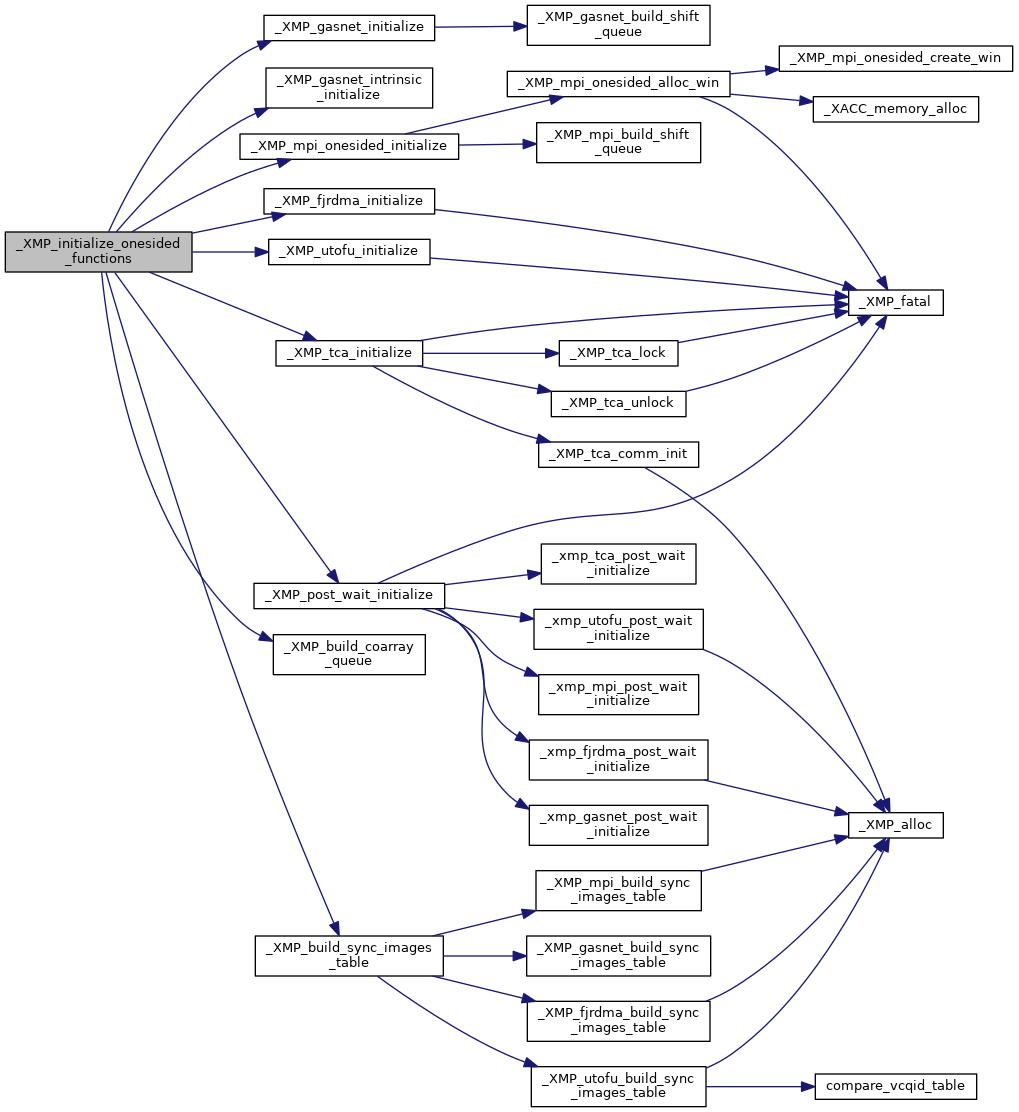

| void | _XMP_initialize_onesided_functions () |

| |

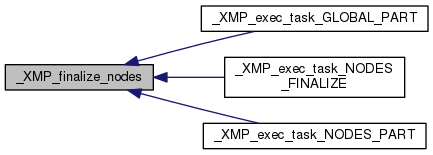

| void | _XMP_finalize_onesided_functions () |

| |

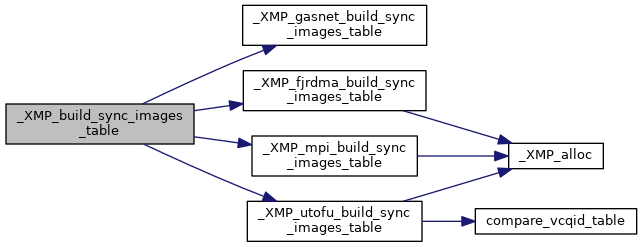

| void | _XMP_build_sync_images_table () |

| | Build table for sync images. |

| |

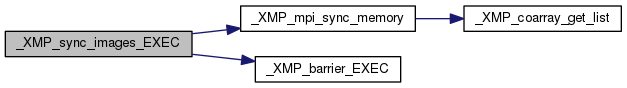

| void | _XMP_sync_images_EXEC (int *status) |

| |

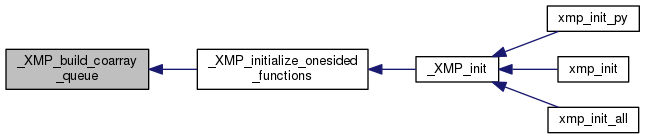

| void | _XMP_build_coarray_queue () |

| | Build queue for coarray. |

| |

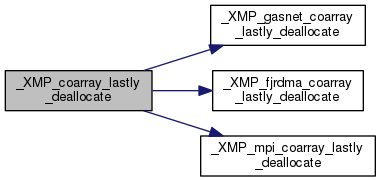

| void | _XMP_coarray_lastly_deallocate () |

| | Deallocate memory space and an object of the last coarray. |

| |

| void | _XMP_mpi_coarray_deallocate (_XMP_coarray_t *, bool is_acc) |

| |

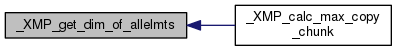

| size_t | _XMP_calc_copy_chunk (const int, const _XMP_array_section_t *) |

| |

| int | _XMP_get_dim_of_allelmts (const int, const _XMP_array_section_t *) |

| |

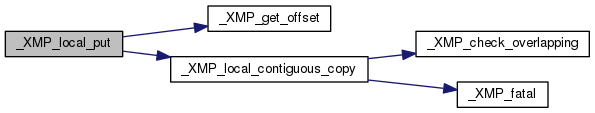

| void | _XMP_local_put (_XMP_coarray_t *, const void *, const int, const int, const int, const int, const _XMP_array_section_t *, const _XMP_array_section_t *, const size_t, const size_t) |

| |

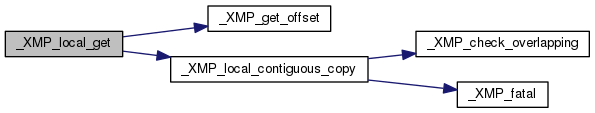

| void | _XMP_local_get (void *, const _XMP_coarray_t *, const int, const int, const int, const int, const _XMP_array_section_t *, const _XMP_array_section_t *, const size_t, const size_t) |

| |

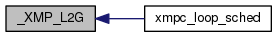

| void | _XMP_L2G (int local_idx, long long int *global_idx, _XMP_template_t *template, int template_index) |

| |

| void | _XMP_G2L (long long int global_idx, int *local_idx, _XMP_template_t *template, int template_index) |

| |

| void | xmpf_transpose (void *dst_p, void *src_p, int opt) |

| |

| void | xmpf_matmul (void *x_p, void *a_p, void *b_p) |

| |

| void | xmpf_pack_mask (void *v_p, void *a_p, void *m_p) |

| |

| void | xmpf_pack_nomask (void *v_p, void *a_p) |

| |

| void | xmpf_pack (void *v_p, void *a_p, void *m_p) |

| |

| void | xmpf_unpack_mask (void *a_p, void *v_p, void *m_p) |

| |

| void | xmpf_unpack_nomask (void *a_p, void *v_p) |

| |

| void | xmpf_unpack (void *a_p, void *v_p, void *m_p) |

| |

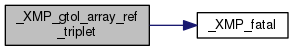

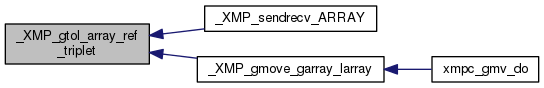

| void | _XMP_gtol_array_ref_triplet (_XMP_array_t *array, int dim_index, int *lower, int *upper, int *stride) |

| |

| int | _XMP_calc_gmove_array_owner_linear_rank_SCALAR (_XMP_array_t *array, int *ref_index) |

| |

| void | _XMP_gmove_bcast_SCALAR (void *dst_addr, void *src_addr, size_t type_size, int root_rank) |

| |

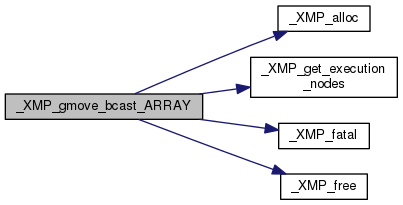

| unsigned long long | _XMP_gmove_bcast_ARRAY (void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int type, size_t type_size, int root_rank) |

| |

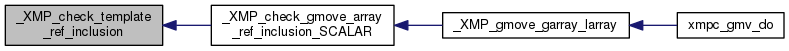

| int | _XMP_check_gmove_array_ref_inclusion_SCALAR (_XMP_array_t *array, int array_index, int ref_index) |

| |

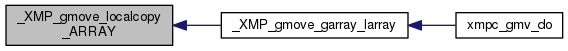

| void | _XMP_gmove_localcopy_ARRAY (int type, int type_size, void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d) |

| |

| int | _XMP_calc_global_index_HOMECOPY (_XMP_array_t *dst_array, int dst_dim_index, int *dst_l, int *dst_u, int *dst_s, int *src_l, int *src_u, int *src_s) |

| |

| int | _XMP_calc_global_index_BCAST (int dst_dim, int *dst_l, int *dst_u, int *dst_s, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_l, int *src_u, int *src_s) |

| |

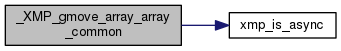

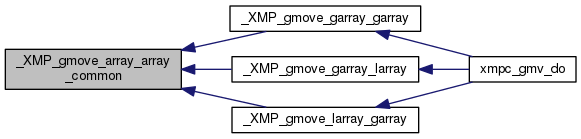

| void | _XMP_gmove_array_array_common (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, int *src_l, int *src_u, int *src_s, unsigned long long *src_d, int mode) |

| |

| void | _XMP_gmove_inout_scalar (void *scalar, _XMP_gmv_desc_t *gmv_desc, int rdma_type) |

| |

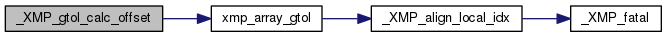

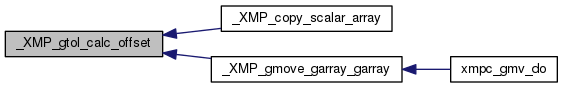

| unsigned long long | _XMP_gtol_calc_offset (_XMP_array_t *a, int g_idx[]) |

| |

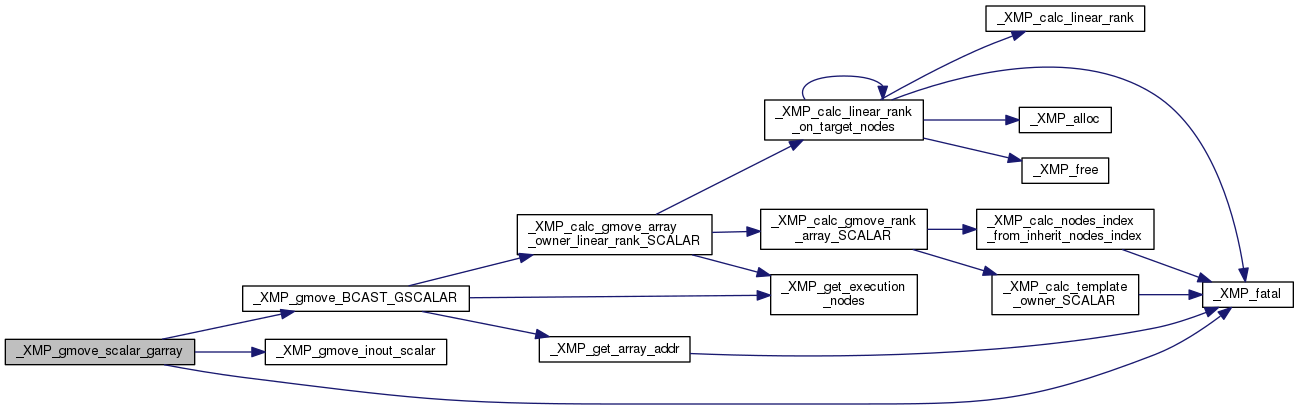

| void | _XMP_gmove_scalar_garray (void *scalar, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

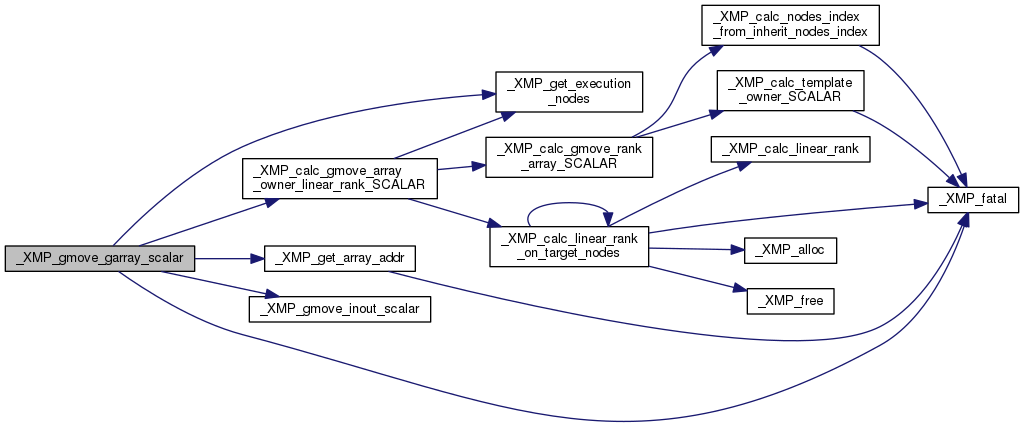

| void | _XMP_gmove_garray_scalar (_XMP_gmv_desc_t *gmv_desc_leftp, void *scalar, int mode) |

| |

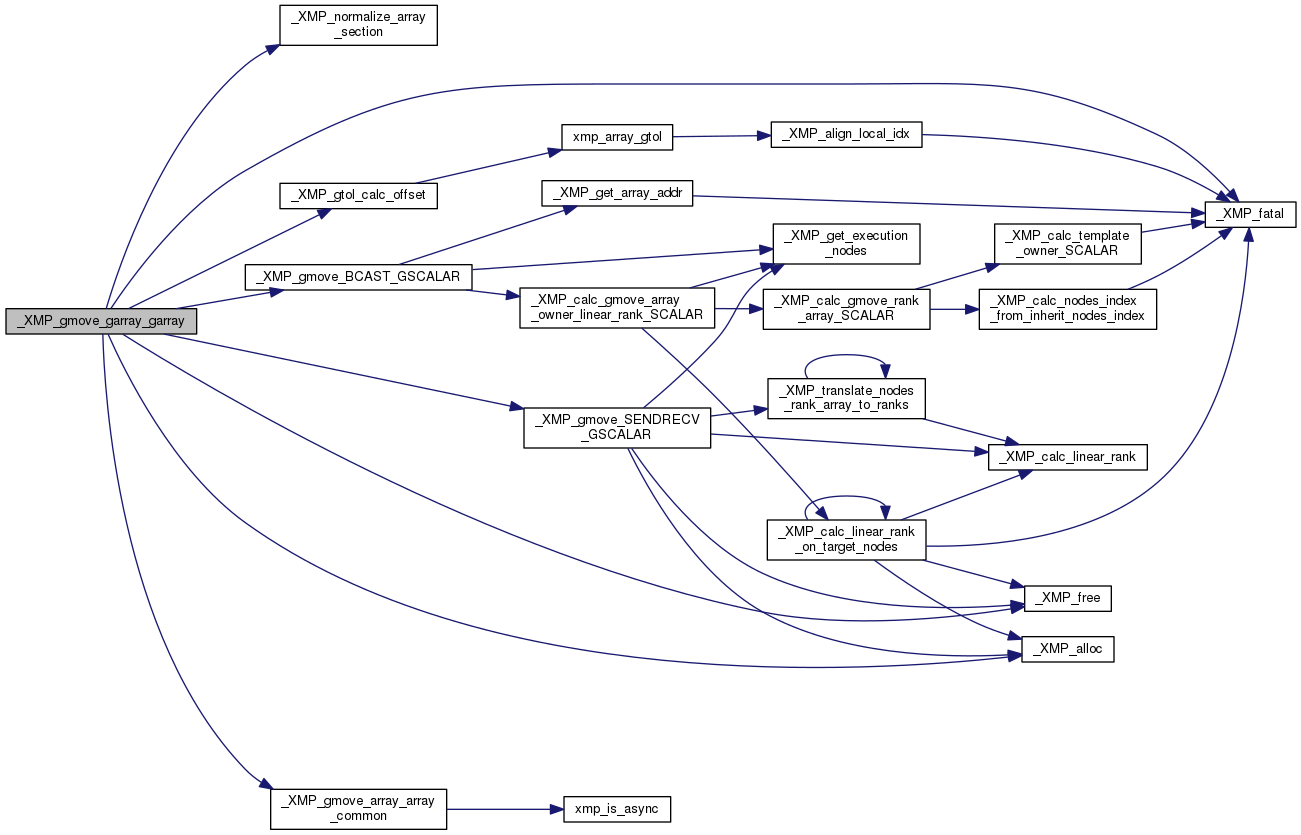

| void | _XMP_gmove_garray_garray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

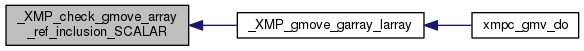

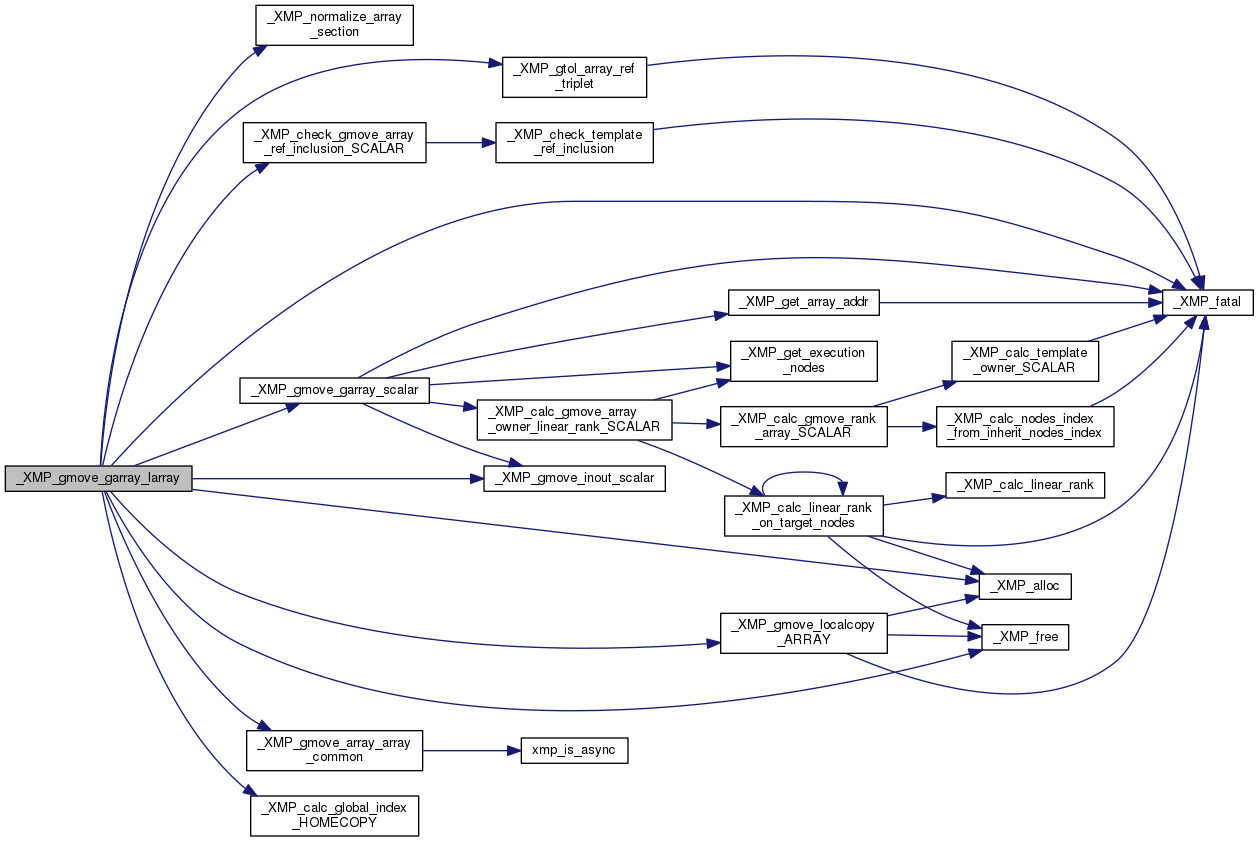

| void | _XMP_gmove_garray_larray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

| void | _XMP_gmove_larray_garray (_XMP_gmv_desc_t *gmv_desc_leftp, _XMP_gmv_desc_t *gmv_desc_rightp, int mode) |

| |

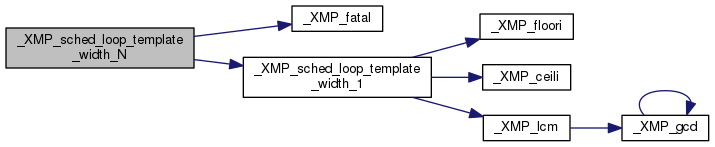

| int | _XMP_sched_loop_template_width_1 (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, int template_lower, int template_upper, int template_stride) |

| |

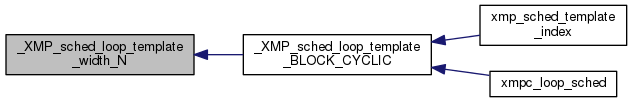

| int | _XMP_sched_loop_template_width_N (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, int template_lower, int template_upper, int template_stride, int width, int template_ser_lower, int template_ser_upper) |

| |

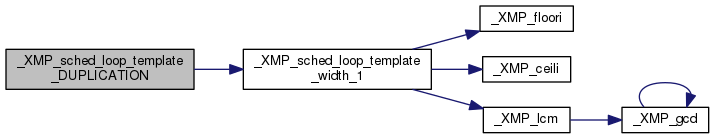

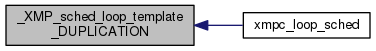

| void | _XMP_sched_loop_template_DUPLICATION (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, _XMP_template_t *template, int template_index) |

| |

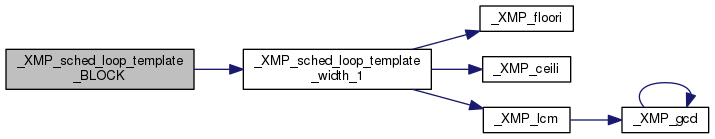

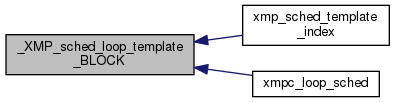

| void | _XMP_sched_loop_template_BLOCK (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, _XMP_template_t *template, int template_index) |

| |

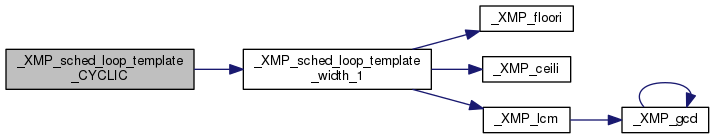

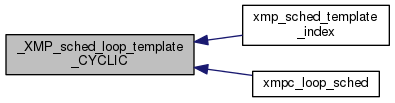

| void | _XMP_sched_loop_template_CYCLIC (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, _XMP_template_t *template, int template_index) |

| |

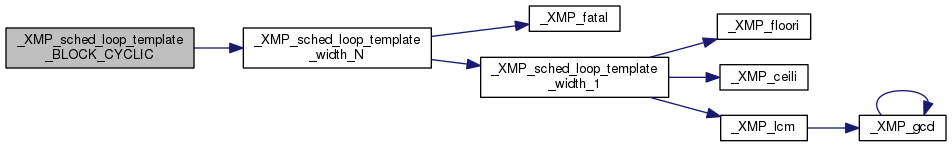

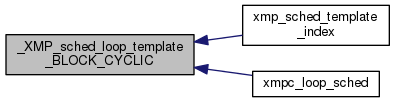

| void | _XMP_sched_loop_template_BLOCK_CYCLIC (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, _XMP_template_t *template, int template_index) |

| |

| void | _XMP_sched_loop_template_GBLOCK (int ser_init, int ser_cond, int ser_step, int *par_init, int *par_cond, int *par_step, _XMP_template_t *template, int template_index) |

| |

| _XMP_nodes_t * | _XMP_create_temporary_nodes (_XMP_nodes_t *n) |

| |

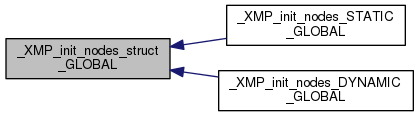

| _XMP_nodes_t * | _XMP_init_nodes_struct_GLOBAL (int dim, int *dim_size, int is_static) |

| |

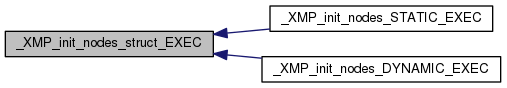

| _XMP_nodes_t * | _XMP_init_nodes_struct_EXEC (int dim, int *dim_size, int is_static) |

| |

| _XMP_nodes_t * | _XMP_init_nodes_struct_NODES_NUMBER (int dim, int ref_lower, int ref_upper, int ref_stride, int *dim_size, int is_static) |

| |

| _XMP_nodes_t * | _XMP_init_nodes_struct_NODES_NAMED (int dim, _XMP_nodes_t *ref_nodes, int *shrink, int *ref_lower, int *ref_upper, int *ref_stride, int *dim_size, int is_static) |

| |

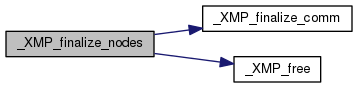

| void | _XMP_finalize_nodes (_XMP_nodes_t *nodes) |

| |

| _XMP_nodes_t * | _XMP_create_nodes_by_comm (int is_member, _XMP_comm_t *comm) |

| |

| void | _XMP_calc_rank_array (_XMP_nodes_t *n, int *rank_array, int linear_rank) |

| |

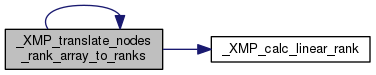

| int | _XMP_calc_linear_rank (_XMP_nodes_t *n, int *rank_array) |

| |

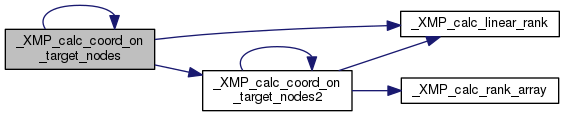

| int | _XMP_calc_linear_rank_on_target_nodes (_XMP_nodes_t *n, int *rank_array, _XMP_nodes_t *target_nodes) |

| |

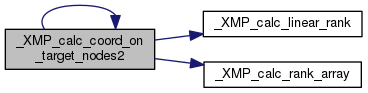

| _Bool | _XMP_calc_coord_on_target_nodes2 (_XMP_nodes_t *n, int *ncoord, _XMP_nodes_t *target_n, int *target_ncoord) |

| |

| _Bool | _XMP_calc_coord_on_target_nodes (_XMP_nodes_t *n, int *ncoord, _XMP_nodes_t *target_n, int *target_ncoord) |

| |

| _XMP_nodes_ref_t * | _XMP_init_nodes_ref (_XMP_nodes_t *n, int *rank_array) |

| |

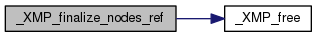

| void | _XMP_finalize_nodes_ref (_XMP_nodes_ref_t *nodes_ref) |

| |

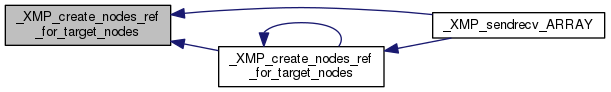

| _XMP_nodes_ref_t * | _XMP_create_nodes_ref_for_target_nodes (_XMP_nodes_t *n, int *rank_array, _XMP_nodes_t *target_nodes) |

| |

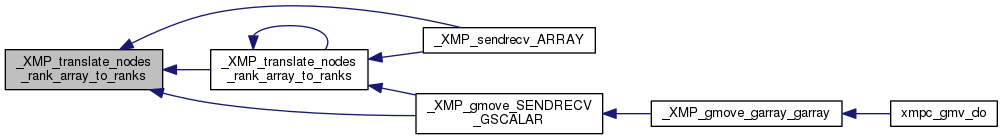

| void | _XMP_translate_nodes_rank_array_to_ranks (_XMP_nodes_t *nodes, int *ranks, int *rank_array, int shrink_nodes_size) |

| |

| int | _XMP_get_next_rank (_XMP_nodes_t *nodes, int *rank_array) |

| |

| int | _XMP_calc_nodes_index_from_inherit_nodes_index (_XMP_nodes_t *nodes, int inherit_nodes_index) |

| |

| void | _XMP_push_nodes (_XMP_nodes_t *nodes) |

| |

| void | _XMP_pop_nodes (void) |

| |

| void | _XMP_pop_n_free_nodes (void) |

| |

| void | _XMP_pop_n_free_nodes_wo_finalize_comm (void) |

| |

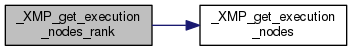

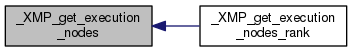

| _XMP_nodes_t * | _XMP_get_execution_nodes (void) |

| |

| int | _XMP_get_execution_nodes_rank (void) |

| |

| void | _XMP_push_comm (_XMP_comm_t *comm) |

| |

| void | _XMP_finalize_comm (_XMP_comm_t *comm) |

| |

| void | _XMP_pack_vector (char *restrict dst, char *restrict src, int count, int blocklength, long stride) |

| |

| void | _XMP_pack_vector2 (char *restrict dst, char *restrict src, int count, int blocklength, int nnodes, int type_size, int src_block_dim) |

| |

| void | _XMP_unpack_vector (char *restrict dst, char *restrict src, int count, int blocklength, long stride) |

| |

| void | _XMPF_unpack_transpose_vector (char *restrict dst, char *restrict src, int dst_stride, int src_stride, int type_size, int dst_block_dim) |

| |

| void | _XMP_check_reflect_type (void) |

| |

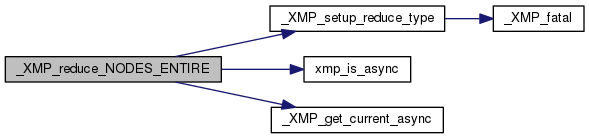

| void | _XMP_reduce_NODES_ENTIRE (_XMP_nodes_t *nodes, void *addr, int count, int datatype, int op) |

| |

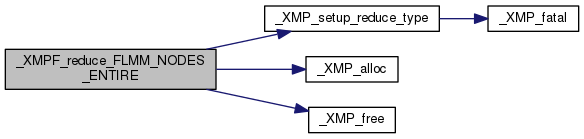

| void | _XMPF_reduce_FLMM_NODES_ENTIRE (_XMP_nodes_t *nodes, void *addr, int count, int datatype, int op, int num_locs, void **loc_vars, int *loc_types) |

| |

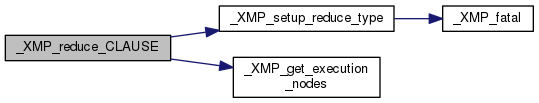

| void | _XMP_reduce_CLAUSE (void *data_addr, int count, int datatype, int op) |

| |

| void | xmp_reduce_initialize () |

| |

| void | _XMP_set_reflect__ (_XMP_array_t *a, int dim, int lwidth, int uwidth, int is_periodic) |

| |

| void | _XMP_reflect__ (_XMP_array_t *a) |

| |

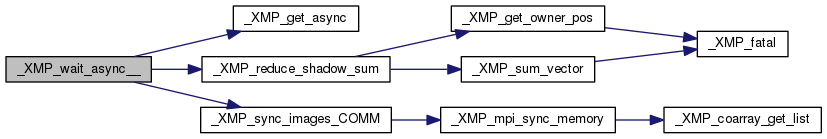

| void | _XMP_wait_async__ (int async_id) |

| |

| void | _XMP_reflect_async__ (_XMP_array_t *a, int async_id) |

| |

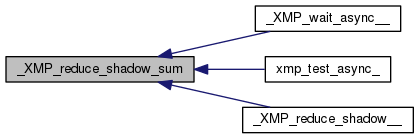

| void | _XMP_reduce_shadow_wait (_XMP_array_t *a) |

| |

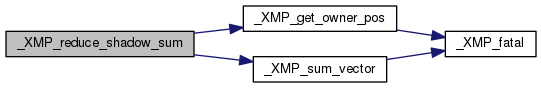

| void | _XMP_reduce_shadow_sum (_XMP_array_t *a) |

| |

| void | _XMP_init (int argc, char **argv, MPI_Comm comm) |

| |

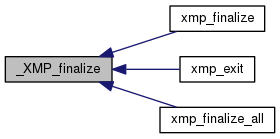

| void | _XMP_finalize (bool isFinalize) |

| |

| size_t | _XMP_get_datatype_size (int datatype) |

| |

| void | print_rsd (_XMP_rsd_t *rsd) |

| |

| void | print_bsd (_XMP_bsd_t *bsd) |

| |

| void | print_csd (_XMP_csd_t *csd) |

| |

| void | print_comm_set (_XMP_comm_set_t *comm_set0) |

| |

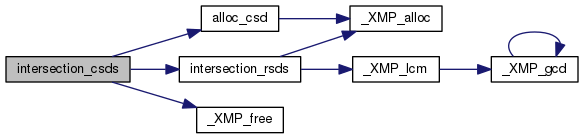

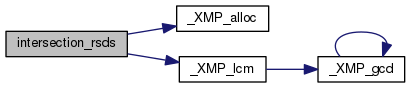

| _XMP_rsd_t * | intersection_rsds (_XMP_rsd_t *_rsd1, _XMP_rsd_t *_rsd2) |

| |

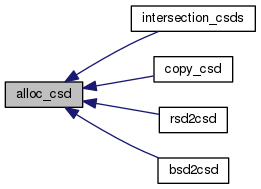

| _XMP_csd_t * | intersection_csds (_XMP_csd_t *csd1, _XMP_csd_t *csd2) |

| |

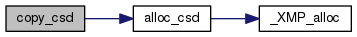

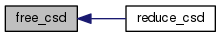

| _XMP_csd_t * | alloc_csd (int n) |

| |

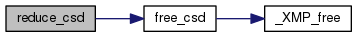

| void | free_csd (_XMP_csd_t *csd) |

| |

| _XMP_csd_t * | copy_csd (_XMP_csd_t *csd) |

| |

| int | get_csd_size (_XMP_csd_t *csd) |

| |

| void | free_comm_set (_XMP_comm_set_t *comm_set) |

| |

| _XMP_csd_t * | rsd2csd (_XMP_rsd_t *rsd) |

| |

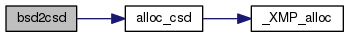

| _XMP_csd_t * | bsd2csd (_XMP_bsd_t *bsd) |

| |

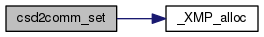

| _XMP_comm_set_t * | csd2comm_set (_XMP_csd_t *csd) |

| |

| void | reduce_csd (_XMP_csd_t *csd[_XMP_N_MAX_DIM], int ndims) |

| |

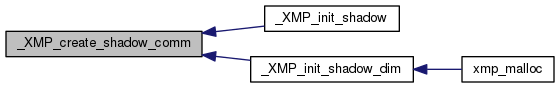

| void | _XMP_create_shadow_comm (_XMP_array_t *array, int array_index) |

| |

| void | _XMP_reflect_shadow_FULL (void *array_addr, _XMP_array_t *array_desc, int array_index) |

| |

| void | _XMP_init_reflect_sched (_XMP_reflect_sched_t *sched) |

| |

| void | _XMP_finalize_reflect_sched (_XMP_reflect_sched_t *sched, _Bool free_buf) |

| |

| void | _XMP_init_shadow (_XMP_array_t *array,...) |

| |

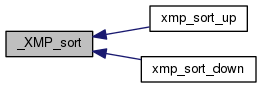

| void | _XMP_sort (_XMP_array_t *a_desc, _XMP_array_t *b_desc, int is_up) |

| |

| int | xmpc_ltog (int local_idx, _XMP_template_t *template, int template_index, int offset) |

| |

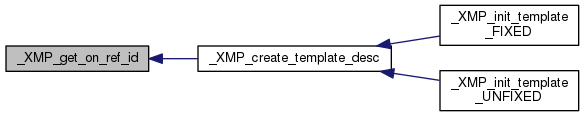

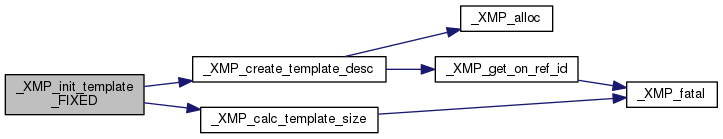

| _XMP_template_t * | _XMP_create_template_desc (int dim, _Bool is_fixed) |

| |

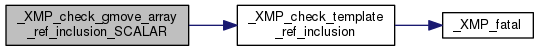

| int | _XMP_check_template_ref_inclusion (int ref_lower, int ref_upper, int ref_stride, _XMP_template_t *t, int index) |

| |

| void | _XMP_init_template_FIXED (_XMP_template_t **template, int dim,...) |

| |

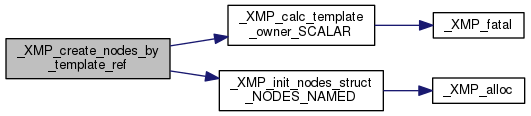

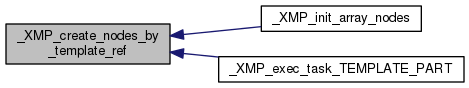

| _XMP_nodes_t * | _XMP_create_nodes_by_template_ref (_XMP_template_t *ref_template, int *shrink, long long *ref_lower, long long *ref_upper, long long *ref_stride) |

| |

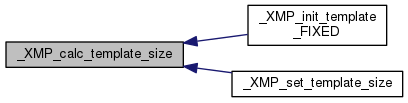

| void | _XMP_calc_template_size (_XMP_template_t *t) |

| |

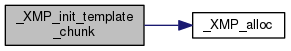

| void | _XMP_init_template_chunk (_XMP_template_t *template, _XMP_nodes_t *nodes) |

| |

| void | _XMP_finalize_template (_XMP_template_t *template) |

| |

| int | _XMP_calc_template_owner_SCALAR (_XMP_template_t *ref_template, int dim_index, long long ref_index) |

| |

| int | _XMP_calc_template_par_triplet (_XMP_template_t *template, int template_index, int nodes_rank, int *template_lower, int *template_upper, int *template_stride) |

| |

| void | _XMP_dist_template_DUPLICATION (_XMP_template_t *template, int template_index) |

| |

| void | _XMP_dist_template_BLOCK (_XMP_template_t *template, int template_index, int nodes_index) |

| |

| void | _XMP_dist_template_CYCLIC (_XMP_template_t *template, int template_index, int nodes_index) |

| |

| void | _XMP_dist_template_BLOCK_CYCLIC (_XMP_template_t *template, int template_index, int nodes_index, unsigned long long width) |

| |

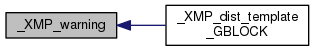

| void | _XMP_dist_template_GBLOCK (_XMP_template_t *template, int template_index, int nodes_index, int *mapping_array, int *temp0) |

| |

| unsigned long long | _XMP_get_on_ref_id (void) |

| |

| void * | _XMP_alloc (size_t size) |

| |

| void | _XMP_free (void *p) |

| |

| void | _XMP_fatal (char *msg) |

| |

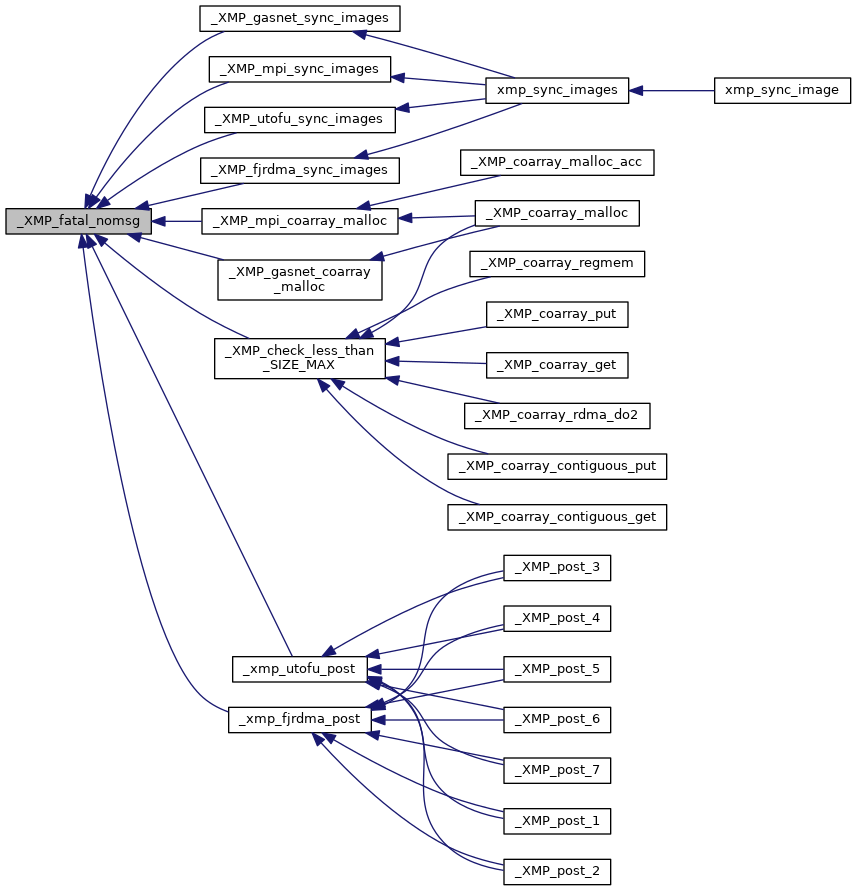

| void | _XMP_fatal_nomsg () |

| |

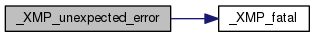

| void | _XMP_unexpected_error (void) |

| |

| void | _XMP_warning (char *msg) |

| |

| void | _XMP_init_world (int *argc, char ***argv) |

| |

| void | _XMP_finalize_world (bool) |

| |

| int | _XMP_split_world_by_color (int color) |

| |

| _Bool | xmp_is_async () |

| |

| void | _XMP_threads_init (void) |

| |

| void | _XMP_threads_finalize (void) |

| |

|

| int | _XMPC_running |

| |

| int | _XMPF_running |

| |

| void(* | _xmp_pack_array )(void *buffer, void *src, int array_type, size_t array_type_size, int array_dim, int *l, int *u, int *s, unsigned long long *d) |

| |

| void(* | _xmp_unpack_array )(void *dst, void *buffer, int array_type, size_t array_type_size, int array_dim, int *l, int *u, int *s, unsigned long long *d) |

| |

| _XMP_coarray_list_t * | _XMP_coarray_list_head |

| |

| _XMP_coarray_list_t * | _XMP_coarray_list_tail |

| |

| int | _XMP_world_size |

| |

| int | _XMP_world_rank |

| |

| void * | _XMP_world_nodes |

| |

◆ _XMP_ASSERT

#define _XMP_ASSERT(_flag)

◆ _XMP_INTERNAL

◆ _XMP_IS_SINGLE

◆ _XMP_RETURN_IF_AFTER_FINALIZATION

#define _XMP_RETURN_IF_AFTER_FINALIZATION

◆ _XMP_RETURN_IF_SINGLE

#define _XMP_RETURN_IF_SINGLE

◆ _XMP_TEND

#define _XMP_TEND(t, t0)

◆ _XMP_TEND2

#define _XMP_TEND2(t, tt, t0)

◆ _XMP_TSTART

#define _XMP_TSTART(t0)

◆ MAX

#define MAX(a, b) ( (a)>(b) ? (a) : (b) )

◆ MIN

#define MIN(a, b) ( (a)<(b) ? (a) : (b) )
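MAX and MIN are ordinary function-like macros, so an argument may be evaluated more than once and should not carry side effects. The sketch below copies the two definitions and adds a hypothetical helper, `clamp_ll`, which is not part of libxmp, to show a side-effect-free use:

```c
#include <assert.h>

/* Same definitions as documented above. */
#define MAX(a, b) ( (a)>(b) ? (a) : (b) )
#define MIN(a, b) ( (a)<(b) ? (a) : (b) )

/* Hypothetical helper (not part of libxmp): clamp v into [lo, hi].
 * The arguments are plain variables, so repeated evaluation inside
 * the macros is harmless here. */
static long long clamp_ll(long long v, long long lo, long long hi)
{
  assert(lo <= hi);
  return MIN(MAX(v, lo), hi);
}
```

Passing an expression such as `i++` to either macro would increment `i` more than once, which is why the helper takes its inputs by value first.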

◆ XACC_DEBUG

#define XACC_DEBUG(...) do{}while(0)

◆ _XMP_coarray_list_t

◆ _XMP_align_array_BLOCK()

void _XMP_align_array_BLOCK (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0)

long long align_lower = ai->ser_lower + align_subscript;

long long align_upper = ai->ser_upper + align_subscript;

if(align_lower < ti->ser_lower || align_upper > ti->ser_upper)

_XMP_fatal("aligned array is out of template bound");

if(template->is_owner){

long long template_lower = chunk->par_lower;

long long template_upper = chunk->par_upper;

if(align_lower < template_lower){

ai->par_lower = template_lower - align_subscript;

else if(template_upper < align_lower){

goto EXIT_CALC_PARALLEL_MEMBERS;

if(align_upper < template_lower){

goto EXIT_CALC_PARALLEL_MEMBERS;

else if(template_upper < align_upper){

ai->par_upper = template_upper - align_subscript;

EXIT_CALC_PARALLEL_MEMBERS:
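The bounds checks in this excerpt can be read as clipping the aligned array range against the template chunk owned by the calling node. A minimal sketch under that reading follows. `clip_aligned_range` and its parameters are hypothetical names, and the branches the excerpt does not show (keeping the serial bounds when no clipping is needed) are assumptions rather than the library's code.

```c
#include <assert.h>

/* Hypothetical sketch, not the libxmp implementation.
 * Array indices [ser_lower, ser_upper] are shifted by align_subscript onto
 * the template; [template_lower, template_upper] is the locally owned chunk.
 * Returns 1 and writes the locally owned array range, or 0 if it is empty. */
static int clip_aligned_range(long long ser_lower, long long ser_upper,
                              long long align_subscript,
                              long long template_lower, long long template_upper,
                              long long *par_lower, long long *par_upper)
{
  assert(ser_lower <= ser_upper && template_lower <= template_upper);
  long long align_lower = ser_lower + align_subscript;
  long long align_upper = ser_upper + align_subscript;

  /* No overlap between the aligned range and the local chunk. */
  if (template_upper < align_lower || align_upper < template_lower)
    return 0;

  /* Assumed: keep the serial bound unless the chunk boundary cuts it. */
  *par_lower = (align_lower < template_lower) ? template_lower - align_subscript
                                              : ser_lower;
  *par_upper = (template_upper < align_upper) ? template_upper - align_subscript
                                              : ser_upper;
  return 1;
}
```

Subtracting `align_subscript` converts a template coordinate back into an array index, which matches the two assignments to `par_lower` and `par_upper` visible above.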

◆ _XMP_align_array_BLOCK_CYCLIC()

void _XMP_align_array_BLOCK_CYCLIC (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0)

long long align_lower = ai->ser_lower + align_subscript;

long long align_upper = ai->ser_upper + align_subscript;

if (((align_lower < ti->ser_lower) || (align_upper > ti->ser_upper))) {

_XMP_fatal("aligned array is out of template bound");

if (template->is_owner) {

int rank_lb = rank_dist % nsize;

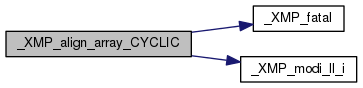

◆ _XMP_align_array_CYCLIC()

void _XMP_align_array_CYCLIC (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0)

long long align_lower = ai->ser_lower + align_subscript;

long long align_upper = ai->ser_upper + align_subscript;

if (((align_lower < ti->ser_lower) || (align_upper > ti->ser_upper))) {

_XMP_fatal("aligned array is out of template bound");

if (template->is_owner) {

◆ _XMP_align_array_DUPLICATION()

void _XMP_align_array_DUPLICATION (_XMP_array_t *array, int array_index, int template_index, long long align_subscript)

long long align_lower = lower + align_subscript;

long long align_upper = upper + align_subscript;

if (((align_lower < ti->ser_lower) || (align_upper > ti->ser_upper))) {

_XMP_fatal("aligned array is out of template bound");

if (template->is_owner) {

◆ _XMP_align_array_GBLOCK()

void _XMP_align_array_GBLOCK (_XMP_array_t *array, int array_index, int template_index, long long align_subscript, int *temp0)

long long align_lower = ai->ser_lower + align_subscript;

long long align_upper = ai->ser_upper + align_subscript;

if (((align_lower < ti->ser_lower) || (align_upper > ti->ser_upper))) {

_XMP_fatal("aligned array is out of template bound");

if (template->is_owner) {

long long template_lower = chunk->par_lower;

long long template_upper = chunk->par_upper;

if (align_lower < template_lower) {

ai->par_lower = template_lower - align_subscript;

else if (template_upper < align_lower) {

goto EXIT_CALC_PARALLEL_MEMBERS;

if (align_upper < template_lower) {

goto EXIT_CALC_PARALLEL_MEMBERS;

else if (template_upper < align_upper) {

ai->par_upper = template_upper - align_subscript;

EXIT_CALC_PARALLEL_MEMBERS:

◆ _XMP_align_array_NOT_ALIGNED()

void _XMP_align_array_NOT_ALIGNED (_XMP_array_t *array, int array_index)

◆ _XMP_alloc()

void* _XMP_alloc (size_t size)

int error = posix_memalign(&addr, 128, size);

if(addr == NULL || error != 0)
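The two visible lines suggest a 128-byte-aligned allocation that treats any failure as fatal. A minimal sketch of that pattern on a POSIX system follows. `alloc_aligned_or_abort` is a hypothetical stand-in, and it calls `abort()` where the original presumably reports the failure through the library's own error routines.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical sketch, not the libxmp implementation:
 * allocate `size` bytes aligned to 128 bytes or terminate the program. */
static void *alloc_aligned_or_abort(size_t size)
{
  void *addr = NULL;
  int error = posix_memalign(&addr, 128, size);
  if (addr == NULL || error != 0) {
    fprintf(stderr, "cannot allocate %zu bytes\n", size);
    abort();
  }
  /* posix_memalign guarantees the requested alignment on success. */
  assert((uintptr_t)addr % 128 == 0);
  return addr;
}
```

Memory obtained this way is released with `free`. Note that `posix_memalign` may legitimately return a null pointer for a zero-byte request, which this check would also treat as a failure.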

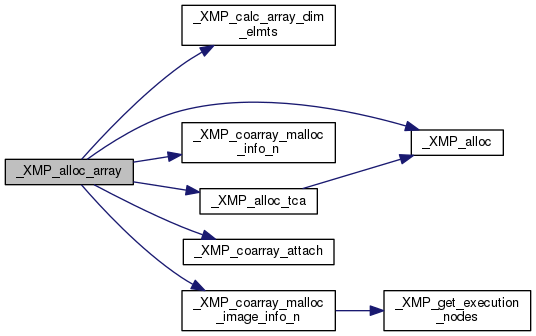

◆ _XMP_alloc_array()

void _XMP_alloc_array (void **array_addr, _XMP_array_t *array_desc, int is_coarray, ...)

unsigned long long total_elmts = 1;

int dim = array_desc->dim;

va_start(args, is_coarray);

for(int i=dim-1;i>=0;i--){

unsigned long long *acc = va_arg(args, unsigned long long *);

for(int i=0;i<dim;i++)

#ifdef _XMP_MPI3_ONESIDED

for (int i = 0; i < dim; i++){

int ndims_node = ndesc->dim;

int nsize[ndims_node-1];

for (int i = 0; i < ndims_node-1; i++){

array_desc->coarray = c;

◆ _XMP_alloc_array2()

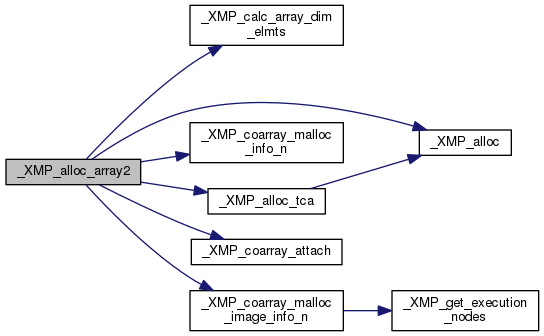

void _XMP_alloc_array2 (void **array_addr, _XMP_array_t *array_desc, int is_coarray, unsigned long long *acc[])

unsigned long long total_elmts = 1;

int dim = array_desc->dim;

for (int i = dim - 1; i >= 0; i--) {

*acc[i] = total_elmts;

for (int i = 0; i < dim; i++) {

#ifdef _XMP_MPI3_ONESIDED

for (int i = 0; i < dim; i++){

int ndims_node = ndesc->dim;

int nsize[ndims_node-1];

for (int i = 0; i < ndims_node-1; i++){

array_desc->coarray = c;
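The loop that runs from the last dimension to the first and stores `total_elmts` into `acc[i]` is the usual way to build row-major strides. The sketch below assumes that `total_elmts` is multiplied by each dimension's allocated extent right after the store, a step the excerpt does not show. `calc_dim_acc` and `extent` are hypothetical names.

```c
#include <assert.h>

/* Hypothetical sketch: fill acc[i] with the number of elements spanned by
 * one step along dimension i (row-major), and return the total count. */
static unsigned long long calc_dim_acc(int dim, const unsigned long long *extent,
                                       unsigned long long *acc)
{
  assert(dim > 0);
  unsigned long long total_elmts = 1;
  for (int i = dim - 1; i >= 0; i--) {
    acc[i] = total_elmts;      /* stride of dimension i */
    total_elmts *= extent[i];  /* assumed update, not shown in the excerpt */
  }
  return total_elmts;
}
```

For extents 2 x 3 x 4 this yields strides 12, 4, 1 and a total of 24 elements.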

◆ _XMP_barrier_EXEC()

void _XMP_barrier_EXEC (void)

◆ _XMP_barrier_NODES_ENTIRE()

MPI_Barrier(*((MPI_Comm *)nodes->comm));

◆ _XMP_bcast_NODES_ENTIRE_NODES()

void _XMP_bcast_NODES_ENTIRE_NODES (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size, _XMP_nodes_t *from_nodes, ...)

va_start(args, from_nodes);

◆ _XMP_bcast_NODES_ENTIRE_NODES_V()

void _XMP_bcast_NODES_ENTIRE_NODES_V (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size, _XMP_nodes_t *from_nodes, va_list args)

_XMP_fatal("broadcast failed, cannot find the source node");

int acc_nodes_size = 1;

int from_dim = from_nodes->dim;

int from_lower, from_upper, from_stride;

if(inherit_info == NULL){

for (int i = 0; i < from_dim; i++) {

if(inherit_info != NULL){

if(inherit_info[i].shrink == true)

size = inherit_info[i].upper - inherit_info[i].lower + 1;

if(size == 0) continue;

if (va_arg(args, int) == 1) {

root += (acc_nodes_size * rank);

from_lower = va_arg(args, int) - 1;

from_upper = va_arg(args, int) - 1;

from_stride = va_arg(args, int);

_XMP_fatal("multiple source nodes indicated in bcast directive");

root += (acc_nodes_size * (from_lower));

acc_nodes_size *= size;

for (int i = 0; i < inherit_node_dim; i++) {

if(inherit_info[i].shrink)

int size = inherit_info[i].upper - inherit_info[i].lower + 1;

int is_astrisk = va_arg(args, int);

if (is_astrisk == 1){

int rank = from_nodes->info[i].rank;

root += (acc_nodes_size * rank);

from_lower = va_arg(args, int) - 1;

from_upper = va_arg(args, int) - 1;

if(from_lower != from_upper)

_XMP_fatal("multiple source nodes indicated in bcast directive");

root += (acc_nodes_size * (from_lower - inherit_info[i].lower));

acc_nodes_size *= size;

MPI_Ibcast(addr, count*datatype_size, MPI_BYTE, root, *((MPI_Comm *)bcast_nodes->comm), &async->reqs[async->nreqs]);

MPI_Bcast(addr, count*datatype_size, MPI_BYTE, root, *((MPI_Comm *)bcast_nodes->comm));

◆ _XMP_bcast_NODES_ENTIRE_OMITTED()

void _XMP_bcast_NODES_ENTIRE_OMITTED (_XMP_nodes_t *bcast_nodes, void *addr, int count, size_t datatype_size)

*((MPI_Comm *)bcast_nodes->comm), &async->reqs[async->nreqs]);

*((MPI_Comm *)bcast_nodes->comm));

◆ _XMP_build_coarray_queue()

void _XMP_build_coarray_queue ()

Build queue for coarray.

_coarray_queue.max_size = _XMP_COARRAY_QUEUE_INITIAL_SIZE;

_coarray_queue.num = 0;

◆ _XMP_build_sync_images_table()

void _XMP_build_sync_images_table ()

Build table for sync images.

#elif _XMP_MPI3_ONESIDED

◆ _XMP_calc_array_dim_elmts()

void _XMP_calc_array_dim_elmts (_XMP_array_t *array, int array_index)

unsigned long long dim_elmts = 1;

for (int i = 0; i < dim; i++) {

if (i != array_index) {
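The visible loop skips `array_index` while accumulating into `dim_elmts`, which suggests a product over all other dimensions. A sketch under that assumption follows. `elmts_except_dim` and `extent` are hypothetical, and the per-dimension quantity that the original multiplies in is not shown in the excerpt.

```c
#include <assert.h>

/* Hypothetical sketch: number of elements in the slice obtained by
 * fixing one index of dimension array_index. */
static unsigned long long elmts_except_dim(int dim, const unsigned long long *extent,
                                           int array_index)
{
  assert(0 <= array_index && array_index < dim);
  unsigned long long dim_elmts = 1;
  for (int i = 0; i < dim; i++) {
    if (i != array_index)
      dim_elmts *= extent[i];  /* assumed: multiply the other extents */
  }
  return dim_elmts;
}
```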

◆ _XMP_calc_coord_on_target_nodes()

for(int i=1;i<dim;i++)

multiplier[i] = multiplier[i-1] * inherit_info[i].size;

for (int i=dim;i>= 0;i--){

if(inherit_info[i].shrink){

int rank_dim = j / multiplier[i];

pcoord[i] = inherit_info[i].stride * rank_dim + inherit_info[i].lower;

j = j % multiplier[i];

◆ _XMP_calc_coord_on_target_nodes2()

memcpy(target_ncoord, ncoord, sizeof(int) * n->dim);

int target_rank = 0;

for (int i = 0; i < target_p->dim; i++){

if (inherit_info[i].shrink){

else if (target_pcoord[i] < inherit_info[i].lower || target_pcoord[i] > inherit_info[i].upper){

for (int i = 0; i < target_n->dim; i++){

target_ncoord[i] = -1;

int target_rank_dim = (target_pcoord[i] - inherit_info[i].lower) / inherit_info[i].stride;

target_rank += multiplier * target_rank_dim;

multiplier *= _XMP_M_COUNT_TRIPLETi(inherit_info[i].lower, inherit_info[i].upper, inherit_info[i].stride);

◆ _XMP_calc_copy_chunk()

if(copy_chunk_dim == 0)

return array[0].length * array[0].distance;

if(array[copy_chunk_dim-1].stride == 1)

return array[copy_chunk_dim-1].length * array[copy_chunk_dim-1].distance;

return array[copy_chunk_dim-1].distance;
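The three return statements select the size of the largest contiguous chunk that can be copied at once. The sketch below restates them with a hypothetical `section_t` that holds only the three fields the excerpt uses. The units (`length` and `stride` in elements, `distance` in bytes) are assumptions, and `copy_chunk_dim` is taken as given.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the array section descriptor. */
typedef struct {
  long long length;   /* number of selected elements in this dimension */
  long long stride;   /* step between selected elements */
  size_t    distance; /* assumed: bytes spanned by one step in this dimension */
} section_t;

/* Hypothetical sketch mirroring the three cases of the excerpt. */
static size_t calc_copy_chunk(int copy_chunk_dim, const section_t *array)
{
  assert(copy_chunk_dim >= 0);
  if (copy_chunk_dim == 0)  /* the whole section is contiguous */
    return (size_t)array[0].length * array[0].distance;

  if (array[copy_chunk_dim - 1].stride == 1)  /* a unit-stride run */
    return (size_t)array[copy_chunk_dim - 1].length * array[copy_chunk_dim - 1].distance;

  return array[copy_chunk_dim - 1].distance;  /* one step at a time */
}
```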

◆ _XMP_calc_global_index_BCAST()

int _XMP_calc_global_index_BCAST (int dst_dim, int *dst_l, int *dst_u, int *dst_s, _XMP_array_t *src_array, int *src_array_nodes_ref, int *src_l, int *src_u, int *src_s)

int dst_dim_index = 0;

int array_dim = src_array->dim;

for (int i = 0; i < array_dim; i++) {

} else if (dst_dim_index < dst_dim) {

_XMP_fatal("wrong assign statement for gmove");

int onto_nodes_index = template->chunk[template_index].onto_nodes_index;

int rank = src_array_nodes_ref[array_nodes_index];

int template_lower, template_upper, template_stride;

if (_XMP_sched_gmove_triplet(template_lower, template_upper, template_stride,

&(src_l[i]), &(src_u[i]), &(src_s[i]),

&(dst_l[dst_dim_index]), &(dst_u[dst_dim_index]), &(dst_s[dst_dim_index]))) {

} else if (dst_dim_index < dst_dim) {

_XMP_fatal("wrong assign statement for gmove");

◆ _XMP_calc_global_index_HOMECOPY()

int _XMP_calc_global_index_HOMECOPY (_XMP_array_t *dst_array, int dst_dim_index, int *dst_l, int *dst_u, int *dst_s, int *src_l, int *src_u, int *src_s)

dst_array, dst_dim_index,

src_l, src_u, src_s);

◆ _XMP_calc_gmove_array_owner_linear_rank_SCALAR()

int _XMP_calc_gmove_array_owner_linear_rank_SCALAR (_XMP_array_t *array, int *ref_index)

int array_nodes_dim = array_nodes->dim;

int rank_array[array_nodes_dim];

◆ _XMP_calc_linear_rank()

int _XMP_calc_linear_rank (_XMP_nodes_t *n, int *rank_array)

int acc_nodes_size = 1;

int nodes_dim = n->dim;

for(int i=0;i<nodes_dim;i++){

acc_rank += (rank_array[i]) * acc_nodes_size;
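The accumulation in this loop is a mixed-radix linearization in which the first dimension varies fastest. The sketch below assumes that `acc_nodes_size` is multiplied by each dimension's size after it has been used, a step the excerpt does not show. `linear_rank` and `dim_size` are hypothetical names.

```c
#include <assert.h>

/* Hypothetical sketch: convert per-dimension ranks into one linear rank,
 * with dimension 0 varying fastest. */
static int linear_rank(int nodes_dim, const int *dim_size, const int *rank_array)
{
  int acc_rank = 0;
  int acc_nodes_size = 1;
  for (int i = 0; i < nodes_dim; i++) {
    assert(0 <= rank_array[i] && rank_array[i] < dim_size[i]);
    acc_rank += rank_array[i] * acc_nodes_size;
    acc_nodes_size *= dim_size[i];  /* assumed update, not shown in the excerpt */
  }
  return acc_rank;
}
```

On a 2 x 3 node grid, the coordinates (1, 2) map to 1 + 2 * 2 = 5.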

◆ _XMP_calc_linear_rank_on_target_nodes()

if(_XMP_compare_nodes(n, target_nodes)){

if(inherit_nodes == NULL){

int inherit_nodes_dim = inherit_nodes->dim;

int *new_rank_array = _XMP_alloc(sizeof(int) * inherit_nodes_dim);

for(int i=0;i<inherit_nodes_dim;i++){

if(inherit_info[i].shrink){

new_rank_array[i] = 0;

new_rank_array[i] = ((inherit_info[i].stride) * rank_array[j]) + (inherit_info[i].lower);

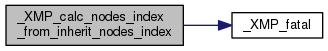

◆ _XMP_calc_nodes_index_from_inherit_nodes_index()

| int _XMP_calc_nodes_index_from_inherit_nodes_index |

( |

_XMP_nodes_t * |

nodes, |

|

|

int |

inherit_nodes_index |

|

) |

| |

1312 if(inherit_nodes == NULL)

1315 int nodes_index = 0;

1316 int inherit_nodes_index_count = 0;

1317 int inherit_nodes_dim = inherit_nodes->

dim;

1318 for(

int i=0;i<inherit_nodes_dim;i++,inherit_nodes_index_count++){

1319 if(inherit_nodes_index_count == inherit_nodes_index){

1328 _XMP_fatal(

"the function does not reach here");

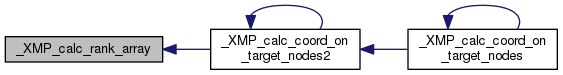

◆ _XMP_calc_rank_array()

| void _XMP_calc_rank_array (_XMP_nodes_t *n, int *rank_array, int linear_rank) |

int j = linear_rank;

for(int i=n->dim-1;i>=0;i--){
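
The loop runs from the highest dimension down, which suggests the inverse of the linear-rank calculation: peel off one coordinate per dimension by division and remainder. The sketch below works under that assumption. `rank_array_of` and the plain `dim_size` array are hypothetical names, and the loop body is a reconstruction rather than the library's code.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: splits a linear rank into
 * per-dimension ranks, assuming dimension 0 varies fastest. */
void rank_array_of(const int *dim_size, int nodes_dim, int linear_rank,
                   int *rank_array)
{
    assert(nodes_dim > 0 && linear_rank >= 0);

    /* Product of the sizes of all dimensions below the highest one. */
    int acc = 1;
    for (int i = 0; i < nodes_dim - 1; i++) {
        acc *= dim_size[i];
    }

    int j = linear_rank;
    for (int i = nodes_dim - 1; i >= 0; i--) {
        rank_array[i] = j / acc;   /* coordinate in dimension i */
        j %= acc;                  /* remainder belongs to lower dimensions */
        if (i > 0) {
            acc /= dim_size[i - 1];
        }
    }
}
```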

◆ _XMP_calc_template_owner_SCALAR()

| int _XMP_calc_template_owner_SCALAR (_XMP_template_t *ref_template, int dim_index, long long ref_index) |

for (int i = 0; i < np; i++){

if (m[i] <= ref_index && ref_index < m[i+1]){
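
The visible test treats `m` as a table of ascending boundaries, with node `i` owning the half-open range from `m[i]` up to `m[i+1]`. A minimal sketch of such a lookup follows. The name `boundary_owner` and the return value of -1 for "no owner" are assumptions made for illustration, and which distribution this branch serves is not shown in the excerpt.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: m holds np + 1 ascending
 * boundaries and node i owns [m[i], m[i+1]).
 * Returns -1 when no node owns ref_index. */
int boundary_owner(const long long *m, int np, long long ref_index)
{
    assert(np > 0);
    for (int i = 0; i < np; i++) {
        if (m[i] <= ref_index && ref_index < m[i + 1]) {
            return i;
        }
    }
    return -1;
}
```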

◆ _XMP_calc_template_par_triplet()

| int _XMP_calc_template_par_triplet (_XMP_template_t *template, int template_index, int nodes_rank, int *template_lower, int *template_upper, int *template_stride) |

int par_lower = 0, par_upper = 0, par_stride = 0;

par_lower = ser_lower;

par_upper = ser_upper;

par_lower = nodes_rank * par_chunk_width + ser_lower;

int owner_nodes_size = _XMP_M_CEILi(ser_size, par_chunk_width);

if (nodes_rank == (owner_nodes_size - 1)) {

par_upper = ser_upper;

} else if (nodes_rank >= owner_nodes_size) {

par_upper = par_lower + par_chunk_width - 1;

int nodes_size = ni->size;

if (template_size < nodes_size) {

if (nodes_rank < template_size) {

int par_index = ser_lower + (nodes_rank * par_width);

par_lower = par_index;

par_upper = par_index;

int div = template_size / nodes_size;

int mod = template_size % nodes_size;

if(nodes_rank >= mod) {

par_lower = ser_lower + (nodes_rank * par_width);

par_upper = par_lower + (nodes_size * (par_size - 1) * par_width);

par_stride = nodes_size * par_width;

*template_lower = par_lower;

*template_upper = par_upper;

*template_stride = par_stride;
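
The block branch of this excerpt can be restated as a small standalone function. This is a sketch under assumptions, not the runtime's code. `block_bounds` is a hypothetical name, and the chunk width is assumed to be the ceiling of the template size divided by the node count, which the excerpt does not show.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical helper mirroring the block branch of the excerpt.
 * Returns false when nodes_rank owns no element. */
bool block_bounds(int ser_lower, int ser_upper, int nodes_size, int nodes_rank,
                  int *par_lower, int *par_upper)
{
    assert(nodes_size > 0 && ser_lower <= ser_upper && nodes_rank >= 0);
    int ser_size = ser_upper - ser_lower + 1;
    /* Assumed chunk width: ceil(ser_size / nodes_size). */
    int par_chunk_width = (ser_size + nodes_size - 1) / nodes_size;
    /* Number of nodes that actually receive a chunk. */
    int owner_nodes_size = (ser_size + par_chunk_width - 1) / par_chunk_width;

    if (nodes_rank >= owner_nodes_size) {
        return false;
    }
    *par_lower = nodes_rank * par_chunk_width + ser_lower;
    if (nodes_rank == owner_nodes_size - 1) {
        *par_upper = ser_upper;  /* last owner takes whatever remains */
    } else {
        *par_upper = *par_lower + par_chunk_width - 1;
    }
    return true;
}
```

With a template of 1..9 on four nodes the chunk width is 3, so only three nodes own elements and the fourth owns none.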

◆ _XMP_calc_template_size()

40 for(

int i=0;i<dim;i++){

44 if(ser_lower > ser_upper)

45 _XMP_fatal(

"the lower bound of template should be less than or equal to the upper bound");

◆ _XMP_check_gmove_array_ref_inclusion_SCALAR()

| int _XMP_check_gmove_array_ref_inclusion_SCALAR |

( |

_XMP_array_t * |

array, |

|

|

int |

array_index, |

|

|

int |

ref_index |

|

) |

| |

◆ _XMP_check_reflect_type()

| void _XMP_check_reflect_type (void) |

char *reflect_type = getenv("XMP_REFLECT_TYPE");

if (strcmp(reflect_type, "REFLECT_NOPACK") == 0){

else if (strcmp(reflect_type, "REFLECT_PACK") == 0){

◆ _XMP_check_template_ref_inclusion()

| int _XMP_check_template_ref_inclusion |

( |

int |

ref_lower, |

|

|

int |

ref_upper, |

|

|

int |

ref_stride, |

|

|

_XMP_template_t * |

t, |

|

|

int |

index |

|

) |

| |

248 _XMP_validate_template_ref(&ref_lower, &ref_upper, &ref_stride, info->

ser_lower, info->

ser_upper);

255 return _XMP_check_template_ref_inclusion_width_1(ref_lower, ref_upper, ref_stride,

258 return _XMP_check_template_ref_inclusion_width_N(ref_lower, ref_upper, ref_stride, t, index);

◆ _XMP_coarray_lastly_deallocate()

| void _XMP_coarray_lastly_deallocate |

( |

| ) |

|

Deallocate memory space and an object of the last coarray.

1651 #elif _XMP_MPI3_ONESIDED

1656 _XMP_coarray_deallocate(_last_coarray_ptr);

◆ _XMP_create_nodes_by_comm()

1010 MPI_Comm_size(*((MPI_Comm *)comm), &size);

1012 _XMP_nodes_t *n = _XMP_create_new_nodes(is_member, 1, size, comm);

1016 MPI_Comm_rank(*((MPI_Comm *)comm), &(n->

info[0].

rank));

◆ _XMP_create_nodes_by_template_ref()

| _XMP_nodes_t* _XMP_create_nodes_by_template_ref |

( |

_XMP_template_t * |

ref_template, |

|

|

int * |

shrink, |

|

|

long long * |

ref_lower, |

|

|

long long * |

ref_upper, |

|

|

long long * |

ref_stride |

|

) |

| |

466 int onto_nodes_dim = onto_nodes->

dim;

467 int onto_nodes_shrink[onto_nodes_dim];

468 int onto_nodes_ref_lower[onto_nodes_dim];

469 int onto_nodes_ref_upper[onto_nodes_dim];

470 int onto_nodes_ref_stride[onto_nodes_dim];

472 for(

int i=0;i<onto_nodes_dim;i++)

473 onto_nodes_shrink[i] = 1;

475 int new_nodes_dim = 0;

477 int acc_dim_size = 1;

478 int ref_template_dim = ref_template->

dim;

480 for(

int i=0;i<ref_template_dim;i++){

488 onto_nodes_shrink[onto_nodes_index] = 0;

494 onto_nodes_ref_lower[onto_nodes_index] = j;

495 onto_nodes_ref_upper[onto_nodes_index] = j;

496 onto_nodes_ref_stride[onto_nodes_index] = 1;

498 new_nodes_dim_size[new_nodes_dim] = 1;

500 onto_nodes_ref_lower[onto_nodes_index] = 1;

501 onto_nodes_ref_upper[onto_nodes_index] = size;

502 onto_nodes_ref_stride[onto_nodes_index] = 1;

505 new_nodes_dim_size[new_nodes_dim] = size;

512 if (new_nodes_dim > 0)

514 onto_nodes_ref_lower, onto_nodes_ref_upper,

◆ _XMP_create_nodes_ref_for_target_nodes()

1236 if(_XMP_compare_nodes(n, target_nodes)){

1241 if(inherit_nodes == NULL){

1248 int inherit_nodes_dim = inherit_nodes->

dim;

1249 int *new_rank_array =

_XMP_alloc(

sizeof(

int) * inherit_nodes_dim);

1253 for(

int i = 0; i < inherit_nodes_dim; i++){

1254 if(inherit_info[i].shrink){

1258 new_rank_array[i] = ((inherit_info[i].

stride) * rank_array[j]) + (inherit_info[i].

lower);

◆ _XMP_create_shadow_comm()

| void _XMP_create_shadow_comm (_XMP_array_t *array, int array_index) |

int acc_nodes_size = 1;

int nodes_dim = onto_nodes->dim;

for (int i = 0; i < nodes_dim; i++) {

int size = onto_nodes_info->size;

int rank = onto_nodes_info->rank;

if (i != onto_nodes_index) {

color += (acc_nodes_size * rank);

acc_nodes_size *= size;
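
The loop builds a color from every node dimension except one, so that processes differing only along the skipped dimension end up with the same color. Such a value is presumably then handed to `MPI_Comm_split`, though that call is not visible in the excerpt. The sketch below isolates the color calculation without MPI. `shadow_comm_color` and its array parameters are hypothetical names.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: builds a color from every
 * dimension except skip_index, so ranks that differ only along
 * skip_index share a color. */
int shadow_comm_color(const int *dim_size, const int *dim_rank,
                      int nodes_dim, int skip_index)
{
    assert(skip_index >= 0 && skip_index < nodes_dim);
    int color = 0;
    int acc_nodes_size = 1;
    for (int i = 0; i < nodes_dim; i++) {
        if (i != skip_index) {
            color += acc_nodes_size * dim_rank[i];
        }
        acc_nodes_size *= dim_size[i];
    }
    return color;
}
```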

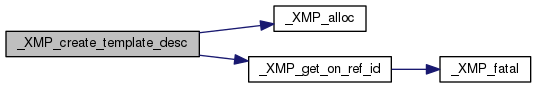

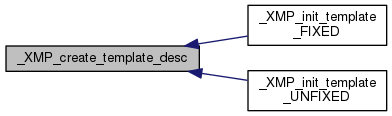

◆ _XMP_create_template_desc()

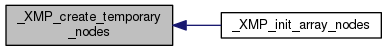

◆ _XMP_create_temporary_nodes()

260 int onto_nodes_dim = n->

dim;

262 int dim_size[onto_nodes_dim];

263 _XMP_nodes_t *new_node = _XMP_create_new_nodes(is_member, onto_nodes_dim, onto_nodes_size, n->

comm);

266 for(

int i=0;i<onto_nodes_dim;i++){

268 inherit_info[i].

lower = 1;

270 inherit_info[i].

stride = 1;

280 for(

int i=1;i<onto_nodes_dim;i++)

◆ _XMP_dealloc_array()

◆ _XMP_dist_template_BLOCK()

| void _XMP_dist_template_BLOCK |

( |

_XMP_template_t * |

template, |

|

|

int |

template_index, |

|

|

int |

nodes_index |

|

) |

| |

357 unsigned long long nodes_size = ni->

size;

363 unsigned long long nodes_rank = ni->

rank;

367 if(nodes_rank == (owner_nodes_size - 1)){

370 else if (nodes_rank >= owner_nodes_size){

371 template->is_owner =

false;

383 if((ti->

ser_size % nodes_size) == 0){

◆ _XMP_dist_template_BLOCK_CYCLIC()

| void _XMP_dist_template_BLOCK_CYCLIC |

( |

_XMP_template_t * |

template, |

|

|

int |

template_index, |

|

|

int |

nodes_index, |

|

|

unsigned long long |

width |

|

) |

| |

401 _XMP_dist_template_CYCLIC_WIDTH(

template, template_index, nodes_index, width);

◆ _XMP_dist_template_CYCLIC()

| void _XMP_dist_template_CYCLIC |

( |

_XMP_template_t * |

template, |

|

|

int |

template_index, |

|

|

int |

nodes_index |

|

) |

| |

396 _XMP_dist_template_CYCLIC_WIDTH(

template, template_index, nodes_index, 1);

◆ _XMP_dist_template_DUPLICATION()

| void _XMP_dist_template_DUPLICATION |

( |

_XMP_template_t * |

template, |

|

|

int |

template_index |

|

) |

| |

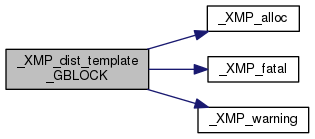

◆ _XMP_dist_template_GBLOCK()

| void _XMP_dist_template_GBLOCK (_XMP_template_t *template, int template_index, int nodes_index, int *mapping_array, int *temp0) |

long long *rsum_array = _XMP_alloc(sizeof(long long) * (ni->size + 1));

for (int i = 1; i <= ni->size; i++){

rsum_array[i] = rsum_array[i-1] + (long long)mapping_array[i-1];

_XMP_fatal("The size of the template exceeds the sum of the mapping array.");

_XMP_warning("The sum of the mapping array exceeds the size of the template.");

template->is_owner = false;

template->is_owner = true;

template->is_fixed = false;
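
The excerpt accumulates a running sum over the mapping array and then compares the total with the template size, treating one mismatch as fatal and the other as a warning. A minimal sketch of that check follows. `gblock_running_sum` and its return codes are hypothetical, and the starting value `rsum[0] = 0` is an assumption because the initialisation is not visible in the excerpt.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: fills rsum[0..nodes_size]
 * with running sums of mapping_array and compares the total with the
 * template size.
 * Returns -1 if the template is larger than the sum (fatal in the runtime),
 *          1 if the sum is larger than the template (a warning in the runtime),
 *          0 if they match. */
int gblock_running_sum(const int *mapping_array, int nodes_size,
                       long long template_size, long long *rsum)
{
    assert(nodes_size > 0);
    rsum[0] = 0;  /* assumed starting value, not shown in the excerpt */
    for (int i = 1; i <= nodes_size; i++) {
        rsum[i] = rsum[i - 1] + (long long)mapping_array[i - 1];
    }
    if (template_size > rsum[nodes_size]) return -1;
    if (template_size < rsum[nodes_size]) return 1;
    return 0;
}
```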

◆ _XMP_fatal()

| void _XMP_fatal (char *msg) |

fprintf(stderr, "[RANK:%d] XcalableMP runtime error: %s\n", _XMP_world_rank, msg);

MPI_Abort(MPI_COMM_WORLD, 1);

◆ _XMP_fatal_nomsg()

| void _XMP_fatal_nomsg |

( |

| ) |

|

50 MPI_Abort(MPI_COMM_WORLD, 1);

◆ _XMP_finalize()

| void _XMP_finalize |

( |

bool |

isFinalize | ) |

|

75 if (_XMP_runtime_working) {

78 #if defined(_XMP_GASNET) || defined(_XMP_FJRDMA) || defined(_XMP_TCA) || defined(_XMP_MPI3_ONESIDED) || defined(_XMP_UTOFU)

◆ _XMP_finalize_array_desc()

191 int dim = array->

dim;

193 for(

int i=0;i<dim;i++){

218 for(

int i=0;i<async_reflect->

nreqs;i++){

219 if (async_reflect->

datatype[i] != MPI_DATATYPE_NULL)

220 MPI_Type_free(&async_reflect->

datatype[i]);

221 if (async_reflect->

reqs[i] != MPI_REQUEST_NULL)

222 MPI_Request_free(&async_reflect->

reqs[i]);

243 #ifdef _XMP_MPI3_ONESIDED

◆ _XMP_finalize_comm()

◆ _XMP_finalize_nodes()

822 int dim = nodes->

dim;

823 int num_comms = 1<<dim;

824 for(

int i=0;i<num_comms;i++){

825 MPI_Comm *comm = (MPI_Comm *)nodes->

subcomm;

826 MPI_Comm_free(&comm[i]);

◆ _XMP_finalize_nodes_ref()

◆ _XMP_finalize_onesided_functions()

| void _XMP_finalize_onesided_functions |

( |

| ) |

|

151 #elif _XMP_MPI3_ONESIDED

◆ _XMP_finalize_reflect_sched()

243 for (

int j = 0; j < 4; j++){

244 if (sched->

req[j] != MPI_REQUEST_NULL){

245 MPI_Request_free(&sched->

req[j]);

247 if (sched->

req_reduce[j] != MPI_REQUEST_NULL){

◆ _XMP_finalize_template()

315 for (

int i = 0; i <

template->dim; i++){

◆ _XMP_finalize_world()

| void _XMP_finalize_world |

( |

bool |

| ) |

|

30 MPI_Barrier(MPI_COMM_WORLD);

◆ _XMP_free()

| void _XMP_free |

( |

void * |

p | ) |

|

◆ _XMP_G2L()

| void _XMP_G2L (long long int global_idx, int *local_idx, _XMP_template_t *template, int template_index) |

long long base = template->info[template_index].ser_lower;

*local_idx = global_idx;

*local_idx = (global_idx - base) / n_info->size;

int off = global_idx - base;

*local_idx = (off / (n_info->size*w)) * w + off%w;
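
The two division-based formulas above can be tried out in isolation. The sketch below restates them as standalone functions. The names `g2l_cyclic` and `g2l_block_cyclic` are hypothetical, and the pairing of each formula with the cyclic and block-cyclic distributions is an assumption based on the shape of the arithmetic, since the `switch` labels are not visible in the excerpt.

```c
#include <assert.h>

/* Hypothetical helpers restating the two formulas from the excerpt.
 * base is the template's lower bound, size the number of nodes in the
 * distributed dimension, w the block width. */
int g2l_cyclic(long long global_idx, long long base, int size)
{
    assert(size > 0 && global_idx >= base);
    return (int)((global_idx - base) / size);
}

int g2l_block_cyclic(long long global_idx, long long base, int size, int w)
{
    assert(size > 0 && w > 0 && global_idx >= base);
    int off = (int)(global_idx - base);
    /* Full rounds completed, times the block width, plus the offset
     * inside the current block. */
    return (off / (size * w)) * w + off % w;
}
```

With blocks of width 2 dealt to 3 nodes from base 0, global index 7 is the second element of the second block held by its owner, so its local index is 3.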

_XMP_fatal("_XMP_: unknown chunk dist_manner");

◆ _XMP_get_current_async()

◆ _XMP_get_datatype_size()

| size_t _XMP_get_datatype_size |

( |

int |

datatype | ) |

|

117 size = SIZEOF_UNSIGNED_CHAR;

break;

121 size = SIZEOF_UNSIGNED_SHORT;

break;

125 size = SIZEOF_UNSIGNED_INT;

break;

129 size = SIZEOF_UNSIGNED_LONG;

break;

133 size = SIZEOF_UNSIGNED_LONG_LONG;

break;

136 #ifdef __STD_IEC_559_COMPLEX__

137 case _XMP_N_TYPE_FLOAT_IMAGINARY:

139 size = SIZEOF_FLOAT;

break;

142 #ifdef __STD_IEC_559_COMPLEX__

143 case _XMP_N_TYPE_DOUBLE_IMAGINARY:

145 size = SIZEOF_DOUBLE;

break;

148 #ifdef __STD_IEC_559_COMPLEX__

149 case _XMP_N_TYPE_LONG_DOUBLE_IMAGINARY:

151 size = SIZEOF_LONG_DOUBLE;

break;

154 size = SIZEOF_FLOAT * 2;

break;

157 size = SIZEOF_DOUBLE * 2;

break;

160 size = SIZEOF_LONG_DOUBLE * 2;

break;

◆ _XMP_get_dim_of_allelmts()

124 for(

int i=dims-1;i>=0;i--){

125 if(array_info[i].start == 0 && array_info[i].length == array_info[i].elmts)

◆ _XMP_get_execution_nodes()

48 return _XMP_nodes_stack_top->

nodes;

◆ _XMP_get_execution_nodes_rank()

| int _XMP_get_execution_nodes_rank |

( |

void |

| ) |

|

◆ _XMP_get_next_rank()

| int _XMP_get_next_rank |

( |

_XMP_nodes_t * |

nodes, |

|

|

int * |

rank_array |

|

) |

| |

1293 int i, dim = nodes->

dim;

1297 if(rank_array[i] == size)

◆ _XMP_get_on_ref_id()

| unsigned long long _XMP_get_on_ref_id (void) |

if(_XMP_on_ref_id_count == ULLONG_MAX)

_XMP_fatal("cannot create a new nodes/template: too many");

return _XMP_on_ref_id_count++;

◆ _XMP_gmove_array_array_common()

| void _XMP_gmove_array_array_common |

( |

_XMP_gmv_desc_t * |

gmv_desc_leftp, |

|

|

_XMP_gmv_desc_t * |

gmv_desc_rightp, |

|

|

int * |

dst_l, |

|

|

int * |

dst_u, |

|

|

int * |

dst_s, |

|

|

unsigned long long * |

dst_d, |

|

|

int * |

src_l, |

|

|

int * |

src_u, |

|

|

int * |

src_s, |

|

|

unsigned long long * |

src_d, |

|

|

int |

mode |

|

) |

| |

2042 if (_XMP_gmove_garray_garray_opt(gmv_desc_leftp, gmv_desc_rightp,

2043 dst_l, dst_u, dst_s, dst_d,

2044 src_l, src_u, src_s, src_d))

return;

2048 _XMP_gmove_1to1(gmv_desc_leftp, gmv_desc_rightp, mode);

◆ _XMP_gmove_bcast_ARRAY()

| unsigned long long _XMP_gmove_bcast_ARRAY |

( |

void * |

dst_addr, |

|

|

int |

dst_dim, |

|

|

int * |

dst_l, |

|

|

int * |

dst_u, |

|

|

int * |

dst_s, |

|

|

unsigned long long * |

dst_d, |

|

|

void * |

src_addr, |

|

|

int |

src_dim, |

|

|

int * |

src_l, |

|

|

int * |

src_u, |

|

|

int * |

src_s, |

|

|

unsigned long long * |

src_d, |

|

|

int |

type, |

|

|

size_t |

type_size, |

|

|

int |

root_rank |

|

) |

| |

278 unsigned long long dst_buffer_elmts = 1;

279 for (

int i = 0; i < dst_dim; i++) {

283 void *buffer =

_XMP_alloc(dst_buffer_elmts * type_size);

288 if (root_rank == (exec_nodes->

comm_rank)) {

289 unsigned long long src_buffer_elmts = 1;

290 for (

int i = 0; i < src_dim; i++) {

294 if (dst_buffer_elmts != src_buffer_elmts) {

295 _XMP_fatal(

"wrong assign statement for gmove");

297 (*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

301 _XMP_gmove_bcast(buffer, type_size, dst_buffer_elmts, root_rank);

303 (*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);

306 return dst_buffer_elmts;

◆ _XMP_gmove_bcast_SCALAR()

| void _XMP_gmove_bcast_SCALAR |

( |

void * |

dst_addr, |

|

|

void * |

src_addr, |

|

|

size_t |

type_size, |

|

|

int |

root_rank |

|

) |

| |

◆ _XMP_gmove_garray_garray()

unsigned long long dst_total_elmts = 1;

int dst_dim = dst_array->dim;

int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

unsigned long long dst_d[dst_dim];

int dst_scalar_flag = 1;

for (int i = 0; i < dst_dim; i++) {

dst_l[i] = gmv_desc_leftp->lb[i];

dst_u[i] = gmv_desc_leftp->ub[i];

dst_s[i] = gmv_desc_leftp->st[i];

dst_scalar_flag &= (dst_s[i] == 0);

unsigned long long src_total_elmts = 1;

int src_dim = src_array->dim;

int src_l[src_dim], src_u[src_dim], src_s[src_dim];

unsigned long long src_d[src_dim];

int src_scalar_flag = 1;

for (int i = 0; i < src_dim; i++) {

src_l[i] = gmv_desc_rightp->lb[i];

src_u[i] = gmv_desc_rightp->ub[i];

src_s[i] = gmv_desc_rightp->st[i];

src_scalar_flag &= (src_s[i] == 0);

if (dst_total_elmts != src_total_elmts && !src_scalar_flag){

_XMP_fatal("wrong assign statement for gmove");

if (dst_scalar_flag && src_scalar_flag){

dst_array, src_array,

else if (!dst_scalar_flag && src_scalar_flag){

_XMP_gmove_gsection_scalar(dst_array, dst_l, dst_u, dst_s, tmp);

dst_l, dst_u, dst_s, dst_d,

src_l, src_u, src_s, src_d,
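
Both sides of the gmove are summarised by an element count and a scalar flag that stays set only while every stride is 0. The sketch below shows one way to compute the pair for a single side. `count_section` is a hypothetical name. The reading that stride 0 marks a scalar subscript contributing one element, and the count `(u - l) / s + 1` used in place of the runtime's `_XMP_M_COUNT_TRIPLETi` macro, are both assumptions.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: counts the elements selected
 * by per-dimension (lower, upper, stride) triplets. A stride of 0 is
 * treated as a scalar subscript that contributes one element.
 * *scalar_flag ends up 1 only when every dimension has stride 0.
 * Only positive strides with lower <= upper are handled. */
unsigned long long count_section(const int *l, const int *u, const int *s,
                                 int dim, int *scalar_flag)
{
    unsigned long long total_elmts = 1;
    *scalar_flag = 1;
    for (int i = 0; i < dim; i++) {
        if (s[i] != 0) {
            assert(s[i] > 0 && l[i] <= u[i]);
            total_elmts *= (unsigned long long)((u[i] - l[i]) / s[i] + 1);
        }
        *scalar_flag &= (s[i] == 0);
    }
    return total_elmts;
}
```

Comparing the two totals, and skipping the comparison when the source is a scalar, corresponds to the "wrong assign statement for gmove" check in the excerpt.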

◆ _XMP_gmove_garray_larray()

4857 int type = dst_array->

type;

4858 size_t type_size = dst_array->

type_size;

4863 unsigned long long dst_total_elmts = 1;

4865 int dst_dim = dst_array->

dim;

4866 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

4867 unsigned long long dst_d[dst_dim];

4868 int dst_scalar_flag = 1;

4869 for (

int i = 0; i < dst_dim; i++) {

4870 dst_l[i] = gmv_desc_leftp->

lb[i];

4871 dst_u[i] = gmv_desc_leftp->

ub[i];

4872 dst_s[i] = gmv_desc_leftp->

st[i];

4876 dst_scalar_flag &= (dst_s[i] == 0);

4880 unsigned long long src_total_elmts = 1;

4881 void *src_addr = gmv_desc_rightp->

local_data;

4882 int src_dim = gmv_desc_rightp->

ndims;

4883 int src_l[src_dim], src_u[src_dim], src_s[src_dim];

4884 unsigned long long src_d[src_dim];

4885 int src_scalar_flag = 1;

4886 for (

int i = 0; i < src_dim; i++) {

4887 src_l[i] = gmv_desc_rightp->

lb[i];

4888 src_u[i] = gmv_desc_rightp->

ub[i];

4889 src_s[i] = gmv_desc_rightp->

st[i];

4890 if (i == 0) src_d[i] = 1;

4891 else src_d[i] = src_d[i-1] * (gmv_desc_rightp->

a_ub[i] - gmv_desc_rightp->

a_lb[i] + 1);

4894 src_scalar_flag &= (src_s[i] == 0);

4897 if (dst_total_elmts != src_total_elmts && !src_scalar_flag){

4898 _XMP_fatal(

"wrong assign statement for gmove");

4901 char *scalar = (

char *)src_addr;

4902 if (src_scalar_flag){

4903 for (

int i = 0; i < src_dim; i++){

4904 scalar += ((src_l[i] - gmv_desc_rightp->

a_lb[i]) * src_d[i] * type_size);

4909 if (src_scalar_flag){

4910 #ifdef _XMP_MPI3_ONESIDED

4913 _XMP_fatal(

"Not supported gmove in/out on non-MPI3 environments");

4918 dst_l, dst_u, dst_s, dst_d,

4919 src_l, src_u, src_s, src_d,

4925 if (dst_scalar_flag && src_scalar_flag){

4931 for (

int i = 0; i < dst_dim; i++) {

4936 dst_addr, dst_dim, dst_l, dst_u, dst_s, dst_d,

4937 src_addr, src_dim, src_l, src_u, src_s, src_d);

4941 for (

int i = 0; i < src_dim; i++){

4942 src_l[i] -= gmv_desc_rightp->

a_lb[i];

4943 src_u[i] -= gmv_desc_rightp->

a_lb[i];

4947 int src_dim_index = 0;

4948 unsigned long long dst_buffer_elmts = 1;

4949 unsigned long long src_buffer_elmts = 1;

4950 for (

int i = 0; i < dst_dim; i++) {

4952 if (dst_elmts == 1) {

4957 dst_buffer_elmts *= dst_elmts;

4961 src_elmts =

_XMP_M_COUNT_TRIPLETi(src_l[src_dim_index], src_u[src_dim_index], src_s[src_dim_index]);

4962 if (src_elmts != 1) {

4964 }

else if (src_dim_index < src_dim) {

4967 _XMP_fatal(

"wrong assign statement for gmove");

4972 &(dst_l[i]), &(dst_u[i]), &(dst_s[i]),

4973 &(src_l[src_dim_index]), &(src_u[src_dim_index]), &(src_s[src_dim_index]))) {

4974 src_buffer_elmts *= src_elmts;

4984 for (

int i = src_dim_index; i < src_dim; i++) {

4989 if (dst_buffer_elmts != src_buffer_elmts) {

4990 _XMP_fatal(

"wrong assign statement for gmove");

4993 void *buffer =

_XMP_alloc(dst_buffer_elmts * type_size);

4994 (*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

4995 (*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);

◆ _XMP_gmove_garray_scalar()

| void _XMP_gmove_garray_scalar |

( |

_XMP_gmv_desc_t * |

gmv_desc_leftp, |

|

|

void * |

scalar, |

|

|

int |

mode |

|

) |

| |

4737 void *dst_addr = NULL;

4740 int ndims = gmv_desc_leftp->

ndims;

4743 for (

int i=0;i<ndims;i++)

4744 lidx[i] = gmv_desc_leftp->

lb[i];

4748 if (owner_rank == exec_nodes->

comm_rank){

4750 memcpy(dst_addr, scalar, type_size);

4754 #ifdef _XMP_MPI3_ONESIDED

4757 _XMP_fatal(

"Not supported gmove in/out on non-MPI3 environments");

4761 _XMP_fatal(

"_XMPF_gmove_garray_scalar: wrong gmove mode");

◆ _XMP_gmove_inout_scalar()

| void _XMP_gmove_inout_scalar |

( |

void * |

scalar, |

|

|

_XMP_gmv_desc_t * |

gmv_desc, |

|

|

int |

rdma_type |

|

) |

| |

◆ _XMP_gmove_larray_garray()

5008 size_t type_size = src_array->

type_size;

5011 unsigned long long dst_total_elmts = 1;

5012 int dst_dim = gmv_desc_leftp->

ndims;

5013 int dst_l[dst_dim], dst_u[dst_dim], dst_s[dst_dim];

5014 unsigned long long dst_d[dst_dim];

5015 int dst_scalar_flag = 1;

5016 for(

int i=0;i<dst_dim;i++){

5017 dst_l[i] = gmv_desc_leftp->

lb[i];

5018 dst_u[i] = gmv_desc_leftp->

ub[i];

5019 dst_s[i] = gmv_desc_leftp->

st[i];

5020 dst_d[i] = (i == 0)? 1 : dst_d[i-1]*(gmv_desc_leftp->

a_ub[i] - gmv_desc_leftp->

a_lb[i]+1);

5024 dst_scalar_flag &= (dst_s[i] == 0);

5028 unsigned long long src_total_elmts = 1;

5029 int src_dim = src_array->

dim;

5030 int src_l[src_dim], src_u[src_dim], src_s[src_dim];

5031 unsigned long long src_d[src_dim];

5032 int src_scalar_flag = 1;

5033 for(

int i=0;i<src_dim;i++) {

5034 src_l[i] = gmv_desc_rightp->

lb[i];

5035 src_u[i] = gmv_desc_rightp->

ub[i];

5036 src_s[i] = gmv_desc_rightp->

st[i];

5040 src_scalar_flag &= (src_s[i] == 0);

5043 if(dst_total_elmts != src_total_elmts && !src_scalar_flag){

5044 _XMP_fatal(

"wrong assign statement for gmove");

5048 if (dst_scalar_flag && src_scalar_flag){

5049 char *dst_addr = (

char *)gmv_desc_leftp->

local_data;

5050 for (

int i = 0; i < dst_dim; i++)

5051 dst_addr += ((dst_l[i] - gmv_desc_leftp->

a_lb[i]) * dst_d[i]) * type_size;

5055 else if (!dst_scalar_flag && src_scalar_flag){

5058 char *dst_addr = (

char *)gmv_desc_leftp->

local_data;

5061 for (

int i = 0; i < dst_dim; i++) {

5062 dst_l[i] -= gmv_desc_leftp->

a_lb[i];

5063 dst_u[i] -= gmv_desc_leftp->

a_lb[i];

5066 _XMP_gmove_lsection_scalar(dst_addr, dst_dim, dst_l, dst_u, dst_s, dst_d, tmp, type_size);

5076 dst_l, dst_u, dst_s, dst_d,

5077 src_l, src_u, src_s, src_d,

◆ _XMP_gmove_localcopy_ARRAY()

| void _XMP_gmove_localcopy_ARRAY (int type, int type_size, void *dst_addr, int dst_dim, int *dst_l, int *dst_u, int *dst_s, unsigned long long *dst_d, void *src_addr, int src_dim, int *src_l, int *src_u, int *src_s, unsigned long long *src_d) |

unsigned long long dst_buffer_elmts = 1;

for (int i = 0; i < dst_dim; i++) {

unsigned long long src_buffer_elmts = 1;

for (int i = 0; i < src_dim; i++) {

if (dst_buffer_elmts != src_buffer_elmts) {

_XMP_fatal("wrong assign statement for gmove");

void *buffer = _XMP_alloc(dst_buffer_elmts * type_size);

(*_xmp_pack_array)(buffer, src_addr, type, type_size, src_dim, src_l, src_u, src_s, src_d);

(*_xmp_unpack_array)(dst_addr, buffer, type, type_size, dst_dim, dst_l, dst_u, dst_s, dst_d);
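
The excerpt follows a count, check, pack, unpack pattern: both sections must select the same number of elements, the source section is packed into a contiguous buffer, and the buffer is unpacked into the destination section. The sketch below shows that pattern for one dimension only. `localcopy_1d` and `triplet_count` are hypothetical stand-ins, the real pack and unpack routines are reached through function pointers whose behaviour is not shown here, and returning -1 replaces the runtime's call to `_XMP_fatal`.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Number of indices in lower:upper:stride (positive stride assumed). */
static size_t triplet_count(int l, int u, int s)
{
    return (size_t)((u - l) / s + 1);
}

/* Hypothetical 1-D version of the pattern: copies src[sl:su:ss] into
 * dst[dl:du:ds] through a temporary contiguous buffer.
 * Returns 0 on success, -1 if the sections differ in length or the
 * buffer cannot be allocated. */
int localcopy_1d(void *dst, int dl, int du, int ds,
                 const void *src, int sl, int su, int ss, size_t type_size)
{
    assert(ds > 0 && ss > 0 && dl >= 0 && sl >= 0 && dl <= du && sl <= su);
    size_t dst_elmts = triplet_count(dl, du, ds);
    size_t src_elmts = triplet_count(sl, su, ss);
    if (dst_elmts != src_elmts) {
        return -1;  /* the runtime reports a fatal error here */
    }

    char *buffer = malloc(dst_elmts * type_size);
    if (buffer == NULL) {
        return -1;
    }
    /* Pack: gather the strided source elements into the buffer. */
    for (size_t k = 0; k < src_elmts; k++) {
        memcpy(buffer + k * type_size,
               (const char *)src + ((size_t)sl + k * (size_t)ss) * type_size,
               type_size);
    }
    /* Unpack: scatter the buffer into the strided destination. */
    for (size_t k = 0; k < dst_elmts; k++) {
        memcpy((char *)dst + ((size_t)dl + k * (size_t)ds) * type_size,
               buffer + k * type_size, type_size);
    }
    free(buffer);
    return 0;
}
```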

◆ _XMP_gmove_scalar_garray()

| void _XMP_gmove_scalar_garray |

( |

void * |

scalar, |

|

|

_XMP_gmv_desc_t * |

gmv_desc_rightp, |

|

|

int |

mode |

|

) |

| |

4708 int ndims = gmv_desc_rightp->

ndims;

4711 for (

int i=0;i<ndims;i++)

4712 ridx[i] = gmv_desc_rightp->

lb[i];

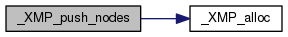

4717 #ifdef _XMP_MPI3_ONESIDED

4720 _XMP_fatal(

"Not supported gmove in/out on non-MPI3 environments");

4724 _XMP_fatal(

"_XMPF_gmove_scalar_garray: wrong gmove mode");

◆ _XMP_gtol_array_ref_triplet()

| void _XMP_gtol_array_ref_triplet |

( |

_XMP_array_t * |

array, |

|

|

int |

dim_index, |

|

|

int * |

lower, |

|

|

int * |

upper, |

|

|

int * |

stride |

|

) |

| |

127 int t_lower = *lower - align_subscript,

128 t_upper = *upper - align_subscript,

153 t_stride = t_stride / template_par_nodes_size;

162 _XMP_fatal(

"wrong distribute manner for normal shadow");

165 *lower = t_lower + align_subscript;

166 *upper = t_upper + align_subscript;

182 *lower += shadow_size_lo;

183 *upper += shadow_size_lo;

190 _XMP_fatal(

"gmove does not support shadow region for cyclic or block-cyclic distribution");

◆ _XMP_gtol_calc_offset()

| unsigned long long _XMP_gtol_calc_offset |

( |

_XMP_array_t * |

a, |

|

|

int |

g_idx[] |

|

) |

| |

2995 for(

int i=0;i<ndims;i++)

3000 unsigned long long offset = 0;

3002 for (

int i = 0; i < a->

dim; i++){

◆ _XMP_init()

| void _XMP_init |

( |

int |

argc, |

|

|

char ** |

argv, |

|

|

MPI_Comm |

comm |

|

) |

| |

32 if (!_XMP_runtime_working) {

34 MPI_Initialized(&flag);

37 MPI_Init(&argc, &argv);

54 #if defined(_XMP_GASNET) || defined(_XMP_FJRDMA) || defined(_XMP_TCA) || defined(_XMP_MPI3_ONESIDED) || defined(_XMP_UTOFU)

67 if (!_XMP_runtime_working) {

◆ _XMP_init_array_comm()

842 int acc_nodes_size = 1;

843 int template_dim = align_template->

dim;

846 va_start(args, array);

847 for(

int i=0;i<template_dim;i++){

857 size = onto_nodes_info->

size;

858 rank = onto_nodes_info->

rank;

861 if(va_arg(args,

int) == 1)

862 color += (acc_nodes_size * rank);

864 acc_nodes_size *= size;

872 MPI_Comm *comm =

_XMP_alloc(

sizeof(MPI_Comm));

◆ _XMP_init_array_comm2()

| void _XMP_init_array_comm2 |

( |

_XMP_array_t * |

array, |

|

|

int |

args[] |

|

) |

| |

890 int acc_nodes_size = 1;

891 int template_dim = align_template->

dim;

893 for (

int i = 0; i < template_dim; i++) {

902 size = onto_nodes_info->

size;

903 rank = onto_nodes_info->

rank;

907 color += (acc_nodes_size * rank);

910 acc_nodes_size *= size;

916 MPI_Comm *comm =

_XMP_alloc(

sizeof(MPI_Comm));

◆ _XMP_init_array_desc()

54 a->

order = MPI_ORDER_C;

62 #ifdef _XMP_MPI3_ONESIDED

72 va_start(args, type_size);

73 for (

int i=0;i<dim;i++){

74 int size = va_arg(args,

int);

◆ _XMP_init_array_desc_NOT_ALIGNED()

| void _XMP_init_array_desc_NOT_ALIGNED |

( |

_XMP_array_t ** |

adesc, |

|

|

_XMP_template_t * |

template, |

|

|

int |

ndims, |

|

|

int |

type, |

|

|

size_t |

type_size, |

|

|

unsigned long long * |

dim_acc, |

|

|

void * |

ap |

|

) |

| |

127 a->

order = MPI_ORDER_C;

138 #ifdef _XMP_MPI3_ONESIDED

146 for (

int i = 0; i < ndims; i++) {

◆ _XMP_init_array_nodes()

931 int template_dim = align_template->

dim;

932 int align_template_shrink[template_dim];

933 int align_template_num = 0;

934 long long align_template_lower[template_dim], align_template_upper[template_dim],

935 align_template_stride[template_dim];

937 for(

int i=0;i<template_dim;i++)

938 align_template_shrink[i] = 1;

940 int array_dim = array->

dim;

941 for(

int i=0;i<array_dim;i++){

945 align_template_shrink[align_template_index] = 0;

946 align_template_num++;

949 align_template_stride[align_template_index] = 1;

953 if(template_dim == align_template_num){

956 else if (template_dim > align_template_num){

963 align_template_upper, align_template_stride);

◆ _XMP_init_nodes_ref()

1211 int *new_rank_array =

_XMP_alloc(

sizeof(

int) * dim);

1213 int shrink_nodes_size = 1;

1214 for(

int i=0;i<dim;i++){

1215 new_rank_array[i] = rank_array[i];

1217 shrink_nodes_size *= (n->

info[i].

size);

1221 nodes_ref->

nodes = n;

1222 nodes_ref->

ref = new_rank_array;

◆ _XMP_init_nodes_struct_EXEC()

| _XMP_nodes_t* _XMP_init_nodes_struct_EXEC |

( |

int |

dim, |

|

|

int * |

dim_size, |

|

|

int |

is_static |

|

) |

| |

397 MPI_Comm *comm =

_XMP_alloc(

sizeof(MPI_Comm));

398 MPI_Comm_dup(*((MPI_Comm *)exec_nodes->

comm), comm);

405 n->

inherit_info = _XMP_calc_inherit_info(inherit_nodes);

409 _XMP_init_nodes_info(n, dim_size, is_static);

412 for(

int i=1;i<dim;i++)

◆ _XMP_init_nodes_struct_GLOBAL()

| _XMP_nodes_t* _XMP_init_nodes_struct_GLOBAL |

( |

int |

dim, |

|

|

int * |

dim_size, |

|

|

int |

is_static |

|

) |

| |

346 MPI_Comm *comm =

_XMP_alloc(

sizeof(MPI_Comm));

347 MPI_Comm_dup(MPI_COMM_WORLD, comm);

359 for(

int i=0;i<dim;i++){

360 if(dim_size[i] == -1){

362 sprintf(name,

"XMP_NODE_SIZE%d", i);

363 char *size = getenv(name);

370 else _XMP_fatal(

"XMP_NODE_SIZE not specified although '*' is in the dimension of a node array\n");

373 dim_size[i] = atoi(size);

381 _XMP_init_nodes_info(n, dim_size, is_static);

384 for(

int i=1;i<dim;i++)

387 n->

subcomm = create_subcomm(n);

◆ _XMP_init_nodes_struct_NODES_NAMED()

| _XMP_nodes_t* _XMP_init_nodes_struct_NODES_NAMED |

( |

int |

dim, |

|

|

_XMP_nodes_t * |

ref_nodes, |

|

|

int * |

shrink, |

|

|

int * |

ref_lower, |

|

|

int * |

ref_upper, |

|

|

int * |

ref_stride, |

|

|

int * |

dim_size, |

|

|

int |

is_static |

|

) |

| |

501 int ref_dim = ref_nodes->

dim;

502 int is_ref_member = ref_nodes->

is_member;

507 int acc_nodes_size = 1;

508 for(

int i=0;i<ref_dim;i++){

513 color += (acc_nodes_size * rank);

516 _XMP_validate_nodes_ref(&ref_lower[i], &ref_upper[i], &ref_stride[i], size);

517 is_member = is_member && _XMP_check_nodes_ref_inclusion(ref_lower[i], ref_upper[i], ref_stride[i], size, rank);

520 acc_nodes_size *= size;

533 for(

int i=0;i<ref_dim;i++)

539 if(check_subcomm(ref_nodes, ref_lower, ref_upper, ref_stride, shrink)){

541 comm = (MPI_Comm *)get_subcomm(ref_nodes, ref_lower, ref_upper, ref_stride, shrink);

544 else if(comm_size == 1){

547 MPI_Comm_dup(MPI_COMM_SELF, comm);

559 n->

inherit_info = _XMP_calc_inherit_info_by_ref(ref_nodes, shrink, ref_lower, ref_upper, ref_stride);

564 _XMP_init_nodes_info(n, dim_size, is_static);

567 for(

int i=1;i<dim;i++)

◆ _XMP_init_nodes_struct_NODES_NUMBER()

| _XMP_nodes_t* _XMP_init_nodes_struct_NODES_NUMBER |

( |

int |

dim, |

|

|

int |

ref_lower, |

|

|

int |

ref_upper, |

|

|

int |

ref_stride, |

|

|

int * |

dim_size, |

|

|

int |

is_static |

|

) |

| |

421 _XMP_validate_nodes_ref(&ref_lower, &ref_upper, &ref_stride,

_XMP_world_size);

424 MPI_Comm *comm =

_XMP_alloc(

sizeof(MPI_Comm));

432 int l[1] = {ref_lower};

433 int u[1] = {ref_upper};

434 int s[1] = {ref_stride};

440 _XMP_init_nodes_info(n, dim_size, is_static);

443 for(

int i=1;i<dim;i++)

446 n->

subcomm = create_subcomm(n);

◆ _XMP_init_reflect_sched()

225 for (

int j = 0; j < 4; j++){

226 sched->

req[j] = MPI_REQUEST_NULL;

◆ _XMP_init_shadow()

262 int dim = array->

dim;

264 va_start(args, array);

265 for (

int i = 0; i < dim; i++) {

268 int type = va_arg(args,

int);

277 int lo = va_arg(args,

int);

279 _XMP_fatal(

"<shadow-width> should be a nonnegative integer");

282 int hi = va_arg(args,

int);

284 _XMP_fatal(

"<shadow-width> should be a nonnegative integer");

287 if ((lo == 0) && (hi == 0)) {

◆ _XMP_init_template_chunk()

307 template->is_distributed =

true;

309 template->onto_nodes = nodes;

◆ _XMP_init_template_FIXED()

| void _XMP_init_template_FIXED |

( |

_XMP_template_t ** |

template, |

|

|

int |

dim, |

|

|

|

... |

|

) |

| |

273 for(

int i=0;i<dim;i++){

◆ _XMP_init_world()

| void _XMP_init_world (int *argc, char ***argv) |

MPI_Initialized(&flag);

if (!flag) MPI_Init(argc, argv);

MPI_Comm_dup(MPI_COMM_WORLD, comm);

MPI_Barrier(MPI_COMM_WORLD);

◆ _XMP_initialize_async_comm_tab()

| void _XMP_initialize_async_comm_tab |

( |

| ) |

|

53 _XMP_async_comm_tab[i].

nreqs = 0;

54 _XMP_async_comm_tab[i].

nnodes = 0;

55 _XMP_async_comm_tab[i].

is_used =

false;

57 _XMP_async_comm_tab[i].

node = NULL;

58 _XMP_async_comm_tab[i].

reqs = NULL;

59 _XMP_async_comm_tab[i].

gmove = NULL;

60 _XMP_async_comm_tab[i].

a = NULL;

61 _XMP_async_comm_tab[i].

next = NULL;

◆ _XMP_initialize_onesided_functions()

| void _XMP_initialize_onesided_functions |

( |

| ) |

|

76 fprintf(stderr, "Warning : Onesided operations cannot be not used in %d processes (up to %d processes)\n",

84 size_t _xmp_heap_size, _xmp_stride_size;

85 _xmp_heap_size = _get_size("XMP_ONESIDED_HEAP_SIZE");

86 _xmp_stride_size = _get_size("XMP_ONESIDED_STRIDE_SIZE");

87 _xmp_heap_size += _xmp_stride_size;

94 #elif _XMP_MPI3_ONESIDED

95 size_t _xmp_heap_size;

96 _xmp_heap_size = _get_size("XMP_ONESIDED_HEAP_SIZE");

104 #if defined(_XMP_GASNET) || defined(_XMP_FJRDMA) || defined(_XMP_TCA) || defined(_XMP_MPI3_ONESIDED) || defined(_XMP_UTOFU)

111 if(getenv("XMP_PUT_NB") != NULL){

114 printf("*** _XMP_coarray_contiguous_put() is Non-Blocking ***\n");

117 if(getenv("XMP_GET_NB") != NULL){

120 printf("*** _XMP_coarray_contiguous_get() is Non-Blocking ***\n");

123 #if defined(_XMP_FJRDMA)

124 if(getenv("XMP_PUT_NB_RR") != NULL){

128 printf("*** _XMP_coarray_contiguous_put() is Non-Blocking and Round-Robin ***\n");

131 if(getenv("XMP_PUT_NB_RR_I") != NULL){

136 printf("*** _XMP_coarray_contiguous_put() is Non-Blocking, Round-Robin and Immediately ***\n");
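The `getenv(...) != NULL` checks quoted above test only whether a variable is present, not what it contains. The following is a minimal sketch of that test in isolation; `env_flag_set` is a hypothetical helper name and is not part of libxmp.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical helper, not part of libxmp: returns true when the variable
   exists in the environment, whatever its value (even "0" or an empty
   string), which is how a getenv(...) != NULL check behaves. */
bool env_flag_set(const char *name)
{
    assert(name != NULL);
    return getenv(name) != NULL;
}
```

With a check of this shape, exporting a variable such as `XMP_PUT_NB` with any value, including `0`, counts as set; only leaving it out of the environment counts as unset.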

◆ _XMP_L2G()

| void | _XMP_L2G (int local_idx, long long int *global_idx, _XMP_template_t *template, int template_index) |

13 long long base = template->info[template_index].ser_lower;

18 *global_idx = local_idx ;

25 *global_idx = base + n_info->rank + n_info->size * local_idx;

30 *global_idx = base + n_info->rank * w

31 + (local_idx/w) * w * n_info->size + local_idx%w;

38 _XMP_fatal("_XMP_: unknown chunk dist_manner");
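The two index expressions at source lines 25 and 30-31 above can be checked in isolation. The following is a minimal sketch, assuming line 25 is the cyclic case and lines 30-31 the block-cyclic case, that `base` is the template lower bound (`ser_lower`), that `rank` and `size` are the node's position and the node count along the dimension, and that `w` is the block width. The function names `l2g_cyclic` and `l2g_block_cyclic` are hypothetical and not part of libxmp; the other branches of `_XMP_L2G` are not covered.

```c
#include <assert.h>

/* Hypothetical helpers mirroring the two expressions quoted above;
   they are not part of libxmp. */

/* Cyclic: consecutive global indices are dealt round-robin to the nodes. */
long long l2g_cyclic(int local_idx, long long base, int rank, int size)
{
    return base + rank + (long long)size * local_idx;
}

/* Block-cyclic: blocks of width w are dealt round-robin to the nodes. */
long long l2g_block_cyclic(int local_idx, long long base,
                           int rank, int size, int w)
{
    assert(w > 0);
    return base + (long long)rank * w
         + (long long)(local_idx / w) * w * size
         + local_idx % w;
}
```

For example, with 4 nodes, block width 2 and `base` 0, the node with rank 1 holds global indices 2, 3, 10, 11, and so on, so its local index 3 maps to global index 11.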

◆ _XMP_local_get()

603 size_t elmt_size = src_desc->elmt_size;

605 if(dst_contiguous && src_contiguous)

607 dst_elmts, src_elmts, elmt_size);

609 _local_NON_contiguous_copy((char *)dst+dst_offset, (char *)src_desc->real_addr+src_offset,

610 dst_dims, src_dims, dst_info, src_info, dst_elmts, src_elmts, elmt_size);

◆ _XMP_local_put()

569 size_t elmt_size = dst_desc->elmt_size;

571 if(dst_contiguous && src_contiguous)

573 dst_elmts, src_elmts, elmt_size);

575 _local_NON_contiguous_copy((char *)dst_desc->real_addr+dst_offset, (char *)src+src_offset,

576 dst_dims, src_dims, dst_info, src_info, dst_elmts, src_elmts, elmt_size);

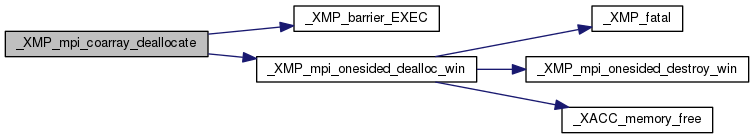

◆ _XMP_mpi_coarray_deallocate()

225 MPI_Win_unlock_all(c->win);

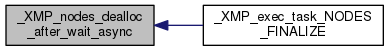

◆ _XMP_nodes_dealloc_after_wait_async()

| void | _XMP_nodes_dealloc_after_wait_async (_XMP_nodes_t *n) |

277 if(_tmp_async->nnodes == 0)

◆ _XMP_normalize_array_section()

| void | _XMP_normalize_array_section (_XMP_gmv_desc_t *gmv_desc, int idim, int *lower, int *upper, int *stride) |

936 u = u - ((u - l) % s);

940 l = l + ((u - l) % s);
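Both assignments above use the same remainder, `(u - l) % s`: one pulls the upper bound down onto the last index the stride reaches, the other pushes the lower bound up by the same amount. The following is a minimal sketch, assuming `l <= u` and `s > 0` at the point these lines run; the excerpt does not show how the two branches are selected. The function names `trim_upper` and `raise_lower` are hypothetical and not part of libxmp.

```c
#include <assert.h>

/* Hypothetical helper, not part of libxmp: walking upward from l in steps
   of s, return the last index that does not exceed u. */
int trim_upper(int l, int u, int s)
{
    assert(s > 0 && u >= l);
    return u - ((u - l) % s);
}

/* Hypothetical helper, not part of libxmp: walking downward from u in
   steps of s, return the last index that does not fall below l. */
int raise_lower(int l, int u, int s)
{
    assert(s > 0 && u >= l);
    return l + ((u - l) % s);
}
```

For the section 0:10 with stride 3, the upward walk visits 0, 3, 6, 9, so the trimmed upper bound is 9; the downward walk from 10 visits 10, 7, 4, 1, so the raised lower bound is 1.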

◆ _XMP_pack_vector()

| void | _XMP_pack_vector (char *restrict dst, char *restrict src, int count, int blocklength, long stride) |

13 #pragma omp parallel for private(i)

14 for (i = 0; i < count; i++){

15 memcpy(dst + i * blocklength, src + i * stride, blocklength);

19 for (i = 0; i < count; i++){

20 memcpy(dst + i * blocklength, src + i * stride, blocklength);
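The loop body above gathers `count` blocks of `blocklength` bytes, spaced `stride` bytes apart in `src`, into one contiguous run in `dst`. The following is a minimal stand-alone sketch of the serial variant only; `pack_blocks` is a hypothetical name, not part of libxmp, and the OpenMP variant and the condition that selects between the two are not reproduced here.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-alone version of the serial loop quoted above;
   not part of libxmp. Copies block i from src + i * stride to
   dst + i * blocklength, so dst ends up densely packed. */
void pack_blocks(char *restrict dst, const char *restrict src,
                 int count, int blocklength, long stride)
{
    assert(count >= 0 && blocklength >= 0);
    for (int i = 0; i < count; i++) {
        memcpy(dst + (size_t)i * blocklength,
               src + i * stride,
               (size_t)blocklength);
    }
}
```

Packing 3 blocks of 2 bytes with a stride of 4 from the bytes `abcdefghij` picks `ab`, `ef` and `ij`, so the destination holds `abefij`. The caller is responsible for making `dst` at least `count * blocklength` bytes long.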

◆ _XMP_pack_vector2()

| void | _XMP_pack_vector2 (char *restrict dst, char *restrict src, int count, int blocklength, int nnodes, int type_size, int src_block_dim) |

30 if (src_block_dim == 1){

31 #pragma omp parallel for private(j,k)

32 for (j = 0; j < count; j++){

33 for (k = 0; k < nnodes; k++){